Optimizing Metagenomic Binning for Low-Abundance Species: Strategies, Tools, and Clinical Applications

Abstract

Recovering genomes of low-abundance species from complex metagenomes remains a significant challenge in microbial research. This article provides a comprehensive guide for researchers and drug development professionals on optimizing binning strategies for these elusive populations. We explore the foundational challenges posed by microbial community complexity and imbalanced species distributions. The article then details state-of-the-art methodological approaches, including specialized algorithms, hybrid binning frameworks, and effective assembler-binner combinations. We further present practical troubleshooting and optimization protocols for handling common issues like strain variation and data imbalance. Finally, we offer a rigorous framework for validating binning quality using established metrics and benchmarking standards, synthesizing key performance insights from leading tools to empower more complete microbiome characterization for biomedical discovery.

The Challenge and Significance of Low-Abundance Species in Metagenomics

Defining Low-Abundance Species and Their Impact on Microbial Ecology and Human Health

Conceptual Foundation: The Microbial Rare Biosphere

What are low-abundance species in microbial ecology?

Low-abundance species, often referred to as the "rare biosphere" or "microbial dark matter," constitute the vast majority of taxonomic units in microbial communities but occur in low proportions relative to dominant species. Most studies define low abundance using relative abundance thresholds below 0.1% to 1% per sample, though standardized definitions remain challenging [1] [2]. These microorganisms represent the "butterfly effect" in microbial ecology—despite their low numbers, they may generate important markers contributing to dysbiosis and ecosystem function [2].
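Fixed-cutoff definitions are easy to apply, and the cutoff choice alone decides which taxa count as rare. The sketch below converts per-taxon read counts into relative abundances and flags taxa below a chosen cutoff. The taxon names and counts are hypothetical, and "abundance" here means share of reads, not share of cells.

```python
def relative_abundance(counts: dict[str, int]) -> dict[str, float]:
    """Return each taxon's share of the sample's total read count (0..1)."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sample has no reads")
    return {taxon: n / total for taxon, n in counts.items()}


def flag_rare(counts: dict[str, int], cutoff: float = 0.001) -> list[str]:
    """List taxa whose relative abundance falls strictly below `cutoff` (0.001 = 0.1%)."""
    rel = relative_abundance(counts)
    return sorted(taxon for taxon, share in rel.items() if share < cutoff)


if __name__ == "__main__":
    # Hypothetical sample of one million classified reads.
    sample = {"taxon_A": 600_000, "taxon_B": 395_000, "taxon_C": 4_500, "taxon_D": 500}
    print(flag_rare(sample))               # 0.1% cutoff
    print(flag_rare(sample, cutoff=0.01))  # 1% cutoff
```

With these counts, taxon_C (0.45%) is "rare" under a 1% cutoff but not under a 0.1% cutoff. That sensitivity to an arbitrary threshold is the problem the unsupervised approaches discussed below try to avoid.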

Why are low-abundance species ecologically and clinically important?

Low-abundance microorganisms serve as reservoirs of genetic diversity that contribute to ecosystem resistance and resilience [1]. They include keystone pathogens and functionally distinct taxa that disproportionately impact community structure and function despite their scarcity [3] [2]. Examples include:

- Porphyromonas gingivalis: Associated with periodontitis despite low abundance [2]

- Bacteroides fragilis: A pro-oncogenic bacterium that can remodel healthy gut microbiota [2]

- Methanobrevibacter smithii: A methanogenic archaeon that influences bacterial metabolism in ways that promote dysbiosis [2]

In clinical contexts, low-abundance genomes may be more important than dominant species in classifying disease states. Research on colorectal cancer found that carefully selected subsets of low-abundance genomes could predict cancer status with very high accuracy (0.90-0.98 AUROC) [4].

Technical Challenges & Solutions: Troubleshooting Guide

What are the major technical challenges in studying low-abundance species?

| Challenge Category | Specific Issues | Impact on Low-Abundance Species Recovery |
|---|---|---|
| Sequencing & Assembly | Uneven coverage; fragmented sequences; strain variation [5] | Reduced assembly contiguity; preferential loss of rare genomes [6] |
| Bioinformatic Limitations | Arbitrary abundance thresholds (<1%) in analyses [2] | Exclusion of true rare taxa; distorted diversity assessments [1] |
| Reference Databases | Limited genome references for unknown taxa [7] | Inability to classify novel or uncultivated species [4] |
| Experimental Design | Insufficient sequencing depth; sample size limitations [8] | Inadequate coverage for detecting rare community members [6] |

How can we improve detection of low-abundance species in metagenomic studies?

Experimental Protocol: Optimized Assembly and Binning for Rare Taxa

- Sample Preparation: Start from high-quality, minimally degraded input DNA, and quantify it fluorometrically (e.g., Qubit) rather than by UV absorbance alone [9]

- Sequencing Strategy: Employ deeper sequencing to increase probability of capturing rare taxa; consider hybrid approaches combining short-read (Illumina) and long-read (PacBio, Nanopore) technologies [6]

- Co-assembly: Combine reads from multiple samples before assembly to increase sequence depth and improve recovery of low-abundance genomes [4]

- Binning Implementation: Apply specialized binning tools (see Table 2) with parameters optimized for rare taxa recovery

- Quality Assessment: Use CheckM2 or similar tools to evaluate completeness and contamination of recovered MAGs [8]

Which computational methods best address the challenges of low-abundance species?

Unsupervised Learning Approaches: The ulrb (Unsupervised Learning based Definition of the Rare Biosphere) method uses k-medoids clustering with the partitioning around medoids (PAM) algorithm to classify taxa into abundance categories (rare, intermediate, abundant) without relying on arbitrary thresholds [1]. This method automatically determines optimal classification boundaries based on the abundance structure of each sample.
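The ulrb package itself is written in R. The Python sketch below only illustrates the underlying idea: PAM, consisting of a greedy BUILD step followed by SWAP iterations, run on one sample's abundance values with absolute difference as the distance and k = 3. It is not ulrb's code. ulrb's own preprocessing, distance, and defaults may differ, and the counts in the example are hypothetical.

```python
from itertools import product


def total_cost(values, medoids):
    """Sum of each value's absolute distance to its nearest medoid."""
    return sum(min(abs(v - values[m]) for m in medoids) for v in values)


def pam(values, k):
    """Partitioning Around Medoids on 1-D data; returns medoid indices sorted by value."""
    n = len(values)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of values")
    # BUILD: greedily add the point that lowers the total cost the most.
    medoids = []
    for _ in range(k):
        best = min((i for i in range(n) if i not in medoids),
                   key=lambda i: total_cost(values, medoids + [i]))
        medoids.append(best)
    # SWAP: apply the best improving medoid/non-medoid swap until none helps.
    cost = total_cost(values, medoids)
    while True:
        best_trial, best_cost = None, cost
        for slot, cand in product(range(k), range(n)):
            if cand in medoids:
                continue
            trial = medoids[:slot] + [cand] + medoids[slot + 1:]
            trial_cost = total_cost(values, trial)
            if trial_cost < best_cost:
                best_trial, best_cost = trial, trial_cost
        if best_trial is None:
            break
        medoids, cost = best_trial, best_cost
    return sorted(medoids, key=lambda i: values[i])


def classify(abundances, labels=("rare", "intermediate", "abundant")):
    """Label each taxon by its nearest medoid, with medoids ordered low to high."""
    centers = [abundances[m] for m in pam(abundances, len(labels))]
    return [labels[min(range(len(centers)), key=lambda j: abs(a - centers[j]))]
            for a in abundances]


if __name__ == "__main__":
    counts = [1, 2, 2, 3, 40, 45, 50, 300, 320]  # hypothetical per-taxon read counts
    print(list(zip(counts, classify(counts))))
```

Because the boundaries come from the medoids rather than a fixed percentage, they shift with each sample's abundance structure. This naive PAM is quadratic per swap pass, which is fine for illustration but not for large tables.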

Advanced Binning Tools for Rare Taxa: Tools like LorBin specifically address challenges in recovering low-abundance species through specialized clustering approaches. LorBin employs a two-stage multiscale adaptive DBSCAN and BIRCH clustering with evaluation decision models, outperforming other binners in recovering high-quality MAGs from rare species by 15-189% [7].
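The sketch below shows why a single density scale struggles with imbalanced samples. It runs a plain DBSCAN over a few `eps` values on hypothetical 2-D contig embeddings: a tight group stands in for an abundant genome and a sparse group for a rare one. This is textbook DBSCAN, not LorBin's adaptive, evaluation-guided procedure. The points, `eps` values, and `min_pts` are illustrative assumptions.

```python
import math


def dbscan(points, eps, min_pts):
    """Plain DBSCAN; returns one label per point, with -1 meaning noise."""
    labels = [None] * len(points)

    def neighbours(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        nbrs = neighbours(i)
        if len(nbrs) < min_pts:
            labels[i] = -1  # noise for now; may later be claimed as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in nbrs if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins the cluster but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_nbrs = neighbours(j)
            if len(j_nbrs) >= min_pts:  # core point: keep expanding from it
                queue.extend(j_nbrs)
    return labels


def summarize(labels):
    """(number of clusters, number of noise points)."""
    return len({lab for lab in labels if lab != -1}), labels.count(-1)


if __name__ == "__main__":
    dense = [(0.1 * i, 0.1 * j) for i in range(3) for j in range(3)]  # "abundant" genome
    sparse = [(10.0 + i, 10.0) for i in range(4)]                     # "rare" genome
    for eps in (0.5, 1.5, 20.0):
        print(eps, summarize(dbscan(dense + sparse, eps, min_pts=3)))
```

With `min_pts = 3`, a small `eps` keeps the dense group but discards the sparse one as noise, an intermediate `eps` recovers both, and a large `eps` merges them. Clustering at several scales and choosing among the results is the motivation behind multiscale approaches.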

Metagenomic Binning Optimization Framework

How do binning approaches compare for low-abundance species recovery?

Table 1: Performance comparison of binning strategies for low-abundance species

| Binning Strategy | Data Type | Advantages | Limitations | Recommended Use Cases |
|---|---|---|---|---|
| Multi-sample Binning [8] | Short-read, Long-read, Hybrid | Recovers 50-125% more high-quality MAGs than single-sample; better for identifying ARG hosts and BGCs | Computationally intensive; requires multiple samples | Large-scale studies with sufficient samples |
| Single-sample Binning [8] | Short-read, Long-read | Sample-specific; avoids inter-sample chimeras | Lower recovery of rare species; misses cross-sample patterns | Pilot studies; limited samples |
| Co-assembly Binning [8] | Short-read, Long-read | Leverages co-abundance information | Potential chimeric contigs; loses sample variation | Homogeneous communities |
| Hybrid Assembly [6] | Short-read + Long-read | Balances contiguity and accuracy; better genomic context | High misassembly rates with strain diversity; costly | When both gene identification and context are needed |

Which binning tools show best performance for low-abundance taxa?

Table 2: Binning tool performance across data types

| Binning Tool | Key Features | Low-Abundance Performance | Best Data Type |
|---|---|---|---|
| LorBin [7] | Two-stage multiscale clustering; adaptive DBSCAN & BIRCH | Generates 15-189% more high-quality MAGs; excels with imbalanced species distributions | Long-read |
| COMEBin [8] | Data augmentation; contrastive learning; Leiden clustering | Ranks first in multiple data-binning combinations; robust embeddings | Short-read, Hybrid |
| MetaBinner [8] | Ensemble algorithm; multiple feature types | High performance across data types; two-stage ensemble strategy | Short-read, Long-read |
| SemiBin2 [8] | Self-supervised learning; DBSCAN clustering | Improved feature extraction; specialized for long-read data | Long-read |
| ulrb [1] | Unsupervised k-medoids clustering | User-independent rare biosphere definition; avoids arbitrary thresholds | Abundance classification |

Research Reagent Solutions

Essential materials for low-abundance species research

Table 3: Key research reagents and their applications

| Reagent/Resource | Function | Application in Low-Abundance Studies |
|---|---|---|
| CheckM2 [8] | MAG quality assessment | Evaluates completeness/contamination of binned genomes |
| GTDB-tk [4] | Taxonomic classification | Identifies novel/uncultivated species from MAGs |
| MetaWRAP [8] | Bin refinement | Combines bins from multiple tools for improved quality |
| ULRB R package [1] | Rare biosphere definition | Unsupervised classification of rare/abundant taxa |
| Hybrid assembly pipelines [6] | Integrated assembly | Combines short-read accuracy with long-read context |

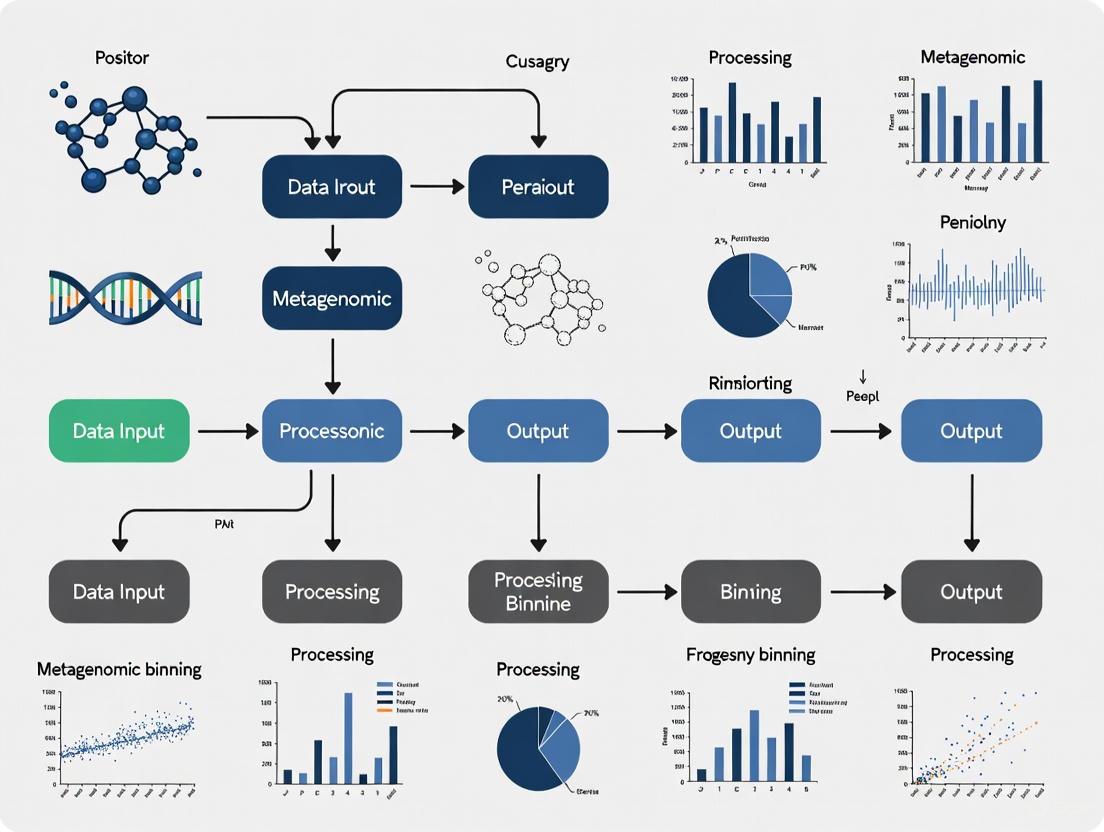

Workflow Visualization

Optimized Workflow for Low-Abundance Species

Frequently Asked Questions

How many samples are needed to adequately capture low-abundance diversity?

Multi-sample binning demonstrates substantially improved recovery of low-abundance species with larger sample sizes. While 3 samples show modest improvements, 15-30 samples enable recovery of 50-125% more high-quality MAGs from rare species [8]. For long-read data, more samples are typically needed to demonstrate substantial improvements due to lower sequencing depth in third-generation sequencing [8].
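The gain from more samples comes from co-abundance. Contigs from the same genome rise and fall together across samples, while two rare genomes that happen to have similar coverage in one sample usually diverge in others. The sketch below scores that co-variation with a Pearson correlation of per-sample coverage profiles. The profiles are hypothetical, and Pearson is a stand-in similarity; real binners use their own distances and learned embeddings.

```python
import math


def pearson(x, y):
    """Pearson correlation of two per-sample coverage profiles."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length profiles covering at least 2 samples")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a flat profile carries no co-variation signal
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Mean coverage of three hypothetical contigs in four samples.
    contig_1 = [2.0, 4.0, 1.0, 8.0]
    contig_2 = [2.2, 3.9, 1.1, 7.8]  # same genome as contig_1
    contig_3 = [2.1, 1.0, 4.0, 2.0]  # different genome; near-identical coverage in sample 1
    print(round(pearson(contig_1, contig_2), 3), round(pearson(contig_1, contig_3), 3))
```

In sample 1 alone, contig_1 and contig_3 are indistinguishable by coverage (2.0 vs 2.1). Across four samples, their profiles are anti-correlated. With only two or three samples, such correlations are noisy, which is consistent with the larger gains reported for 15-30 samples.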

Can we completely avoid arbitrary thresholds in defining low-abundance species?

While the ulrb method provides an unsupervised alternative to fixed thresholds, some degree of arbitrary decision-making remains in cluster number selection. However, the suggest_k() function in the ulrb package can automatically determine optimal clusters using metrics like average Silhouette score, Davies-Bouldin index, or Calinski-Harabasz index [1].
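The sketch below illustrates one of these criteria, the average silhouette width, on one-dimensional abundance values. It compares a few candidate labellings and keeps the k with the highest score. The labellings are supplied by hand here as a hypothetical example. ulrb's `suggest_k()` computes the index on its own clusterings, and this Python code is not part of that package.

```python
def silhouette(values, labels):
    """Average silhouette width for 1-D values under a given labelling."""
    clusters = {}
    for v, lab in zip(values, labels):
        clusters.setdefault(lab, []).append(v)
    if len(clusters) < 2:
        raise ValueError("the silhouette needs at least two clusters")
    scores = []
    for v, lab in zip(values, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        # a: mean distance to the rest of the own cluster (self contributes 0).
        a = sum(abs(v - w) for w in own) / (len(own) - 1)
        # b: mean distance to the nearest other cluster.
        b = min(sum(abs(v - w) for w in other) / len(other)
                for other_lab, other in clusters.items() if other_lab != lab)
        denom = max(a, b)
        scores.append((b - a) / denom if denom else 0.0)
    return sum(scores) / len(scores)


def pick_k(values, candidates):
    """Return the k whose labelling has the highest average silhouette."""
    return max(candidates, key=lambda k: silhouette(values, candidates[k]))


if __name__ == "__main__":
    values = [1, 2, 2, 3, 40, 45, 50, 300, 320]  # hypothetical abundances
    candidates = {
        2: [0] * 7 + [1] * 2,
        3: [0] * 4 + [1] * 3 + [2] * 2,
        4: [0] * 4 + [1] * 3 + [2, 3],
    }
    for k, labs in candidates.items():
        print(k, round(silhouette(values, labs), 3))
    print("best k:", pick_k(values, candidates))
```

The scores for k = 2 and k = 3 are close in this example. The index reduces, but does not remove, the judgement involved in choosing k, as noted above.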

How do we distinguish truly important low-abundance species from background noise?

Functional distinctiveness provides a framework for identifying low-abundance species with disproportionate ecological impacts. By integrating trait-based analysis with abundance data, researchers can identify taxa with unique functional attributes that may act as keystone species despite low abundance [3]. Genomic context from long-read sequencing further helps distinguish genuine functional capacity from transient background species [6].

Frequently Asked Questions (FAQs)

Q1: What are the primary technical hurdles in metagenomic binning for low-abundance species? The main hurdles are highly fragmented genome assemblies, uneven coverage across species (where a few are dominant and most are rare), and the presence of multiple, closely related strains within a species. These challenges are interconnected; uneven coverage leads to fragmented assemblies, and strain variation further complicates the ability to resolve complete, strain-pure genomes [10] [11] [7].

Q2: Why do my assemblies remain fragmented even with high sequencing depth? Fragmentation often occurs in complex microbial communities due to the presence of shared repetitive regions between different organisms and uneven species abundance. While high depth is beneficial, assemblers can break contigs when they cannot resolve these repetitive regions, especially when coverage information is similar across species. This is particularly problematic for low-abundance species (<1% relative abundance), which naturally yield fewer sequencing reads, resulting in lower local coverage and fragmented assemblies [12] [11] [13].
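A first-order estimate shows how quickly depth collapses for rare taxa. Mean coverage is roughly the bases sequenced from a genome divided by its length. The sketch below assumes that relative abundance means the genome's share of sequenced bases (not cells), and it ignores GC bias, duplicates, and reads lost during assembly. The run size and genome size are hypothetical.

```python
import math


def expected_coverage(total_reads, read_length_bp, rel_abundance, genome_size_bp):
    """First-order mean depth: bases drawn from this genome divided by its length."""
    return total_reads * read_length_bp * rel_abundance / genome_size_bp


def reads_for_target(target_depth, read_length_bp, rel_abundance, genome_size_bp):
    """Invert the estimate: total reads needed for a target mean depth."""
    return math.ceil(target_depth * genome_size_bp / (read_length_bp * rel_abundance))


if __name__ == "__main__":
    # Hypothetical run: 20 million 150-bp reads; 5-Mbp genome.
    for share in (0.01, 0.001):
        print(share, round(expected_coverage(20_000_000, 150, share, 5_000_000), 2))
    print(reads_for_target(10, 150, 0.001, 5_000_000))
```

Under these assumptions, a genome at 1% gets about 6x mean coverage, but at 0.1% it gets only about 0.6x. Reaching 10x at 0.1% would take roughly 333 million reads, which is why rare genomes assemble into short fragments even in "deep" runs.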

Q3: How does strain variation interfere with metagenomic binning? Strain variation refers to genetic differences (e.g., single nucleotide variants, gene insertions/deletions) between conspecific organisms. During assembly, sequences from different strains of the same species may fail to merge into a single contig due to these variations. This leads to a fractured representation of the pangenome, making it difficult for binners to group all contigs from the same species together. Consequently, you may recover multiple, incomplete "strain-level" bins instead of a single high-quality metagenome-assembled genome (MAG) [10] [14].

Q4: My binner performs well on dominant species but misses rare ones. Why? Most standard binning tools use features like sequence composition and abundance coverage to cluster contigs. In a typical microbiome with an imbalanced species distribution, the signal from low-abundance species can be obscured by the noise from dominant ones. Furthermore, the contigs from rare species are often shorter and fewer, providing insufficient data for clustering algorithms to confidently assign them to a unique bin [11] [7].
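Tetranucleotide frequency (TNF) is the standard composition feature mentioned above. The sketch below counts 4-mers, merges each with its reverse complement (136 canonical tetranucleotides), and normalizes the counts to frequencies. A short contig yields few 4-mers, so its vector is noisy. This is a generic illustration, not the feature code of any specific binner.

```python
from itertools import product

COMPLEMENT = str.maketrans("ACGT", "TGCA")


def revcomp(seq):
    """Reverse complement of an ACGT string."""
    return seq.translate(COMPLEMENT)[::-1]


# Each 4-mer is merged with its reverse complement, leaving 136 canonical 4-mers.
CANONICAL = sorted({min(k, revcomp(k))
                    for k in ("".join(p) for p in product("ACGT", repeat=4))})


def tnf(seq):
    """Canonical tetranucleotide frequency vector of a contig (sums to 1 if any 4-mer counted)."""
    counts = dict.fromkeys(CANONICAL, 0)
    seq = seq.upper()
    for i in range(len(seq) - 3):
        kmer = seq[i:i + 4]
        if set(kmer) <= set("ACGT"):  # skip k-mers containing N or other ambiguity codes
            counts[min(kmer, revcomp(kmer))] += 1
    total = sum(counts.values())
    return [counts[k] / total if total else 0.0 for k in CANONICAL]


if __name__ == "__main__":
    print(len(CANONICAL))
    vec = tnf("ACGTACGTNNAAAA")
    print(sum(vec))
```

Because strand is unknown in assembled contigs, canonical merging makes a contig and its reverse complement produce the same vector.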

Q5: What is the benefit of a hybrid sequencing approach for overcoming these hurdles? Hybrid sequencing, which combines accurate short reads (e.g., Illumina) with long reads (e.g., PacBio, Oxford Nanopore), leverages their complementary strengths. Long reads span repetitive regions and strain-specific variants, reducing assembly fragmentation. Accurate short reads then correct errors in the long-read assemblies. This synergistic approach produces longer, more accurate contigs, which is the foundation for better binning, especially for strain-aware results [10].

Troubleshooting Guides

Guide 1: Addressing Highly Fragmented Assemblies

Problem: The assembly output consists of many short contigs, and the N50 statistic is low.

Investigation & Solutions:

- Check Assembly Quality: First, use a tool like QUAST to assess assembly metrics. Manually inspect small contigs for low-complexity sequences (e.g., long homopolymer runs like "AAAAA") which can be assembly artifacts. Filter these out before binning [13].

- Re-assemble with a Different Paradigm:

- If you used a De Bruijn graph-based assembler (e.g., MEGAHIT, metaSPAdes) and have high-error long reads, try an Overlap-Layout-Consensus (OLC)-based assembler. OLC methods are more tolerant of sequencing errors and can produce longer contigs from long-read data [12].

- Consider hybrid assemblers like Opera-MS or HybridSPAdes that use both short and long reads to improve continuity [10].

- Adjust Sequencing Strategy: For future experiments, consider investing in higher-quality long reads (e.g., PacBio HiFi) that offer both length and accuracy, significantly improving assembly contiguity [10].
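The N50 check and low-complexity filter from the first step can be sketched as follows. QUAST already reports N50, so `n50` here only shows what the statistic means. The homopolymer run length, minimum contig length, and allowed homopolymer fraction are hypothetical example values, not recommendations from the cited work.

```python
import re

# One base repeated at least 10 times; the run length of 10 is an arbitrary example.
HOMOPOLYMER = re.compile(r"([ACGT])\1{9,}")


def n50(lengths):
    """Length L such that contigs of length >= L hold at least half the assembled bases."""
    total = sum(lengths)
    running = 0
    for length in sorted(lengths, reverse=True):
        running += length
        if 2 * running >= total:
            return length
    return 0  # empty assembly


def homopolymer_fraction(seq):
    """Fraction of bases that sit inside long single-base runs."""
    seq = seq.upper()
    in_runs = sum(m.end() - m.start() for m in HOMOPOLYMER.finditer(seq))
    return in_runs / len(seq) if seq else 0.0


def keep_contig(seq, min_len=1000, max_homopolymer_frac=0.2):
    """Hypothetical pre-binning filter: drop short or low-complexity contigs."""
    return len(seq) >= min_len and homopolymer_fraction(seq) <= max_homopolymer_frac


if __name__ == "__main__":
    print(n50([100, 200, 300, 400]))
    print(keep_contig("ACGT" * 300), keep_contig("A" * 600 + "ACGT" * 150))
```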

Guide 2: Improving Binning Recovery of Low-Abundance Species

Problem: The binning results contain high-quality MAGs for dominant species but fail to recover genomes from rare taxa.

Investigation & Solutions:

- Use a Specialized Binner: Standard binners may discard low-coverage contigs. Employ tools specifically designed for imbalanced species distributions. For example, LorBin uses a two-stage, multiscale adaptive clustering strategy that has been shown to generate significantly more high-quality MAGs from rare species compared to state-of-the-art tools [7].

- Optimize the Assembler-Binner Combination: The choice of assembler and binner significantly impacts recovery. Benchmarking studies suggest that for low-abundance species, the metaSPAdes-MetaBAT2 combination is highly effective. If strain resolution is the goal, MEGAHIT-MetaBAT2 may be a better choice [11].

- Leverage Complementary Binning Approaches: Some frameworks, like MetaComBin, sequentially combine two binning strategies. It first uses an abundance-based method to separate groups with different coverage, then applies an overlap-based method within each group to distinguish species with similar abundance. This can improve clustering where a single method fails [15].
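The sequential idea (split by abundance first, then separate by a second signal within each group) can be sketched on contigs as follows. MetaComBin itself works on reads and uses an overlap-based method in its second stage. This sketch swaps in a simple greedy "leader" clustering on composition vectors, so it illustrates only the two-stage structure. The contigs, the log2 coverage gap, and the distance cutoff are hypothetical.

```python
import math


def split_by_coverage(contigs, max_log2_gap=1.0):
    """Stage 1: sort by coverage and cut wherever neighbours differ by more than max_log2_gap."""
    if not contigs:
        return []
    ordered = sorted(contigs, key=lambda c: c["cov"])  # coverages must be > 0
    groups, current = [], [ordered[0]]
    for prev, nxt in zip(ordered, ordered[1:]):
        if math.log2(nxt["cov"] / prev["cov"]) > max_log2_gap:
            groups.append(current)
            current = []
        current.append(nxt)
    groups.append(current)
    return groups


def leader_cluster(group, max_dist=0.1):
    """Stage 2: greedy leader clustering on composition vectors within one coverage group."""
    leaders, bins = [], []
    for c in group:
        for i, lead in enumerate(leaders):
            if math.dist(c["comp"], lead) <= max_dist:
                bins[i].append(c["name"])
                break
        else:  # no existing leader close enough: start a new bin
            leaders.append(c["comp"])
            bins.append([c["name"]])
    return bins


def two_stage_bin(contigs):
    return [b for g in split_by_coverage(contigs) for b in leader_cluster(g)]


if __name__ == "__main__":
    contigs = [
        {"name": "a", "cov": 100.0, "comp": (0.60, 0.10)},
        {"name": "b", "cov": 110.0, "comp": (0.61, 0.11)},
        {"name": "c", "cov": 5.0, "comp": (0.40, 0.30)},
        {"name": "d", "cov": 5.5, "comp": (0.41, 0.31)},
        {"name": "e", "cov": 6.0, "comp": (0.70, 0.05)},  # rare, similar coverage to c/d
    ]
    print(two_stage_bin(contigs))
```

Coverage alone would lump c, d, and e together. The second stage separates e because its composition differs, which is the kind of case where a single method fails.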

Guide 3: Achieving Strain-Resolved Metagenome Assembly

Problem: You suspect multiple strains of a species are present, but your bins are chimeric or you cannot separate them.

Investigation & Solutions:

- Employ Strain-Aware Assemblers: Use tools built specifically for this challenge. HyLight is a hybrid approach that uses strain-resolved overlap graphs to accurately reconstruct individual strains, even from low-coverage long-read data. It has demonstrated a ~19% improvement in preserving strain identity [10]. Strainberry is another option that uses long reads to separate haplotypes [10].

- Ensure Sufficient Data Type and Coverage: Strain resolution requires data that captures long-range genetic information. Long-read sequencing (either high-coverage noisy reads or lower-coverage HiFi/Q30+ reads) is almost mandatory, as short reads are too limited to resolve complex strain-level regions [10] [14].

- Apply SNV and Pangenome Analysis: After assembly and binning, use tools that detect single-nucleotide variants (SNVs) or analyze gene content differences across your MAGs to identify and validate strain-level populations within your community [14].

Experimental Protocols & Workflows

Detailed Methodology for Strain-Resolved Hybrid Assembly

This protocol is adapted from the HyLight methodology, which is designed to produce strain-aware assemblies from low-coverage metagenomes [10].

DNA Extraction & Sequencing:

- Perform high-molecular-weight DNA extraction from the microbial sample.

- Conduct both:

  - Third-Generation Sequencing (TGS): Sequence on a platform such as PacBio (CLR or HiFi) or Oxford Nanopore (ONT) to generate long reads (~5-20+ kbp). A lower coverage (e.g., 10-15x) can be sufficient in a hybrid context to reduce costs.

  - Next-Generation Sequencing (NGS): Sequence on an Illumina platform to generate high-accuracy short reads (2x150 bp or longer) with sufficient coverage (e.g., 30-50x).

Data Preprocessing:

- Long Reads: Perform initial quality check (e.g., NanoPlot). Optional adapter removal and quality filtering.

- Short Reads: Use tools like FastQC for quality control, followed by Trimmomatic or Cutadapt to remove adapters and low-quality bases.

Dual Assembly and Mutual Scaffolding (The HyLight Core):

- Unlike traditional "short-read-first" or "long-read-first" methods, HyLight assembles both datasets independently and then merges them.

- Assemble long reads using an OLC-based assembler, guided by the short reads for error correction.

- Assemble short reads using a De Bruijn graph-based assembler, guided by the long reads for scaffolding.

- Merge the two resulting assemblies into a unified set of scaffolded contigs.

Binning and Strain Validation:

- Bin the final, merged assembly using a binner effective for long reads and strain resolution, such as LorBin [7] or SemiBin2 [7].

- Check the quality of MAGs (completeness, contamination) with CheckM or similar.

- Validate strain separation by mapping reads back to the MAGs and calling SNVs, or by analyzing the presence/absence of accessory genes.
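The SNV check in the last step can be sketched as a frequency filter over per-position base counts, such as a pileup of reads mapped back to a MAG. The input layout (position mapped to base counts) is a hypothetical simplification. Dedicated variant callers also model sequencing error, mapping quality, and strand bias, which this sketch does not.

```python
def call_snvs(pileup, min_depth=10, min_minor_frac=0.1):
    """Return (position, major base, minor base, minor fraction) for candidate SNV sites."""
    calls = []
    for pos, counts in sorted(pileup.items()):
        depth = sum(counts.values())
        if depth < min_depth:
            continue  # too few reads to judge this position
        ranked = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
        if len(ranked) < 2 or ranked[1][1] == 0:
            continue  # monomorphic position
        frac = ranked[1][1] / depth
        if frac >= min_minor_frac:
            calls.append((pos, ranked[0][0], ranked[1][0], round(frac, 3)))
    return calls


if __name__ == "__main__":
    pileup = {  # hypothetical base counts at three MAG positions
        101: {"A": 48, "G": 2},   # 4% minor allele: likely sequencing error
        205: {"C": 30, "T": 20},  # 40% minor allele: candidate strain variant
        310: {"G": 5, "A": 3},    # below minimum depth
    }
    print(call_snvs(pileup))
```

Many sites with intermediate minor-allele fractions inside one MAG suggest that it still mixes strains. A MAG that is nearly free of such sites is more likely strain-pure.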

Workflow Diagram: Strain-Resolved Hybrid Assembly

Performance Data and Tool Selection

Table 1: Benchmarking Performance of Binners on Synthetic Datasets

Data derived from benchmarking experiments on the CAMI II dataset, showing the number of high-quality bins (hBins) recovered by different tools across various habitats [7].

| Binner | Airways | Gastrointestinal Tract | Oral Cavity | Skin | Urogenital Tract |
|---|---|---|---|---|---|
| LorBin | 246 | 266 | 422 | 289 | 164 |
| SemiBin2 | 206 | 243 | 344 | 251 | 152 |
| COMEBin | 185 | 219 | 301 | 224 | 142 |
| MetaBAT2 | 162 | 201 | 279 | 198 | 131 |
| VAMB | 151 | 192 | 265 | 187 | 125 |

Table 2: Recommended Assembler-Binner Combinations for Specific Goals

Based on a study evaluating combinations for recovering low-abundance and strain-resolved genomes from human metagenomes [11].

| Research Objective | Recommended Combination | Key Advantage |
|---|---|---|
| Recovering Low-Abundance Species | metaSPAdes + MetaBAT2 | Highly effective at clustering contigs from species with <1% abundance. |
| Recovering Strain-Resolved Genomes | MEGAHIT + MetaBAT2 | Excels at separating contigs from closely related conspecific strains. |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Tools for Metagenomic Binning Optimization

| Item | Function & Application |
|---|---|
| PacBio HiFi Reads | Long-read sequencing technology providing high accuracy (>99.9%) and length (typically 10-20 kbp). Ideal for resolving repetitive regions and strain variants without the need for hybrid correction [10]. |
| Oxford Nanopore Q30+ Reads | Latest generation of nanopore sequencing offering improved raw read accuracy. Provides the longest read lengths, crucial for spanning complex genomic regions and linking strain-specific genes [10]. |
| HyLight Software | A hybrid metagenome assembly approach that implements mutual support of short and long reads. It is optimized for strain-aware assembly from low-coverage data, reducing costs while improving contiguity [10]. |
| LorBin Software | An unsupervised deep-learning binner specifically designed for long-read metagenomes. It excels at handling imbalanced species distributions and identifying novel/unknown taxa, recovering significantly more high-quality MAGs [7]. |
| metaSPAdes Assembler | A metagenomic assembler based on the De Bruijn graph paradigm. Effective for complex communities and often part of the best-performing pipeline for recovering low-abundance species [12] [11]. |
| MEGAHIT Assembler | A fast, memory-efficient NGS assembler, also based on De Bruijn graphs. Known for its effectiveness in assembling large metagenomic datasets and its utility in strain-resolved analyses [11]. |
| MetaBAT2 Binner | A popular binning algorithm that uses sequence composition and abundance to cluster contigs into MAGs. Forms a high-performing combination with several assemblers for specific goals [11]. |

The Critical Role of Binning in Accessing the Microbial 'Dark Matter'

Troubleshooting Guides and FAQs

Common Binning Problems and Solutions

| Problem | Description | Potential Solutions |
|---|---|---|
| High Complexity [5] | Samples contain DNA from many organisms, increasing data complexity. | Use tools like LorBin or BASALT designed for complex, biodiverse environments. [7] [16] |
| Fragmented Sequences [5] | Assembled contigs are short and broken, complicating bin assignment. | Utilize long-read sequencing technologies to generate longer, more continuous contigs. [7] |
| Uneven Coverage [5] | Some genomes are highly abundant, while others are rare. | Employ binners with specialized clustering algorithms (e.g., LorBin's multiscale adaptive DBSCAN) to handle imbalanced species distributions. [7] |
| Strain Variation [5] | Significant genetic variation within a species blurs binning boundaries. | Leverage tools like BASALT that use neural networks and core sequences for refined, high-resolution binning. [16] |
| Low-Abundance Species [11] | Genomes representing <1% of the community are difficult to recover. | Optimize the assembler-binner combination (e.g., metaSPAdes-MetaBAT2 for low-abundance species). [11] |

Frequently Asked Questions (FAQs)

Q1: What is the fundamental difference between metagenomic binning and profiling?

- Binning is the process of grouping DNA sequences (contigs) into bins that ideally represent individual microbial genomes or taxonomic groups. [17] The output is a set of Metagenome-Assembled Genomes (MAGs).

- Profiling estimates the relative abundance or frequency of known taxa in a community based on the sequence sample. Its main output is a vector of relative abundances. [17]

Q2: My binner struggles with unknown species not in any database. What are my options? Use unsupervised or self-supervised binning tools that do not rely on reference genomes. For example:

- LorBin uses an unsupervised deep-learning approach with a self-supervised variational autoencoder to handle unknown taxa effectively. [7]

- BASALT performs binning and refinement based on sequence features like coverage and tetranucleotide frequency without requiring a priori knowledge of the species present. [16]

Q3: Which tool combinations are recommended for recovering low-abundance species and strains? The choice of assembler and binner combination significantly impacts results. Based on benchmarking: [11]

| Research Goal | Recommended Combination |
|---|---|
| Recovering low-abundance species (<1%) | metaSPAdes assembler + MetaBAT 2 binner |
| Recovering strain-resolved genomes | MEGAHIT assembler + MetaBAT 2 binner |

Q4: How can I objectively evaluate the quality of my recovered MAGs?

- Use standardized metrics like completeness and contamination as defined by the Minimum Information about a Metagenome-Assembled Genome (MIMAG) standard. [16]

- Tools like CheckM can calculate these metrics by using single-copy marker genes. [5] [16]

- For a comprehensive benchmark, use the AMBER assessment package, which is also used by the CAMI challenge to evaluate binning performance. [17]
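The marker-gene logic behind these metrics, and the MIMAG tiers, can be sketched as follows. The estimate is deliberately simplified: completeness is the share of expected single-copy markers found, and contamination is extra copies relative to the marker count. CheckM itself uses lineage-specific, collocated marker sets, and CheckM2 uses machine-learning models, so real estimates will differ. The marker counts in the example are hypothetical.

```python
def estimate_quality(marker_counts):
    """Simplified estimate from copies found per expected single-copy marker.

    Returns (completeness %, contamination %). Not CheckM's actual algorithm.
    """
    if not marker_counts:
        raise ValueError("no markers supplied")
    n = len(marker_counts)
    present = sum(1 for c in marker_counts.values() if c >= 1)
    extra = sum(c - 1 for c in marker_counts.values() if c > 1)
    return 100 * present / n, 100 * extra / n


def mimag_tier(completeness, contamination, has_rrnas=False, trnas=0):
    """MIMAG draft tiers; high quality also needs 23S/16S/5S rRNA and >= 18 tRNAs."""
    if completeness > 90 and contamination < 5 and has_rrnas and trnas >= 18:
        return "high-quality draft"
    if completeness >= 50 and contamination < 10:
        return "medium-quality draft"
    if contamination < 10:
        return "low-quality draft"
    return "outside MIMAG tiers"


if __name__ == "__main__":
    # Hypothetical bin: 95 markers once, 2 markers twice, 3 markers missing.
    counts = {f"m{i}": 1 for i in range(95)}
    counts.update({"m95": 2, "m96": 2, "m97": 0, "m98": 0, "m99": 0})
    comp, cont = estimate_quality(counts)
    print(comp, cont, mimag_tier(comp, cont), mimag_tier(comp, cont, True, 20))
```

The example bin passes the completeness and contamination cutoffs for high quality. Without rRNA genes and tRNAs, however, it is only a medium-quality draft under MIMAG, which is why benchmark tables often state which definition of "high quality" they use.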

Quantitative Performance of Advanced Binners

The table below summarizes the performance of modern binning tools as reported in benchmarking studies, demonstrating their role in accessing microbial "dark matter."

| Binning Tool | Key Innovation | Reported Performance Gain | Strength in Low-Abundance/Novel Taxa |
|---|---|---|---|
| LorBin [7] | Two-stage multiscale adaptive clustering (DBSCAN & BIRCH) with evaluation decision models. | Recovers 15–189% more high-quality MAGs than state-of-the-art binners. | Identifies 2.4–17 times more novel taxa. Excels in imbalanced, species-rich samples. |
| BASALT [16] | Binning refinement using multiple binners/thresholds, neural networks, and gap filling. | Produces up to ~30% more MAGs than metaWRAP from environmental data. | Increases recovery of non-redundant open-reading frames by 47.6%, revealing more functional potential. |
| SemiBin2 [7] | Self-supervised contrastive learning, extended to long-read data with DBSCAN. | A strong competitor, but outperformed by LorBin in high-quality MAG recovery. | Effectively handles long-read data for improved contiguity. |

Experimental Protocols for Key Studies

Protocol 1: Benchmarking Binning Performance with CAMI Datasets

This protocol outlines how the performance of advanced binners like LorBin and BASALT is typically evaluated, allowing for reproducible comparisons. [7] [16]

- Input Data Acquisition: Use the Critical Assessment of Metagenome Interpretation (CAMI) datasets. These are synthetic metagenomes created from hundreds of genomes (often unpublished) to simulate different habitats with known gold standard genomes. [17]

- Assembly: Generate metagenomic assemblies from the CAMI sequencing reads. For short-read data, use assemblers like metaSPAdes or MEGAHIT. For hybrid (short+long read) data, tools like OPERA-MS can be used. [16]

- Binning Execution: Run the binning tools (e.g., LorBin, BASALT, VAMB, metaWRAP) on the assembled contigs using their recommended parameters and workflows.

- Quality Assessment and Comparison:

- Evaluate each recovered bin against the CAMI gold standard genomes with CAMI's AMBER, which reports per-genome completeness and purity. Reference-free completeness and contamination estimates from CheckM can be reported alongside. [17] [16]

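- Classify bins as high-quality based on community standards (e.g., MIMAG: completeness >90% and contamination <5%; the full MIMAG high-quality tier additionally requires 23S, 16S, and 5S rRNA genes and at least 18 tRNAs). [16]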

- Compare the total number of high-quality MAGs, clustering accuracy (e.g., Adjusted Rand Index), and F1 score across different binners. [7]
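AMBER computes these clustering metrics for you. The sketch below shows the standard Adjusted Rand Index (Hubert and Arabie) that underlies the comparison, computed from a contingency table of gold-standard genome labels versus bin labels. It assumes that every contig carries a label in both assignments. How unbinned contigs are treated is a benchmark design choice that this sketch does not address.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(truth, pred):
    """Adjusted Rand Index between two labellings of the same contigs."""
    if len(truth) != len(pred):
        raise ValueError("labellings must cover the same contigs")
    n = len(truth)
    if n < 2:
        raise ValueError("need at least two contigs")
    sum_ij = sum(comb(c, 2) for c in Counter(zip(truth, pred)).values())
    sum_a = sum(comb(c, 2) for c in Counter(truth).values())
    sum_b = sum(comb(c, 2) for c in Counter(pred).values())
    expected = sum_a * sum_b / comb(n, 2)  # pairs expected to agree by chance
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:
        return 1.0  # degenerate case, e.g. both labellings put everything in one cluster
    return (sum_ij - expected) / (max_index - expected)


if __name__ == "__main__":
    gold = ["g1", "g1", "g2", "g2"]  # hypothetical gold-standard genome per contig
    print(adjusted_rand_index(gold, ["b7", "b7", "b3", "b3"]))  # same partition, new names
    print(adjusted_rand_index(gold, ["b1", "b1", "b2", "b3"]))  # one genome split in two
```

ARI ignores label names, so a binner that reproduces the gold-standard partition scores 1.0. Splitting one genome into two bins lowers the score, here to 4/7.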

Protocol 2: Recovering Microbial Dark Matter from Extreme Environments

This protocol is inspired by the study that recovered 116 Microbial Dark Matter (MDM) MAGs from hypersaline microbial mats. [18]

- Sample Collection and Sequencing: Conduct triplicate sampling from the target environment (e.g., hypersaline mats). Perform shotgun metagenomic sequencing to a depth of ~70 million reads per sample. [18]

- Metagenome Assembly and Binning: Assemble the sequencing reads into contigs. Use a powerful binning toolkit like BASALT or LorBin to reconstruct MAGs from the assembly.

- Identification of Microbial Dark Matter: Taxonomically classify all recovered MAGs. MAGs that cannot be classified to known archaeal or bacterial lineages at a certain taxonomic level (e.g., phylum or class) are considered MDM. [18]

- Functional Annotation of MDM:

- Annotate the genes in the MDM MAGs using databases like KEGG and COG.

- Manually inspect key metabolic pathways to infer ecological roles. Look for genes involved in:

- Carbon fixation (e.g., RuBisCO genes)

- Sulfur metabolism (e.g., SOX gene complex)

- Nitrogen cycling (e.g., nitrogenase nif genes for fixation, nitrite reductase nir genes for denitrification) [18]
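The dark-matter flag and the pathway screen in this protocol can be sketched as follows. The sketch assumes GTDB-style taxonomy strings (`d__...;p__...;...`, with empty names for unassigned ranks), and it assumes that gene annotations have been reduced to gene symbols. The marker-gene sets are illustrative choices for the four processes listed above, not a curated pathway definition, and the study's own criteria may differ.

```python
RANKS = ["d", "p", "c", "o", "f", "g", "s"]

# Illustrative marker symbols per process; assumed to appear in the annotation output.
PATHWAY_MARKERS = {
    "carbon fixation (RuBisCO)": {"rbcL"},
    "sulfur oxidation (Sox)": {"soxA", "soxB", "soxX", "soxY", "soxZ"},
    "nitrogen fixation (nitrogenase)": {"nifH", "nifD", "nifK"},
    "denitrification (nitrite reductase)": {"nirK", "nirS"},
}


def deepest_rank(taxonomy):
    """Deepest standard rank with a non-empty name in a 'd__X;p__Y;...' string, or None."""
    deepest = None
    for field in taxonomy.split(";"):
        rank, _, name = field.strip().partition("__")
        if name and rank in RANKS:
            deepest = rank
    return deepest


def is_dark_matter(taxonomy, required_rank="p"):
    """True if the MAG is not classified down to `required_rank` (e.g. phylum)."""
    deepest = deepest_rank(taxonomy)
    return deepest is None or RANKS.index(deepest) < RANKS.index(required_rank)


def pathways_present(genes):
    """Processes for which at least one illustrative marker gene was annotated."""
    found = set(genes)
    return sorted(p for p, markers in PATHWAY_MARKERS.items() if markers & found)


if __name__ == "__main__":
    print(is_dark_matter("d__Bacteria;p__;c__;o__;f__;g__;s__"))
    print(is_dark_matter("d__Bacteria;p__Bacillota;c__Bacilli;o__;f__;g__;s__", "o"))
    print(pathways_present(["rbcL", "nirS", "gyrB"]))
```

A marker hit is only a lead. The protocol's manual inspection of complete pathways is still needed before inferring an ecological role.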

The Scientist's Toolkit: Essential Research Reagents & Materials

| Item | Function in Metagenomic Binning |
|---|---|
| Long-Read Sequencer (PacBio/Oxford Nanopore) | Generates long sequencing reads, enabling more continuous assemblies and better recovery of low-abundance genomes. [7] |
| MetaBAT 2 [5] [11] | A widely used, accurate, and flexible binning algorithm that employs tetranucleotide frequency and coverage depth. Often used in combination with various assemblers. |
| CheckM [5] | A software tool that assesses the quality of MAGs by estimating completeness and contamination using a set of single-copy marker genes conserved in bacterial and archaeal lineages. |
| CAMI Benchmarking Datasets [17] | Synthetic metagenomic datasets with known gold standard genomes. Essential for objectively evaluating, comparing, and benchmarking the performance of binning methods. |
| Variational Autoencoder (VAE) | A type of deep learning model used in binners like LorBin to efficiently extract compressed, informative features (embeddings) from contig k-mer and abundance data. [7] |

Workflow and Relationship Visualizations

Binning Optimization for Microbial Dark Matter

From Binning to Functional Insights

How Imbalanced Species Distributions in Natural Microbiomes Complicate Binning

Frequently Asked Questions (FAQs)

Q1: What exactly is "imbalanced species distribution" in a microbiome, and why is it a problem for binning?

Imbalanced species distribution refers to the natural composition of microbial communities where a few dominant species coexist with a large number of rare, low-abundance species [19]. This is a fundamental characteristic of natural microbiomes, where most species are present in low quantities. For binning, this creates a major challenge because the sequencing coverage (the number of DNA reads representing a genome) is directly tied to a species' abundance. Algorithms struggle to distinguish the subtle signals from rare species from background noise, often leading to their genomes being fragmented, incorrectly merged with other rare species, or missed entirely [19] [20].

Q2: My binner works well on mock communities but performs poorly on my environmental sample. Could imbalanced distribution be the cause?

Yes, this is a common issue. Mock communities are often artificially constructed with balanced species abundances, which simplifies the binning process. Natural environmental samples, however, are inherently imbalanced [19]. State-of-the-art binners like LorBin are specifically designed to address this by using multiscale clustering algorithms that are more sensitive to the subtle patterns of low-abundance organisms [19]. If your tool is optimized for balanced data, its performance will likely decline on a natural, imbalanced sample.

Q3: What are the specific output signs that imbalanced distribution is affecting my binning results?

You can look for several key indicators:

- A high number of fragmented, incomplete genomes (low completeness scores).

- A large proportion of contigs that remain un-binned.

- Bins with abnormally low coverage, suggesting they represent rare species.

- Bins with widely varying coverage levels, which can indicate that contigs from multiple rare species have been incorrectly grouped together because the algorithm could not distinguish them [5].
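The last indicator can be screened automatically. The sketch below computes the coefficient of variation (standard deviation divided by mean) of per-contig coverage within each bin, and it flags bins above a cutoff. The bin names, coverages, and the 0.5 cutoff are hypothetical. Coverage also varies with GC bias, repeats, and mapping artefacts, so a flag is a prompt to inspect the bin, not proof of chimerism.

```python
import statistics


def coverage_cv(covs):
    """Coefficient of variation of per-contig mean coverage within one bin."""
    mean = statistics.fmean(covs)
    return statistics.pstdev(covs) / mean if mean else float("inf")


def flag_mixed_bins(bins, max_cv=0.5):
    """Names of bins (with at least 2 contigs) whose coverage CV exceeds max_cv."""
    return sorted(name for name, covs in bins.items()
                  if len(covs) >= 2 and coverage_cv(covs) > max_cv)


if __name__ == "__main__":
    bins = {
        "bin_1": [3.1, 2.9, 3.0, 3.2],  # consistent low coverage: one rare genome
        "bin_2": [1.0, 1.1, 6.0, 5.8],  # two coverage levels: possibly two rare genomes
    }
    for name, covs in bins.items():
        print(name, round(coverage_cv(covs), 3))
    print(flag_mixed_bins(bins))
```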

Q4: Beyond choosing a better binner, what experimental strategies can help mitigate this issue?

Increasing sequencing depth is a direct way to capture more reads from low-abundance species, thereby improving their signal-to-noise ratio [21]. Furthermore, leveraging long-read sequencing technologies (e.g., PacBio, Oxford Nanopore) can produce longer contigs. These longer sequences provide more features (e.g., k-mers, genomic context) for the binning algorithm to use, making it easier to correctly group sequences from the same genome, even when coverage is low [19] [22].

Troubleshooting Guide: Identifying and Solving Binning Problems from Imbalanced Data

Problem: Poor Recovery of High-Quality Genomes from Low-Abundance Species

Issue: Your analysis yields very few or no high-quality Metagenome-Assembled Genomes (MAGs) from rare community members, limiting the biological insights from your study.

Diagnosis & Solutions:

| Step | Diagnosis Question | Tool/Metric to Check | Recommended Solution |
|---|---|---|---|
| 1. Check Data Input | Is my sequencing depth sufficient for rare species? | Raw read count; coverage distribution across contigs. | Increase sequencing depth to improve signal from low-abundance organisms [21]. |
| 2. Check Binner Choice | Is my binning tool suited for imbalanced natural samples? | Method description in tool literature. | Switch to a binner designed for imbalanced data, such as LorBin or SemiBin2 [19]. |
| 3. Check Output Quality | Are my bins for rare species fragmented or contaminated? | Completeness & contamination estimates (e.g., with CheckM). | Apply a two-stage or hybrid binning approach that reclusters uncertain bins to improve recovery [19] [15]. |

Problem: Bin Proliferation and Chimerism

Issue: You obtain an overabundance of bins, many of which are chimeric (containing contigs from multiple different species) or are incomplete fragments of the same genome.

Diagnosis & Solutions:

| Step | Diagnosis Question | Tool/Metric to Check | Recommended Solution |
|---|---|---|---|
| 1. Check Clustering | Is the tool splitting one genome into multiple bins? | CheckM; coverage and composition consistency within bins. | Use a binner whose clustering adapts to the density variations caused by abundance imbalance (e.g., LorBin's multiscale adaptive DBSCAN) [19]. |
| 2. Check Strain Disentanglement | Are my bins contaminated with closely related strains? | Single-nucleotide variant (SNV) heterogeneity within bins. | Employ tools that use features such as single-copy genes for a more reliable assessment of bin quality and purity [19]. |

Experimental Protocols for Robust Binning

Protocol: Benchmarking Binners on Imbalanced Datasets

Purpose: To select the most effective binning tool for a specific metagenomic dataset with a known or suspected imbalanced species distribution.

- Data Preparation: Use a benchmark dataset like CAMI II, which includes samples from various habitats with known ground truth genomes [19]. Alternatively, create a synthetic dataset by in silico sequencing a mix of genomes with pre-defined, highly uneven abundance ratios.

- Tool Selection: Select a suite of binners that represent different algorithmic approaches (e.g., LorBin, VAMB, MetaBAT2, SemiBin2) [19] [5] [21].

- Execution: Run each binner on the same dataset with its recommended default settings so that the comparison is fair.

- Quality Assessment: Assess the output MAGs using a tool like CheckM to calculate completeness and contamination for each recovered genome [5].

- Performance Metric Calculation: For each binner, calculate:

  - The number of high-quality (HQ) MAGs (e.g., >90% completeness, <5% contamination) recovered.

  - The number of novel taxa identified (by comparing to a reference database).

  - Precision and recall against the known ground-truth genomes.
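For the precision and recall step, a minimal sketch follows. It assumes a ground-truth table mapping each contig to its source genome (as CAMI II provides) and weights by base pairs, in the spirit of evaluation tools such as AMBER; the function and argument names are hypothetical, and the definitions are simplified rather than AMBER's exact ones.

```python
from collections import defaultdict


def bin_purity_and_recall(bins, truth, lengths):
    """Hypothetical helper: bp-weighted precision per bin and recall per genome.

    bins:    dict contig_id -> bin_id (unbinned contigs are simply absent)
    truth:   dict contig_id -> genome_id (ground truth, e.g. CAMI gold standard)
    lengths: dict contig_id -> contig length in bp
    """
    # Base pairs of each true genome found inside each bin
    overlap = defaultdict(lambda: defaultdict(int))
    for contig, b in bins.items():
        overlap[b][truth[contig]] += lengths[contig]

    genome_total = defaultdict(int)
    for contig, g in truth.items():
        genome_total[g] += lengths[contig]

    # Precision: share of each bin's bp that comes from its dominant genome
    precision = {b: max(gs.values()) / sum(gs.values())
                 for b, gs in overlap.items()}

    # Recall: largest share of each genome captured by any single bin
    recall = {}
    for g, total in genome_total.items():
        best = max((gs.get(g, 0) for gs in overlap.values()), default=0)
        recall[g] = best / total
    return precision, recall
```

Averaging these per-bin and per-genome values gives one precision and one recall figure per binner, which can then be compared alongside the HQ MAG counts.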

The following workflow summarizes the benchmarking protocol:

Protocol: Implementing a Two-Stage Binning Strategy with LorBin

Purpose: To maximize the recovery of high-quality MAGs from both dominant and rare species in a complex sample. This protocol leverages LorBin's published architecture [19].

- Feature Extraction: Input assembled contigs. LorBin uses a self-supervised variational autoencoder (VAE) to extract embedded features from k-mer frequencies and contig abundance profiles.

- First-Stage Clustering: The embedded features are subjected to multiscale adaptive DBSCAN clustering. DBSCAN is effective at finding dense clusters of points (likely dominant species) while ignoring noise (which can include rare species).

- Iterative Assessment & Decision: The preliminary bins from DBSCAN are rigorously evaluated using a model based on single-copy genes. High-quality bins are sent directly to the final bin pool.

- Second-Stage Clustering: Contigs in low-quality bins and unclustered "noise" are subjected to multiscale adaptive BIRCH clustering. BIRCH is efficient for large datasets and can identify the weaker, more diffuse clusters formed by rare species.

- Final Bin Pool: Bins from both clustering stages are pooled together, resulting in a comprehensive set of MAGs that more fully represents the imbalanced community.
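A minimal, dependency-free sketch of the two-stage idea follows. It is not LorBin's code: LorBin clusters VAE embeddings with multiscale adaptive DBSCAN and BIRCH and scores bins with a single-copy-gene model, whereas here the feature vectors are assumed to be given, the second stage is a relaxed DBSCAN pass standing in for BIRCH, and `is_good_bin` and `two_stage_binning` are hypothetical names.

```python
import math


def dbscan(points, eps, min_pts):
    """Plain DBSCAN; returns one label per point (-1 = noise)."""
    n = len(points)
    labels = [None] * n
    cluster = -1

    def neighbours(i):
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1                  # noise for now; may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster         # border point reclaimed from noise
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nb = neighbours(j)
            if len(nb) >= min_pts:          # core point: keep expanding
                queue.extend(nb)
    return labels


def two_stage_binning(points, eps1, min_pts1, eps2, min_pts2, is_good_bin):
    """Hypothetical two-stage scheme: strict pass, then relaxed pass on leftovers.

    is_good_bin(member_indices) -> bool stands in for a single-copy-gene
    quality model. Returns {point_index: bin_id}; absent indices are unbinned.
    """
    first = dbscan(points, eps1, min_pts1)
    clusters, leftovers = {}, []
    for i, lab in enumerate(first):
        if lab == -1:
            leftovers.append(i)
        else:
            clusters.setdefault(lab, []).append(i)

    final, next_id = {}, 0
    for members in clusters.values():
        if is_good_bin(members):            # accepted straight into the bin pool
            for i in members:
                final[i] = next_id
            next_id += 1
        else:                               # low-quality bin: recluster its members
            leftovers.extend(members)

    # Second stage: relaxed DBSCAN (a stand-in for LorBin's BIRCH step)
    second = dbscan([points[i] for i in leftovers], eps2, min_pts2)
    for i, lab in zip(leftovers, second):
        if lab != -1:
            final[i] = next_id + lab
    return final


if __name__ == "__main__":
    # Toy 2-D "embeddings": a dense cluster, a sparse cluster, one outlier
    pts = [(0, 0), (0, 0.1), (0.1, 0), (0.1, 0.1),
           (5, 5), (5, 5.8), (5.8, 5), (20, 20)]
    print(two_stage_binning(pts, 0.3, 3, 1.0, 2, lambda m: len(m) >= 3))
```

In the toy run, the strict first pass captures only the dense group; the sparse group, which a single strict pass would discard as noise, is recovered in the relaxed second pass, while the isolated outlier stays unbinned.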

The logical workflow of this two-stage strategy is outlined below:

The Scientist's Toolkit: Key Research Reagent Solutions

Table: Essential computational tools and their functions for handling imbalanced binning.

| Tool/Framework | Type | Primary Function in Addressing Imbalance | Key Advantage |
|---|---|---|---|
| LorBin | Binning Tool | Two-stage multiscale clustering (DBSCAN & BIRCH) with a reclustering decision model [19]. | Specifically designed for imbalanced natural microbiomes; recovers more novel and high-quality MAGs [19]. |
| SemiBin2 | Binning Tool | Uses self-supervised contrastive learning and DBSCAN clustering [19]. | Effectively handles long-read data and improves binning in complex environments [19]. |
| MetaBAT 2 | Binning Tool | A hybrid binner that uses tetranucleotide frequency and coverage depth [5]. | A widely used, benchmarked tool known for accuracy and efficiency [5]. |
| CheckM | Quality Assessment | Assesses the quality (completeness/contamination) of genome bins [5]. | Uses lineage-specific marker genes to provide a reliable estimate of bin quality, crucial for validating bins from rare species [5]. |
| CAMI II Dataset | Benchmark Data | Provides simulated metagenomes from multiple habitats with known genome answers [19]. | Gold-standard for objectively testing and comparing binner performance on data with complex, realistic distributions [19]. |

Advanced Binning Algorithms and Strategic Workflows for Low-Abundance Recovery

Frequently Asked Questions (FAQs) on Metagenomic Binning

FAQ 1: What is the fundamental difference between composition-based and abundance-based binning, and why does it matter for low-abundance species?

Composition-based and abundance-based binning methods leverage different genomic properties to cluster sequences, each with distinct strengths and weaknesses, especially relevant for studying low-abundance species [20].

- Composition-Based Binning: This approach is based on the observation that different genomes have distinct sequence composition patterns, such as tetranucleotide (4-mer) frequency or GC content [5] [20]. The method assumes that sequences from the same genome will have similar composition signatures. However, it can struggle to distinguish between closely related genomes that share similar composition patterns and can perform poorly on short sequences from low-abundance species, where the genomic signature may not be statistically robust [20] [23].

- Abundance-Based Binning: This method groups sequences based on their coverage depth (the average number of reads covering each base of a contig) [5] [20]. It operates on the principle that all sequences from the same organism should be present in similar proportions in a sample. This makes it powerful for separating closely related species with different abundance levels. However, its main limitation is that it cannot distinguish different species that coincidentally have the same abundance in a sample [15].

For low-abundance species research, abundance-based methods can fail because the coverage information for these species is often sparse and noisy. Therefore, hybrid methods, which combine both composition and abundance information, are generally recommended as they can compensate for the weaknesses of each approach when used alone [23].
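To make the two signals concrete, the sketch below turns a contig into a hybrid feature vector: a normalized canonical tetranucleotide frequency (composition) profile concatenated with log-scaled per-sample mean coverage (abundance). Tools such as MetaBAT 2 and VAMB combine these two kinds of features, but each normalizes and weights them differently; the function names and the `log1p` scaling here are illustrative assumptions.

```python
import math
from itertools import product

# Illustrative helpers (hypothetical, not any binner's API)
BASES = "ACGT"
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def canonical(kmer: str) -> str:
    """Lexicographically smaller of a k-mer and its reverse complement."""
    return min(kmer, kmer.translate(COMPLEMENT)[::-1])


# The 256 tetranucleotides collapse to 136 canonical ones
CANONICAL = sorted({canonical("".join(p)) for p in product(BASES, repeat=4)})


def tnf(seq: str) -> list:
    """Normalized canonical tetranucleotide frequency vector of a contig."""
    seq = seq.upper()
    counts = dict.fromkeys(CANONICAL, 0)
    for i in range(len(seq) - 3):
        kmer = seq[i:i + 4]
        if set(kmer) <= set(BASES):         # skip windows containing N etc.
            counts[canonical(kmer)] += 1
    total = sum(counts.values()) or 1
    return [counts[k] / total for k in CANONICAL]


def hybrid_features(seq: str, mean_depths: list) -> list:
    """Composition (TNF) followed by log-scaled per-sample mean coverage."""
    return tnf(seq) + [math.log1p(d) for d in mean_depths]
```

On a short contig from a rare genome, the TNF vector is estimated from few 4-mers and is therefore noisy, while the coverage entries are small and noisy too; this is the weakness that hybrid methods try to offset by using both parts together.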

FAQ 2: My binning tool produced a bin with high completeness but also high contamination. Should I refine this bin, and what are the potential trade-offs?

This is a common dilemma in bin refinement. While the goal is to obtain a genome bin with high completeness and low contamination, the refinement process involves trade-offs between genetic "correctness" and "gene richness" [24].

- When to Refine: It is generally good practice to refine bins with contamination greater than 5-10% [24]. Contamination often manifests in bin refinement interfaces (e.g., anvi'o) as contigs forming "divergent branches with unequal coverage," which are likely mis-binned sequences.

- The Trade-Off: Aggressively removing all contigs with divergent coverage can lead to the loss of accessory genes [24]. These genes might be rare, present on plasmids (which can have higher coverage), or only exist in a sub-population of the species (explaining their lower coverage). While a bin containing all these genes might not perfectly represent a single organism, a bin stripped of all auxiliary genes might be an oversimplification that misses ecologically or functionally important genetic elements.

- Recommendation: A balanced approach is needed. Prioritize removing contigs that clearly have taxonomic assignments different from the core bin. For contigs of uncertain origin, biological context (e.g., BLAST results for known phylum-specific genes) should guide the decision to keep or remove [24].
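One way to shortlist the "divergent coverage" contigs mentioned above for manual review is sketched below. The two-fold threshold and the function name are hypothetical; flagged contigs are candidates for the taxonomic and BLAST checks recommended above, not automatic removals, since plasmids and sub-population genes can legitimately deviate from the bin's typical coverage.

```python
from statistics import median


def flag_divergent_contigs(bin_coverage: dict, fold: float = 2.0) -> dict:
    """Hypothetical helper: contigs whose coverage departs from the bin median.

    bin_coverage maps contig_id -> mean coverage for one bin. Returns
    {contig_id: coverage / median} for contigs more than `fold`-fold above or
    below the median. These are review candidates, not automatic removals.
    """
    med = median(bin_coverage.values())
    flagged = {}
    for contig, cov in bin_coverage.items():
        ratio = cov / med if med > 0 else float("inf")
        if ratio > fold or ratio < 1 / fold:
            flagged[contig] = round(ratio, 2)
    return flagged
```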

FAQ 3: Why do traditional binning tools like MetaBAT2 often perform poorly on long-read metagenomic assemblies, and what are the new solutions?

Long-read sequencing technologies (e.g., PacBio, Oxford Nanopore) produce data with greater contiguity, which helps assemble low-abundance genomes with fewer errors [7]. However, traditional binners like MetaBAT2 were designed around the properties of short-read assemblies; the greater contiguity and different error profiles of long-read assemblies make their feature extraction and clustering strategies suboptimal [7].

Newer tools are specifically designed to handle these challenges:

- LorBin: An unsupervised binner that uses a two-stage multiscale adaptive clustering (DBSCAN and BIRCH) with an evaluation decision model. It is particularly effective for natural microbiomes with imbalanced species distributions and for identifying novel taxa, often generating 15–189% more high-quality MAGs than state-of-the-art competitors on long-read data [7].

- SemiBin2: Extends the semi-supervised learning approach of SemiBin to long-read data by incorporating a DBSCAN clustering algorithm, improving its performance on long-read assemblies [25] [26].

FAQ 4: I am working with viral metagenomes (viromes), and standard binning tools are producing unconvincing results, often binning only one contig. How should I proceed?

Binning viral contigs is inherently challenging due to their high mutation rates and the lack of universal marker genes, which makes composition and coverage features less stable [27]. Standard binning tools frequently fail, resulting in bins containing only a single contig [27].

A more effective strategy for virome analysis often bypasses traditional binning altogether:

- Focus on Contig-Level Analysis: Instead of binning, set a length cutoff (e.g., 5 kbp) and assign taxonomy to individual contigs using tools like BLASTn.

- Identify Viral Sequences: Use dedicated viral identification tools such as CheckV and VirSorter2 to confidently identify and quality-check viral sequences in your contigs.

- Define Viral Operational Taxonomic Units (vOTUs): Manually curate or use tools like vConTACT2 to define vOTUs based on the identified contigs. Avoid discarding short contigs from the dataset before analysis, however, as they may be informative fragments whose abundance should correlate with that of their parent genome [27].
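For manual curation, a common approach is greedy centroid clustering of contigs by average nucleotide identity (ANI) and aligned fraction (AF). The sketch below assumes the widely used MIUViG species-level cutoffs of 95% ANI over 85% AF (measured over the shorter contig), and pairwise ANI/AF values computed beforehand, e.g. from BLASTn alignments. The function name is hypothetical, and this is a simplified scheme: vConTACT2 itself works differently, grouping genomes by shared protein content.

```python
def greedy_votus(lengths, pairs, min_ani=95.0, min_af=85.0):
    """Hypothetical helper: greedy centroid clustering of viral contigs.

    lengths: dict contig -> length (bp); longest contigs become centroids first.
    pairs:   dict (a, b) -> (ani_percent, aligned_fraction_percent), where the
             aligned fraction is measured over the shorter contig (assumed).
    Returns {centroid: [members, centroid first]}.
    """
    def linked(a, b):
        hit = pairs.get((a, b)) or pairs.get((b, a))
        return hit is not None and hit[0] >= min_ani and hit[1] >= min_af

    votus, assigned = {}, set()
    for centroid in sorted(lengths, key=lengths.get, reverse=True):
        if centroid in assigned:
            continue
        members = [centroid] + [c for c in lengths
                                if c not in assigned and c != centroid
                                and linked(centroid, c)]
        assigned.update(members)
        votus[centroid] = members
    return votus
```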

The Scientist's Toolkit: Binning Algorithms and Evaluation Metrics

Benchmarking of Modern Binning Tools

The performance of binning tools can vary significantly across different data types (short-read, long-read, hybrid) and binning modes (single-sample, multi-sample, co-assembly). The following table summarizes top-performing tools based on a recent comprehensive benchmark study [25].

Table 1: High-Performance Binners for Different Data-Binning Combinations (2025 Benchmark)

| Data-Binning Combination | Description | Top Three High-Performance Binners |
|---|---|---|
| Short_read_multi | Short-read data, multi-sample binning | 1. COMEBin, 2. Binny, 3. MetaBinner |
| Short_read_single | Short-read data, single-sample binning | 1. COMEBin, 2. MetaDecoder, 3. SemiBin2 |
| Long_read_multi | Long-read data, multi-sample binning | 1. MetaBinner, 2. COMEBin, 3. SemiBin2 |
| Long_read_single | Long-read data, single-sample binning | 1. MetaBinner, 2. SemiBin2, 3. MetaDecoder |
| Hybrid_multi | Hybrid (short+long) data, multi-sample binning | 1. COMEBin, 2. Binny, 3. MetaBinner |
| Hybrid_single | Hybrid (short+long) data, single-sample binning | 1. COMEBin, 2. MetaDecoder, 3. SemiBin2 |
| Short_co | Short-read, co-assembly binning | 1. Binny, 2. SemiBin2, 3. MetaBinner |

Table 2: Efficient Binners for General Use

| Tool Name | Description | Use Case |
|---|---|---|
| MetaBAT 2 | Uses tetranucleotide frequency and coverage to calculate pairwise contig similarity, clustered via a label propagation algorithm [5] [25]. | A robust, efficient, and widely-used standard for general binning tasks [25]. |
| VAMB | Utilizes a variational autoencoder (VAE) to integrate k-mer and abundance features into a latent representation for clustering [25] [26]. | An efficient deep-learning-based binner that scales well to large datasets [25]. |
| MetaDecoder | Employs a modified Dirichlet process Gaussian mixture model for initial clustering, followed by a semi-supervised probabilistic model [25] [26]. | An efficient and recently developed tool that performs well across various scenarios [25]. |

Key Metrics for Evaluating Binning Quality

After generating Metagenome-Assembled Genomes (MAGs), it is crucial to assess their quality using standardized metrics.

Table 3: Essential Metrics for MAG Quality Assessment

| Metric | Description | Ideal Value / Standard |
|---|---|---|
| Completeness | An estimate of the proportion of a single-copy core gene set present in the MAG, indicating how much of the genome has been recovered [23]. | >90% (High-quality), >50% (Medium-quality) |
| Contamination | An estimate of the proportion of single-copy core genes that are present in more than one copy in the MAG, indicating sequence from different organisms has been incorrectly included [23]. | <5% (High-quality), <10% (Medium-quality) |
| Purity | The homogeneity of a bin, often used interchangeably with (1 - contamination) [23]. | >0.95 |
| F1-Score (Completeness/Purity) | The harmonic mean of completeness and purity, providing a single score to evaluate the trade-off between them [23]. | Closer to 1.0 |
| Adjusted Rand Index (ARI) | A measure of the similarity between the binning result and the ground truth, correcting for chance [7]. | Closer to 1.0 |

Tools like CheckM or CheckM2 are commonly used to calculate completeness and contamination based on the presence of single-copy marker genes [5] [25].
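The F1-score and ARI in Table 3 can be computed directly. Completeness and purity come from CheckM-style estimates or ground truth, while ARI needs a ground-truth labeling (e.g., from CAMI). The sketch below implements both from scratch; the function names are hypothetical, and this ARI counts contigs rather than base pairs, whereas benchmarking tools may weight by sequence length.

```python
from collections import Counter
from math import comb


def f1_score(completeness: float, purity: float) -> float:
    """Harmonic mean of completeness and purity, both given as fractions."""
    if completeness + purity == 0:
        return 0.0
    return 2 * completeness * purity / (completeness + purity)


def adjusted_rand_index(pred, truth):
    """Adjusted Rand Index between predicted bins and true genomes.

    pred, truth: equal-length sequences of labels, one entry per contig.
    """
    n = len(pred)
    if n < 2:
        return 1.0
    # Pairs of contigs placed together in both labelings
    sum_ij = sum(comb(c, 2) for c in Counter(zip(pred, truth)).values())
    sum_a = sum(comb(c, 2) for c in Counter(pred).values())
    sum_b = sum(comb(c, 2) for c in Counter(truth).values())
    expected = sum_a * sum_b / comb(n, 2)      # agreement expected by chance
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:                  # degenerate, e.g. one cluster each
        return 1.0
    return (sum_ij - expected) / (max_index - expected)
```

Because ARI only compares which contigs are grouped together, renaming bins does not change it, so bin IDs never need to match genome IDs.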

Experimental Protocol: A Sample Binning and Evaluation Workflow

This protocol outlines a standard workflow for co-assembly binning and quality assessment, as implemented in tools like MetaBAT 2 and evaluated in benchmark studies [5] [23] [25].

Objective: To reconstruct high-quality MAGs from raw metagenomic sequencing reads.

Step 1: Data Preparation and Quality Control

- Obtain metagenomic sequencing reads in FASTQ format.

- Perform quality control and adapter trimming using tools like BBTools' bbduk.

- (Optional) Remove host-derived reads if working with a host-associated microbiome.

Step 2: Metagenomic Assembly

- Assemble the quality-filtered reads into longer sequences (contigs) using a metagenomic assembler. Common choices include metaSPAdes or MEGAHIT for short reads and metaFlye for long reads.

- Filter out contigs shorter than a specified length (1,500 bp or 3,000 bp are common cutoffs) to improve binning accuracy [23] [27].

Step 3: Generate Coverage Profiles

- Map the sequencing reads from each sample back to the assembled contigs using a mapping tool like Bowtie2 or BWA to generate BAM files [5].

- Calculate the coverage depth (abundance) for each contig in each sample from the BAM files. This information is required by most binning tools.
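The coverage calculation itself is simple, as the sketch below shows for the tab-separated contig, position, depth output that `samtools depth` produces for a single BAM file. The function name is hypothetical; MetaBAT 2 users would normally run its bundled `jgi_summarize_bam_contig_depths` utility instead, which applies its own filtering and also reports coverage variance.

```python
import csv
from collections import defaultdict


def mean_depth(depth_lines, contig_lengths):
    """Hypothetical helper: mean per-base coverage per contig.

    depth_lines: iterable of 'contig<TAB>position<TAB>depth' lines, i.e. the
        output of `samtools depth` for a single BAM file.
    contig_lengths: dict contig -> length; used as the denominator because
        samtools depth omits zero-coverage positions unless run with -a.
    """
    summed = defaultdict(int)
    for row in csv.reader(depth_lines, delimiter="\t"):
        if row:                              # ignore blank lines
            summed[row[0]] += int(row[2])
    return {contig: summed[contig] / length
            for contig, length in contig_lengths.items()}
```

An open file handle can be passed directly as `depth_lines`, since `csv.reader` accepts any iterable of lines.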

Step 4: Metagenomic Binning

- Run one or more binning tools from the Toolkit tables above using the contigs (FASTA) and coverage profiles as input. For example, to run MetaBAT 2:

- Command example:

```bash
jgi_summarize_bam_contig_depths --outputDepth depth.txt *.sorted.bam
metabat2 -i contigs.fa -a depth.txt -o bin -m 1500
```


Step 5: Binning Refinement (Optional but Recommended)

- Use a bin refinement tool like MetaWRAP, DAS Tool, or MAGScoT to combine the results of multiple binning tools [23] [25]. These tools can generate a superior set of MAGs by leveraging the strengths of different binners.

Step 6: Quality Assessment of MAGs

- Run CheckM or CheckM2 on the final set of bins (MAGs) to assess their completeness and contamination.

- Command example:

```bash
# -x fa: MetaBAT 2 writes bins as .fa, while CheckM expects .fna by default
checkm lineage_wf -x fa bins_dir output_dir
```


- Classify bins as "high-quality," "medium-quality," or "incomplete" based on the standards in Table 3.
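The classification step can be scripted. The sketch below applies only the completeness and contamination thresholds from Table 3 to a CheckM2-style `quality_report.tsv`; the column names Name, Completeness, and Contamination are assumed from CheckM2's report format and should be checked against your version, and the function names are hypothetical. The full MIMAG high-quality standard additionally requires the 23S, 16S, and 5S rRNA genes and at least 18 tRNAs, which this sketch does not check.

```python
import csv
import io


def classify(completeness: float, contamination: float) -> str:
    """Quality tier from the completeness/contamination cut-offs in Table 3."""
    if completeness > 90 and contamination < 5:
        return "high-quality"
    if completeness > 50 and contamination < 10:
        return "medium-quality"
    return "incomplete"


def classify_report(tsv_text: str) -> dict:
    """Hypothetical helper: classify every bin in a CheckM2-style report.

    Assumes tab-separated columns named Name, Completeness, Contamination.
    """
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    return {row["Name"]: classify(float(row["Completeness"]),
                                  float(row["Contamination"]))
            for row in reader}
```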

The following diagram visualizes this workflow:

Workflow for Metagenomic Binning and MAG Assessment

Research Reagent Solutions: Computational Tools for Binning

This table lists key software "reagents" essential for a metagenomic binning pipeline.

Table 4: Essential Computational Tools for a Binning Pipeline

| Tool / Resource | Function | Role in the Experimental Process |
|---|---|---|
| metaSPAdes / metaFlye | Metagenomic Assembler | Reconstructs longer contiguous sequences (contigs) from short-read or long-read sequencing data, respectively [20]. |
| Bowtie2 / BWA | Read Mapping | Aligns sequencing reads back to the assembled contigs to generate coverage (abundance) information [5]. |
| COMEBin / MetaBAT 2 | Core Binning Algorithm | The primary engine that clusters contigs into MAGs based on sequence composition and abundance features [25] [26]. |
| CheckM2 | Quality Assessment | Evaluates the completeness and contamination of the resulting MAGs using a set of conserved marker genes [25]. |
| MetaWRAP / DAS Tool | Binning Refiner | Integrates results from multiple binning tools to produce a superior, consolidated set of MAGs [23] [25]. |
| CAMI Benchmarking Tools | Method Evaluation | Provides standardized datasets and metrics (e.g., AMBER, OPAL) for the fair comparison of binning tools against a known gold standard [17]. |

Frequently Asked Questions (FAQs)

Q1: What is the core principle behind hybrid binning, and why is it particularly powerful for complex samples?

Hybrid binning is a computational strategy that sequentially combines two complementary approaches: binning based on species abundance and binning based on sequence composition and overlap [28]. Its power comes from leveraging the strengths of each method to mitigate the other's weaknesses. Abundance-based binning excels at distinguishing species with different abundance levels but struggles when species have similar abundance [29]. Overlap-based binning can separate species with similar abundance by using compositional features (like k-mer frequencies) but may perform less well when abundance levels vary greatly [28]. By combining them, hybrid binning achieves more accurate and robust clustering, which is crucial for complex samples containing species with a wide range of abundances and evolutionary backgrounds [28] [8].

Q2: My research focuses on low-abundance species. What are the specific advantages of using a hybrid approach like MetaComBin?

For low-abundance species research, hybrid binning offers significant advantages:

- Improved Sensitivity: It enhances the recovery of genomes from low-abundance organisms, which are often missed by methods that rely on a single type of signal [8] [11].

- Reduced Misclassification: The two-step process helps prevent low-abundance sequences from being incorrectly binned with genetically similar but high-abundance species, a common issue in composition-based methods [28].

- Refined Binning: The initial abundance-based step creates coarse clusters. The subsequent compositional/overlap step then refines these clusters, effectively separating distinct low-abundance species that might have been grouped together initially due to their similarly low coverage [28].

Q3: What are the typical inputs and outputs for a hybrid binning tool like MetaComBin?

Inputs:

- Sequencing Reads: The primary input is the raw or quality-filtered sequencing reads from the metagenomic sample (e.g., in FASTQ format) [28].

- No Reference Genomes Required: As an unsupervised method, MetaComBin does not require a database of reference genomes, making it suitable for discovering novel species [28].

Outputs:

- Binned Read Files: The main output is a set of files, each containing the sequencing reads assigned to a specific bin, which ideally corresponds to a single species or strain [28].

- Cluster Information: Data specifying which reads belong to which cluster, enabling downstream analysis like assembly and functional annotation of the binned genomes [28].

Troubleshooting Guides

Issue 1: Poor Binning Accuracy on Samples with Species of Similar Abundance

Problem: The binning results show low purity, meaning bins contain a mix of different species. This is suspected to occur when the sample contains multiple species with very similar abundance levels.

Diagnosis: This is a known limitation of abundance-based binning algorithms. If the abundance ratio between species is close to 1:1, the abundance signal becomes weak, and the first step of the hybrid pipeline may group them together [28] [29].

Solutions:

- Verify the Hybrid Workflow: Ensure that the second step (the overlap/composition-based binner, e.g., MetaProb) is correctly executed on the output of the first step (the abundance-based binner, e.g., AbundanceBin). The entire power of the hybrid approach relies on this sequential refinement [28].

- Check Input Parameters: For the overlap-based tool, confirm that parameters like the k-mer size (`q` in MetaProb, default 31) are appropriate for your read length and data complexity [28].

- Leverage Multi-Sample Binning: If you have multiple related samples from the same environment, use a multi-sample binning mode. A 2025 benchmark showed that multi-sample binning recovers significantly more high-quality genomes than single-sample binning across short-read, long-read, and hybrid data types [8].

Issue 2: High Computational Resource Demand

Problem: The hybrid binning process is taking a very long time or consuming excessive memory, making it infeasible on your hardware.

Diagnosis: Hybrid binning involves running multiple algorithms and can be computationally intensive, especially for large datasets with high sequencing depth or complexity [30] [8].

Solutions:

- Subsample Your Data: For initial testing and parameter optimization, run the pipeline on a randomly subsampled portion of your reads (e.g., 10-25%) to reduce computational load.

- Optimize Thread Usage: Most tools support multi-threading. Specify the number of available CPU cores using the `-t` or `--threads` parameter to speed up computation [30].

- Explore Efficient Binners: If resource constraints are severe, consider using stand-alone binners known for good scalability. A recent benchmark highlights MetaBAT 2, VAMB, and MetaDecoder as efficient options [8].

Issue 3: Difficulty Integrating with Downstream Analysis Pipelines

Problem: The output format of the hybrid binner is not directly compatible with standard tools for metagenome-assembled genome (MAG) refinement, quality assessment, or annotation.

Diagnosis: Different binning tools can have unique output formats. The binned reads need to be processed further to become usable MAGs.

Solutions:

- Assembly of Binned Reads: The binned reads are not assembled genomes. You must assemble them into contigs using a metagenomic assembler like metaSPAdes or MEGAHIT [31] [32].

- Use Standardized Quality Assessment: After assembly, assess the quality of your MAGs using established tools like CheckM2 to evaluate completeness and contamination [30] [8].

- Employ Bin Refinement Tools: To further improve bin quality, use refinement tools like MetaWRAP, DAS Tool, or MAGScoT. These tools can combine bins from multiple methods (including your hybrid output) to produce a superior, consolidated set of MAGs [30] [8].

Performance Data and Experimental Protocols

Quantitative Comparison of Binning Approaches

The table below summarizes key performance metrics from benchmarking studies, highlighting the advantage of multi-sample and advanced binning modes. "MQ" refers to "moderate or higher" quality MAGs (completeness >50%, contamination <10%) [8].

Table 1: Performance of Different Binning Modes on a Marine Dataset (30 Samples)

| Binning Mode | Data Type | MQ MAGs Recovered | Near-Complete MAGs Recovered | Key Advantage |
|---|---|---|---|---|
| Single-Sample | Short-Read | 550 | 104 | Faster; suitable for individual sample analysis |
| Multi-Sample | Short-Read | 1,101 (100% more) | 306 (194% more) | Dramatically higher yield and quality [8] |
| Single-Sample | Long-Read | 796 | 123 | Better for long contiguous sequences |
| Multi-Sample | Long-Read | 1,196 (50% more) | 191 (55% more) | Superior recovery from long-read data [8] |

Detailed Methodology for MetaComBin-style Hybrid Binning

Objective: To cluster metagenomic sequencing reads into bins representing individual species by combining abundance and overlap-based signals.

Workflow Overview:

Step-by-Step Protocol:

Input Data Preparation:

Execute Abundance-Based Binning (Step 1):

- Run a tool like AbundanceBin on the quality-controlled reads.

- Principle: This tool models the sequencing procedure as a mixture of Poisson distributions, where the mean of each distribution represents the abundance of a species. It uses an expectation-maximization (EM) algorithm to estimate these abundances and perform an initial clustering [29].

- Output: This step produces initial clusters where each cluster contains reads from species with identical or very similar abundance levels [28].
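The Poisson mixture idea can be sketched in a few lines of EM. This toy version assumes the number of components is known and fits integer counts directly; AbundanceBin's own implementation (which works from l-mer counts in the reads and then assigns the reads) differs in its details, and the function names here are hypothetical.

```python
import math


def poisson_logpmf(x: int, lam: float) -> float:
    """log P(X = x) for a Poisson distribution with mean lam."""
    return x * math.log(lam) - lam - math.lgamma(x + 1)


def poisson_mixture_em(counts, init_lambdas, iters=200):
    """Hypothetical helper: fit a mixture of Poissons to integer counts with EM.

    counts:       observed counts (e.g. how often each l-mer occurs in the reads)
    init_lambdas: starting guesses for the component means; their number
                  fixes the number of components (assumed known here)
    Returns (weights, lambdas, responsibilities).
    """
    k = len(init_lambdas)
    weights = [1.0 / k] * k
    lambdas = list(init_lambdas)
    resp = []
    for _ in range(iters):
        # E-step: posterior probability that each count came from each component
        resp = []
        for x in counts:
            logs = [math.log(w) + poisson_logpmf(x, lam)
                    for w, lam in zip(weights, lambdas)]
            top = max(logs)                       # log-sum-exp for stability
            probs = [math.exp(v - top) for v in logs]
            total = sum(probs)
            resp.append([p / total for p in probs])
        # M-step: re-estimate mixing weights and component means
        for j in range(k):
            rj = max(sum(r[j] for r in resp), 1e-12)
            weights[j] = max(rj / len(counts), 1e-12)
            lambdas[j] = max(sum(r[j] * x for r, x in zip(resp, counts)) / rj, 1e-9)
    return weights, lambdas, resp
```

Each count is then assigned to the component with the highest responsibility, which is the sense in which reads from species with similar abundance end up in the same coarse cluster.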

Execute Overlap-Based Binning (Step 2):

- For each coarse cluster generated in Step 1, run an overlap-based binning tool like MetaProb.

- Principle: MetaProb first groups reads based on their overlap (shared k-mers). It then extracts a normalized k-mer profile from representative reads in each group to create a "signature." Finally, it uses a clustering algorithm (like k-means) on these signatures to generate the final, refined bins [28].

- Key Parameter: The `-k` parameter (number of clusters) can be estimated by MetaProb itself using a statistical test, which is crucial for real-world datasets where the number of species is unknown [28].
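The overlap-grouping idea behind MetaProb's first step can be illustrated with a union-find over reads that share q-mers. This is a simplified sketch, not MetaProb's algorithm: the function name and the `min_shared` threshold are hypothetical, reverse complements are ignored, and the pairwise counting is only practical for small inputs.

```python
from collections import Counter, defaultdict
from itertools import combinations


def group_reads_by_shared_qmers(reads, q=31, min_shared=1):
    """Hypothetical helper: union reads sharing >= min_shared distinct q-mers.

    Returns a list of groups, each a list of read indices.
    """
    parent = list(range(len(reads)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]   # path halving
            i = parent[i]
        return i

    index = defaultdict(set)                # q-mer -> reads containing it
    for r, seq in enumerate(reads):
        for i in range(len(seq) - q + 1):
            index[seq[i:i + q]].add(r)

    shared = Counter()                      # (read_a, read_b) -> shared q-mers
    for rs in index.values():
        for a, b in combinations(sorted(rs), 2):
            shared[(a, b)] += 1

    for (a, b), n in shared.items():
        if n >= min_shared:
            parent[find(a)] = find(b)

    groups = defaultdict(list)
    for r in range(len(reads)):
        groups[find(r)].append(r)
    return list(groups.values())
```

In MetaProb, a k-mer signature is then computed for each such group and the signatures, rather than individual reads, are clustered, which is what makes the second step more robust than clustering reads one by one.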

Output and Downstream Processing:

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 2: Key Software Tools and Resources for Hybrid Binning and MAG Recovery

| Tool / Resource | Category | Primary Function | Relevance to Hybrid Binning |
|---|---|---|---|
| AbundanceBin [29] | Abundance Binner | Groups reads based on coverage (abundance) levels. | Forms the first stage of the MetaComBin pipeline, creating initial coarse clusters [28]. |
| MetaProb [28] | Composition/Overlap Binner | Groups reads based on sequence composition and overlap. | Forms the second, refining stage of the MetaComBin pipeline [28]. |
| CheckM2 [8] | Quality Assessment | Estimates completeness and contamination of MAGs. | Essential for benchmarking and validating the quality of bins produced by any method. |
| MetaWRAP [30] [8] | Bin Refinement | Consolidates and refines bins from multiple binning tools. | Can be used to further improve the quality of bins generated by a hybrid approach. |
| metaSPAdes [32] [11] | Assembler | Assembles sequencing reads into longer contigs. | Used downstream to assemble the binned reads into MAGs. |
| Bowtie2 [31] [32] | Read Mapping | Maps reads to a reference genome. | Used for decontamination (removing host reads) and for generating coverage profiles for some binners. |

Frequently Asked Questions (FAQs) on Two-Round Binning

Q1: What specific problems does a two-round binning strategy solve that traditional one-round methods do not?

Traditional unsupervised binning methods often fail in two common scenarios: (1) samples containing many extremely low-abundance species (≤5x coverage), which create noise that interferes with binning even higher-abundance species, and (2) samples containing low-abundance species (6x-10x coverage) that do not have sufficient coverage to be grouped confidently using a single, strict set of parameters [33]. A two-round strategy directly addresses this by first filtering out noise and then targeting the distinct groups with optimized parameters.

Q2: Why is a single fixed 'w' value (for w-mer grouping) insufficient, and how does MetaCluster 5.0 adapt?

The choice of the w-mer length involves a trade-off. A large w value reduces false positives (reads from different species mixing) but produces groups that are too small for low-abundance species due to insufficient coverage. A smaller w value creates larger groups that can include low-abundance reads but drastically increases false positives from noise [33]. MetaCluster 5.0 adapts by using multiple w values: a large w with high confidence for high-abundance species in the first round, and a relaxed (shorter) w to connect reads from low-abundance species in the second round [33] [34].
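The trade-off can be quantified with a rough estimate. Under idealized assumptions that are not from the source (error-free reads of length L, a single strand, read starts spread uniformly at coverage/L per base), another read shares at least one w-mer with a given read only if their overlap is at least w bases, i.e. its start lies within L - w bases on either side. Chance matches are approximated by comparing one w-mer against a random background; the function names and the 1 Gbp background are hypothetical.

```python
def expected_true_neighbours(coverage: float, read_len: int, w: int) -> float:
    """Expected number of other reads overlapping a given read by >= w bases.

    Idealized: error-free reads, one strand, uniform read starts at
    coverage / read_len starts per base.
    """
    if w > read_len:
        return 0.0
    return coverage / read_len * (2 * (read_len - w) + 1)


def expected_random_hits(w: int, background_bp: float) -> float:
    """Expected chance occurrences of one w-mer in random background sequence."""
    return background_bp / 4 ** w


if __name__ == "__main__":
    # 100 bp reads; 5x (low-abundance) vs 50x (dominant); 1 Gbp background
    for w in (20, 36, 50, 75):
        print(w,
              round(expected_true_neighbours(5, 100, w), 2),
              round(expected_true_neighbours(50, 100, w), 2),
              expected_random_hits(w, 1e9))
```

At 5x, a read expects only about 2.6 partners with w = 75 but about 6.5 with w = 36, while shorter w-mers also raise the chance of spurious matches; this is the tension that a two-round, two-w strategy resolves.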

Q3: My binning results have high contamination. What is the most likely cause and how can I troubleshoot this?

High contamination often results from incorrectly merging groups from different species. This frequently occurs when the data contain many species with a continuous spectrum of abundances, which leaves no clear gaps between species and lets their groups blend together [33].

- Troubleshooting Steps:

  - Verify Abundance Distribution: Check the abundance distribution of your contigs or reads. A smooth, continuous spectrum is more challenging for binning.

  - Re-evaluate Filtering: Ensure your initial filtering step to remove reads from extremely low-abundance species (e.g., ≤5x) is functioning correctly. Overly relaxed filtering will allow noise to persist.

  - Inspect Parameter Sensitivity: If using a modern tool, try adjusting clustering sensitivity parameters. For instance, tools like LorBin use an adaptive DBSCAN that can be fine-tuned to improve cluster separation and purity [7].
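To check the abundance distribution, the sketch below counts contigs in the coverage classes used above (≤5x and 6-10x) and builds a log2-scaled histogram; a histogram that rises and falls smoothly without gaps between modes is the continuous spectrum that makes separation hard. The function names and class keys are hypothetical, and the input coverages would come from your own depth table.

```python
import math
from collections import Counter


def abundance_classes(coverages, extreme_max=5, low_max=10):
    """Hypothetical helper: count contigs per coverage class.

    extremely_low: cov <= extreme_max
    low:           extreme_max < cov <= low_max
    higher:        cov > low_max
    """
    classes = {"extremely_low": 0, "low": 0, "higher": 0}
    for cov in coverages:
        if cov <= extreme_max:
            classes["extremely_low"] += 1
        elif cov <= low_max:
            classes["low"] += 1
        else:
            classes["higher"] += 1
    return classes


def log2_histogram(coverages):
    """Counts per log2 bin; bin k holds coverage in [2**k, 2**(k+1))."""
    counts = Counter(math.floor(math.log2(c)) for c in coverages if c > 0)
    return dict(sorted(counts.items()))
```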

Q4: Are two-round binning strategies relevant for long-read metagenomic data?

Yes, the core principle is not only relevant but has been adapted and extended in advanced binners for long-read data. While the specific implementation may differ from MetaCluster 5.0, modern tools like LorBin deploy sophisticated multi-stage clustering (e.g., DBSCAN followed by BIRCH) with iterative assessment and reclustering decisions to handle the challenges of long-read assemblies and imbalanced species distributions [7]. This demonstrates the enduring value of the sequential filtering and clustering concept.

Q5: Beyond improving bin quality, what is the practical value of recovering low-abundance genomes?

Recovering low-abundance genomes is critical for comprehensive biological insight. These genomes can be highly significant in disease contexts. For example, in colorectal cancer studies, researchers found that low-abundance genomes were more important than dominant ones for accurately classifying disease and healthy metagenomes, achieving over 0.90 AUROC (Area Under the Receiver Operating Characteristic curve) [4]. This highlights that key functional roles can reside within the "rare biosphere."

Performance Data and Experimental Protocols

Performance Comparison of Binning Strategies

The following table summarizes quantitative performance data, illustrating the effectiveness of two-round and other advanced binning strategies compared to earlier tools.

Table 1: Benchmarking Performance of Metagenomic Binning Tools

| Tool / Strategy | Data Type | Key Advantage | Reported Performance Gain | Reference / Use-Case |
|---|---|---|---|---|
| MetaCluster 5.0 (Two-round) | Short-read NGS | Identifies low-abundance (6x-10x) species in noisy samples. | Identified 3 low-abundance species missed by MetaCluster 4.0; 92% precision, 87% sensitivity. | [33] |
| COMEBin (Contrastive Learning) | Short/Long/Hybrid | Robust embedding generation via data augmentation. | Ranked 1st in 4 out of 7 data-binning combinations in benchmark. | [8] |
| Multi-sample Binning (Mode) | Short-read | Leverages co-abundance across samples. | Recovered 100% more moderate-or-higher quality (MQ) MAGs and 194% more near-complete (NC) MAGs than single-sample binning on marine data. | [8] |
| LorBin (Multi-stage for long-read) | Long-read | Handles imbalanced species distribution and unknown taxa. | Generated 15–189% more high-quality MAGs than state-of-the-art binners. | [7] |
| metaSPAdes + MetaBAT2 (Assembly-Binner Combo) | Short-read | Effective for low-abundance species recovery. | Highly effective combination for recovering low-abundance species (<1%). | [11] |

Key Experimental Protocol: Co-assembly and Binning for Disease Association Studies

The following workflow, derived from a colorectal cancer study [4], details a protocol for recovering low-abundance and uncultivated species from multiple metagenomic samples.

Objective: To recover Metagenome-Assembled Genomes (MAGs), including low-abundance and uncultivated species, for association with a phenotype (e.g., disease).

Workflow Description: The process starts with the collection of metagenomic samples from different cohorts. Reads from all samples within a cohort are pooled and co-assembled de novo to increase effective sequencing depth. The resulting scaffolds are binned to form draft MAGs. These MAGs are then assessed for quality, and only those meeting medium-quality thresholds are retained. The quality-filtered MAGs are taxonomically annotated, which helps identify potential uncultivated species. Finally, the abundance of each MAG is profiled across all individual samples. This abundance matrix is used in downstream statistical analysis to associate specific MAGs with the phenotype of interest.
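The last two steps, quality filtering and per-sample abundance profiling, reduce to simple bookkeeping once per-contig coverage is available for every sample. The minimal sketch below assumes that coverage has already been computed by mapping each sample's reads back to the co-assembly. Length-weighted mean coverage is one common abundance measure, not necessarily the one used in [4]. All names and numbers are hypothetical.

```python
def passes_medium_quality(completeness, contamination):
    """Medium-quality cutoff: >=50% completeness and <10% contamination."""
    return completeness >= 50.0 and contamination < 10.0


def mag_abundance(contig_len, contig_cov, mag_contigs):
    """Length-weighted mean coverage of each MAG in a single sample."""
    profile = {}
    for mag, contigs in mag_contigs.items():
        total_len = sum(contig_len[c] for c in contigs)
        weighted = sum(contig_len[c] * contig_cov[c] for c in contigs)
        profile[mag] = weighted / total_len
    return profile


def abundance_matrix(contig_len, cov_by_sample, mag_contigs):
    """Nested dict sample -> MAG -> abundance, ready for downstream statistics."""
    return {s: mag_abundance(contig_len, cov, mag_contigs) for s, cov in cov_by_sample.items()}


# Hypothetical inputs
contig_len = {"k1": 10_000, "k2": 30_000, "k3": 20_000}
mag_contigs = {"MAG_A": ["k1", "k2"], "MAG_B": ["k3"]}
quality = {"MAG_A": (92.0, 1.5), "MAG_B": (45.0, 2.0)}  # (completeness, contamination)
cov_by_sample = {
    "S1": {"k1": 4.0, "k2": 8.0, "k3": 1.0},
    "S2": {"k1": 0.0, "k2": 2.0, "k3": 6.0},
}

kept = {m: cs for m, cs in mag_contigs.items() if passes_medium_quality(*quality[m])}
print(abundance_matrix(contig_len, cov_by_sample, kept))
# {'S1': {'MAG_A': 7.0}, 'S2': {'MAG_A': 1.5}}
```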

Conceptual Diagram of Two-Round Adaptive Clustering

This diagram visualizes the core logic of a two-stage or multi-stage clustering approach as used in modern binners like LorBin [7], which shares MetaCluster 5.0's principle of iterative refinement.

Diagram Description: Embedded feature data is first processed by an adaptive DBSCAN clustering algorithm. An iterative assessment model then evaluates the resulting clusters. High-quality clusters are sent directly to the final bin pool. Low-quality clusters and unclustered data are forwarded to a second stage of clustering that uses a different algorithm (BIRCH). The results of this second stage are also assessed, and the high-quality outputs are added to the final bin pool to maximize the recovery of quality genomes.
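The control flow of the diagram can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not LorBin's implementation:

- Embeddings are plain 2-D tuples.
- The assessment model is replaced by a hypothetical size check. LorBin's real model evaluates bin quality.
- The second stage reruns DBSCAN with relaxed parameters as a plainly named stand-in for BIRCH.

All function names and thresholds are hypothetical.

```python
import math


def dbscan(points, eps, min_pts):
    """Plain DBSCAN: one label per point, -1 for noise."""
    n = len(points)
    labels = [None] * n

    def neighbors(i):
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        nbrs = neighbors(i)
        if len(nbrs) < min_pts:
            labels[i] = -1  # noise for now; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in nbrs if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise point becomes a border point
                continue
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_nbrs = neighbors(j)
            if len(j_nbrs) >= min_pts:  # only core points expand the cluster
                queue.extend(j_nbrs)
    return labels


def groups(labels):
    """Collect point indices per cluster label, ignoring noise."""
    out = {}
    for idx, lab in enumerate(labels):
        if lab != -1:
            out.setdefault(lab, []).append(idx)
    return list(out.values())


def passes_assessment(members, min_size):
    """HYPOTHETICAL stand-in for a bin-quality assessment model: a size check only."""
    return len(members) >= min_size


def two_stage_binning(points, eps1, eps2, min_pts, min_size):
    final_bins, leftover = [], []
    labels = dbscan(points, eps1, min_pts)
    # Stage 1: strict DBSCAN; clusters that pass go straight to the bin pool
    for members in groups(labels):
        if passes_assessment(members, min_size):
            final_bins.append(members)
        else:
            leftover.extend(members)
    leftover.extend(i for i, lab in enumerate(labels) if lab == -1)  # unclustered points
    # Stage 2: relaxed DBSCAN on the leftovers (stand-in for LorBin's BIRCH stage)
    sub_points = [points[i] for i in leftover]
    for members in groups(dbscan(sub_points, eps2, min_pts)):
        original_ids = [leftover[k] for k in members]
        if passes_assessment(original_ids, min_size):
            final_bins.append(original_ids)
    return final_bins


points = [(0, 0), (0.1, 0), (0, 0.1), (0.1, 0.1),   # dense group
          (5, 5), (5.6, 5), (5, 5.6), (5.6, 5.6),   # sparser group
          (20, 20)]                                 # isolated point
print(two_stage_binning(points, eps1=0.2, eps2=1.0, min_pts=3, min_size=4))
# [[0, 1, 2, 3], [4, 5, 6, 7]]
```

In this example the strict first stage recovers only the dense group. The sparser group, which stands in for a lower-coverage genome, is recovered in the second stage. The isolated point stays unbinned.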

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Software Tools and Algorithms for Advanced Metagenomic Binning

| Tool / Algorithm | Category / Function | Brief Description of Role |
|---|---|---|
| MetaCluster 5.0 | Two-round Binner | Reference implementation of a two-round strategy for short reads, using w-mer filtering and separate grouping for high/low-abundance species [33]. |
| MetaBAT 2 | Coverage + Composition Binner | Uses tetranucleotide frequency and coverage to compute pairwise contig similarities, then clusters contigs with a modified label propagation algorithm. Often used in effective assembly-binner combinations [8] [11]. |
| COMEBin | Deep Learning Binner | Applies contrastive learning to create robust contig embeddings, leading to high-performance clustering across multiple data types [8]. |
| VAMB | Deep Learning Binner | Uses a Variational Autoencoder (VAE) to integrate sequence composition and coverage before clustering. A key benchmark tool [8] [7]. |
| LorBin | Long-read Binner | Employs a self-supervised VAE and two-stage multiscale clustering (DBSCAN & BIRCH) for long-read data, ideal for imbalanced samples [7]. |
| MetaWRAP | Bin Refinement Tool | Combines bins from multiple tools to produce higher-quality consensus MAGs, often improving overall results [8]. |
| CheckM 2 | Quality Assessment | Standard tool for assessing MAG quality. It estimates completeness and contamination with machine-learning models, whereas the original CheckM relies on lineage-specific single-copy marker genes [8]. |
| GTDB-Tk | Taxonomic Classification | Assigns taxonomic labels to MAGs based on the Genome Taxonomy Database, crucial for identifying novel/uncultivated species [4]. |

Metagenome-assembled genomes (MAGs) have revolutionized our understanding of microbial communities, enabling researchers to study uncultured microorganisms directly from their natural environments. However, the recovery of high-quality genomes, particularly for low-abundance species (<1% relative abundance) and distinct strains, remains a significant challenge in metagenomic research. The selection of computational tools—specifically the combination of metagenomic assemblers and genome binning tools—profoundly impacts the quality, completeness, and biological relevance of recovered genomes [11] [35].

Research has demonstrated that different assembler-binner combinations excel at distinct biological objectives, making tool selection a critical consideration in experimental design [11]. A recent comprehensive evaluation revealed that the metaSPAdes-MetaBAT2 combination is highly effective for recovering low-abundance species, while MEGAHIT-MetaBAT2 excels at strain-resolved genomes [11] [35]. This technical support guide provides evidence-based recommendations for selecting optimal tool combinations, troubleshooting common issues, and implementing robust protocols for genome-resolved metagenomics focused on low-abundance species research.

FAQ: Assembler and Binner Selection Strategy

Q1: Why does the choice of assembler-binner combination matter for studying low-abundance species?

Low-abundance species present particular challenges in metagenomic analysis due to their limited sequence coverage and increased potential for assembly artifacts. Different computational tools employ distinct algorithms and have varying sensitivities for detecting rare sequences amidst dominant populations [11] [35]. The combinatorial effect of assemblers and binners significantly influences recovery rates, with studies showing dramatic variations in the number and quality of MAGs recovered from identical datasets [11]. Proper tool selection ensures that valuable biological information about these rare but potentially functionally important community members is not lost.

Q2: What are the key differences between the leading assemblers for metagenomics?

The three most widely used assemblers—metaSPAdes, MEGAHIT, and IDBA-UD—each have distinct strengths and trade-offs:

metaSPAdes generally produces more contiguous assemblies with higher accuracy but requires substantial computational resources [35]. It demonstrates particular effectiveness for recovering genomic context from complex communities.

MEGAHIT prioritizes computational efficiency, making it suitable for resource-limited settings or very large datasets, though this can come at the cost of increased misassemblies and reduced contiguity compared to metaSPAdes [35].

IDBA-UD performs well with uneven sequencing depth, which can be advantageous for communities with extreme abundance variations [35].
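Contiguity, the main axis on which these assemblers differ, is usually summarized with N50. N50 is the length L such that contigs of length L or longer contain at least half of the assembled bases. The minimal sketch below can be used to compare assemblies of the same sample; the contig lengths are hypothetical. N50 alone does not capture misassemblies, which are the trade-off noted for MEGAHIT above.

```python
def n50(contig_lengths):
    """Smallest length L such that contigs >= L hold at least half of all assembled bases."""
    lengths = sorted(contig_lengths, reverse=True)
    half = sum(lengths) / 2
    running = 0
    for length in lengths:
        running += length
        if running >= half:
            return length
    return 0  # empty assembly


# Hypothetical contig lengths (bp) from one assembly
print(n50([5000, 4000, 3000, 2000, 1000]))  # 4000
```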

Q3: Which binning approaches show the best performance for complex microbial communities?

Modern binning tools employ different algorithmic strategies. Hybrid methods that combine sequence composition and coverage information generally outperform single-feature approaches [5] [8]. Performance varies significantly across datasets. The main approaches work as follows:

MetaBAT 2 uses tetranucleotide frequency and coverage to calculate pairwise contig similarities, then applies a modified label propagation algorithm for clustering [8].

MaxBin 2.0 employs an Expectation-Maximization algorithm that uses tetranucleotide frequencies and coverages to estimate the likelihood of contigs belonging to particular bins [8].

CONCOCT integrates sequence composition and coverage, performs dimensionality reduction using PCA, and applies Gaussian mixture models for clustering [8].

Ensemble methods like MetaBinner and BASALT leverage multiple binning strategies or refine outputs from several tools, often producing superior results by combining their complementary strengths [36] [16].
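MetaBAT 2, MaxBin 2.0 and CONCOCT all use the same composition feature: the tetranucleotide frequency (TNF) of each contig. The minimal sketch below computes it under two common conventions. First, each 4-mer is merged with its reverse complement, which gives 136 canonical tetramers. Second, 4-mers containing ambiguous bases are skipped. Individual tools differ in details such as pseudocounts and normalization.

```python
from itertools import product

_COMPLEMENT = str.maketrans("ACGT", "TGCA")


def revcomp(seq):
    """Reverse complement of an ACGT string."""
    return seq.translate(_COMPLEMENT)[::-1]


# Merge each 4-mer with its reverse complement: 136 canonical tetramers
CANONICAL = sorted({min(k, revcomp(k)) for k in ("".join(p) for p in product("ACGT", repeat=4))})


def tnf(seq):
    """Normalized canonical tetranucleotide frequency vector of one contig."""
    seq = seq.upper()
    counts = dict.fromkeys(CANONICAL, 0)
    for i in range(len(seq) - 3):
        kmer = seq[i:i + 4]
        if set(kmer) <= set("ACGT"):  # skip 4-mers with N or other ambiguity codes
            counts[min(kmer, revcomp(kmer))] += 1
    total = sum(counts.values())
    return [counts[k] / total if total else 0.0 for k in CANONICAL]


print(len(CANONICAL))  # 136
vec = tnf("ACGTACGTAAAATTTTGGCC")
print(round(sum(vec), 6))  # 1.0
```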

Q4: How does multi-sample binning compare to single-sample approaches?

Multi-sample binning (using coverage information across multiple metagenomes) significantly outperforms single-sample binning across various data types. Benchmark studies demonstrate that multi-sample binning recovers 125% more moderate-or-higher quality MAGs from marine short-read data, 54% more from long-read data, and 61% more from hybrid data compared to single-sample approaches [8]. This method is particularly valuable for identifying potential antibiotic resistance gene hosts and discovering near-complete strains containing biosynthetic gene clusters [8].
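Multi-sample binning works because contigs from the same genome rise and fall together across samples. A single sample, by contrast, provides only one coverage value per contig. The minimal sketch below shows this co-abundance signal as the Pearson correlation between per-sample coverage profiles. The coverage values are hypothetical. Real binners combine such profiles with composition features rather than relying on correlation alone.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length coverage profiles."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # undefined for constant profiles; 0.0 by convention here
    return cov / (sx * sy)


# Hypothetical mean coverage of three contigs across four samples
profiles = {
    "contig_1": [2.0, 10.0, 4.0, 0.5],
    "contig_2": [2.2, 9.5, 4.1, 0.6],
    "contig_3": [8.0, 1.0, 3.0, 9.0],
}
for other in ("contig_2", "contig_3"):
    r = pearson(profiles["contig_1"], profiles[other])
    print(f"contig_1 vs {other}: r = {r:.3f}")
```

Here contig_1 and contig_2 track each other closely and are candidates for the same bin. contig_3 moves in the opposite direction.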

Performance Comparison Tables

Table 1: Performance of Assembler-Binner Combinations for Specific Research Goals

| Research Objective | Recommended Combination | Key Performance Findings | Considerations |
|---|---|---|---|
| Recovery of low-abundance species (<1%) | metaSPAdes + MetaBAT2 | Highly effective for low-abundance taxa; recovers more usable quality genomes from rare community members [11] | Computationally intensive; requires substantial memory resources |
| Strain-resolved genomes | MEGAHIT + MetaBAT2 | Excels at distinguishing closely related strains; maintains good resolution of strain variation [11] [35] | Balance between efficiency and assembly quality |
| General-purpose binning | metaSPAdes + COMEBin | Ranks first in multiple data-binning combinations in recent benchmarks [8] | Emerging method with limited community adoption currently |
| Large-scale or resource-limited projects | MEGAHIT + MaxBin2 | Computationally efficient option for screening large datasets [35] | Potential trade-off in genome completeness and contamination rates |

Table 2: Benchmarking Results of Top Binning Tools Across Data Types (2025 Benchmark)

| Binning Tool | Short-Read Multi-Sample | Long-Read Multi-Sample | Hybrid Data | Key Strengths |
|---|---|---|---|---|
| COMEBin | Ranking: 1st | Ranking: 1st | Ranking: 1st | Uses contrastive learning; excellent across data types [8] |
| MetaBinner | Ranking: 2nd | Ranking: 2nd | Ranking: 2nd | Stand-alone ensemble method; multiple feature types [8] [36] |
| Binny | Ranking: 1st (co-assembly) | Not top-ranked | Not top-ranked | Excels in short-read co-assembly scenarios [8] |
| MetaBAT 2 | Efficient binner | Efficient binner | Efficient binner | Balanced performance and computational efficiency [8] |

Experimental Protocols

Protocol: Optimized Workflow for Low-Abundance Species Recovery

Principle: This protocol leverages the metaSPAdes-MetaBAT2 combination specifically optimized for recovering low-abundance taxa from metagenomic samples [11] [35].

Step-by-Step Methodology:

Sample Preparation and Sequencing:

- Extract high-molecular-weight DNA using kits optimized for microbial communities

- Prepare Illumina short-read libraries with appropriate insert sizes

- Sequence to sufficient depth (recommended: 10-20 Gb per sample for complex communities)
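A back-of-the-envelope estimate shows why depth matters for rare taxa. A genome's expected coverage is roughly total sequenced bases × its share of the reads ÷ its genome size. The minimal sketch below assumes a hypothetical 5 Mb genome and measures relative abundance as the fraction of sequenced bases, not cell counts. It ignores duplicates, QC losses and uneven coverage.

```python
def expected_coverage(sequenced_bases, relative_abundance, genome_size):
    """Rough fold-coverage: the genome's share of sequenced bases divided by its length."""
    return sequenced_bases * relative_abundance / genome_size


GENOME_SIZE = 5e6  # hypothetical 5 Mb bacterial genome
for depth_gb in (10, 20):
    for abundance in (0.01, 0.001):
        cov = expected_coverage(depth_gb * 1e9, abundance, GENOME_SIZE)
        print(f"{depth_gb} Gb, {abundance:.1%} abundance -> ~{cov:.0f}x")
```

Under these assumptions, a species at 0.1% relative abundance receives only about 2x coverage from 10 Gb of sequence. This helps explain why species below 1% are difficult to recover.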

Quality Control and Preprocessing:

- Assess read quality with FastQC (v0.12.1 or higher)

- Preprocess reads with fastp (v0.23.2), using the parameters --detect_adapter_for_pe and --dedup [35], to:

  - Remove low-quality bases (Q < 20)

  - Remove adapter sequences

  - Remove duplicate reads
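The minimal Python sketch below assembles the fastp command line for one paired-end sample without running it. File names are placeholders. Mapping "Q < 20" onto fastp's -q 20 is an assumption. That option sets the Phred score below which a base counts as unqualified, and fastp drops reads with too many such bases. Sliding-window trimming options such as --cut_right are an alternative.

```python
import shlex


def fastp_cmd(r1, r2, out1, out2, min_qual=20, threads=8):
    """Build (but do not run) a fastp command for paired-end QC; file names are placeholders."""
    return [
        "fastp",
        "-i", r1, "-I", r2,            # input read pair
        "-o", out1, "-O", out2,        # cleaned output read pair
        "--detect_adapter_for_pe",     # auto-detect adapters for paired-end data
        "--dedup",                     # remove duplicate reads
        "-q", str(min_qual),           # Phred threshold for a base to count as qualified
        "-w", str(threads),            # worker threads
        "-h", "fastp.html", "-j", "fastp.json",  # HTML and JSON reports
    ]


cmd = fastp_cmd("S1_R1.fq.gz", "S1_R2.fq.gz", "S1_R1.clean.fq.gz", "S1_R2.clean.fq.gz")
print(shlex.join(cmd))
# To execute: subprocess.run(cmd, check=True)
```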

Metagenome Assembly:

- Assemble high-quality reads using metaSPAdes (v3.15.3 or higher)

- Run with default parameters, and keep only contigs of at least 2000 bp for binning

- Execute assembly on a high-memory compute node (recommended: 256+ GB RAM for complex communities)
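One way to apply the 2000 bp cutoff is as a post-assembly filter on the contigs file that metaSPAdes writes (contigs.fasta in its output directory). The minimal sketch below uses only the standard library. The function names are hypothetical, and the demo uses in-memory lines instead of a real file.

```python
def read_fasta(lines):
    """Yield (header, sequence) pairs from FASTA-formatted lines."""
    header, chunks = None, []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if header is not None:
                yield header, "".join(chunks)
            header, chunks = line[1:], []
        else:
            chunks.append(line)
    if header is not None:
        yield header, "".join(chunks)


def filter_contigs(records, min_len=2000):
    """Keep contigs at or above the minimum length used for binning."""
    return [(h, s) for h, s in records if len(s) >= min_len]


# With a real assembly: with open("contigs.fasta") as fh: kept = filter_contigs(read_fasta(fh))
demo = [">contig_1", "A" * 1500, "C" * 600, ">contig_2", "G" * 900]
kept = filter_contigs(read_fasta(demo))
print([h for h, _ in kept])  # ['contig_1']
```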

Binning with MetaBAT 2:

- Generate coverage information by mapping reads to contigs using Bowtie2 or BWA

- Run MetaBAT 2 (v1.7 or higher) with default parameters

- Use the metaSPAdes_metabat.sh script for automated workflow execution
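The contents of the metaSPAdes_metabat.sh script are not given here. The minimal sketch below is a hypothetical dry-run alternative that prints a typical Bowtie2 → samtools → MetaBAT 2 sequence for one sample. It assumes these tools are installed under their usual command names. The index name, file names and output prefix are placeholders.

```python
import shlex


def binning_script(contigs, r1, r2, sample="S1", threads=8):
    """Return shell lines (dry run) for read mapping, depth summary and MetaBAT 2 binning."""
    idx, bam, t = "contigs_idx", f"{sample}.sorted.bam", str(threads)
    steps = [
        [["bowtie2-build", contigs, idx]],
        # bowtie2 writes SAM to stdout; samtools sorts it from stdin ("-") into a BAM
        [["bowtie2", "-x", idx, "-1", r1, "-2", r2, "-p", t],
         ["samtools", "sort", "-@", t, "-o", bam, "-"]],
        # per-contig depth table from the sorted BAM
        [["jgi_summarize_bam_contig_depths", "--outputDepth", "depth.txt", bam]],
        # MetaBAT 2 with default parameters; bins are written with the prefix bins/bin
        [["metabat2", "-i", contigs, "-a", "depth.txt", "-o", "bins/bin", "-t", t]],
    ]
    return [" | ".join(shlex.join(cmd) for cmd in step) for step in steps]


for line in binning_script("contigs.fasta", "S1_R1.clean.fq.gz", "S1_R2.clean.fq.gz"):
    print(line)
```

With default settings, MetaBAT 2 ignores contigs shorter than 2500 bp. Its -m option lowers this cutoff, with a floor of 1500 bp.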

Quality Assessment:

- Evaluate MAG quality using CheckM (v1.0.18) with its lineage workflow (lineage_wf), or with CheckM2

- Classify MAGs according to MIMAG standards:

  - High-quality: >90% completeness, <5% contamination (MIMAG additionally requires the 23S, 16S and 5S rRNA genes and at least 18 tRNAs)

  - Medium-quality: ≥50% completeness, <10% contamination [35]
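The minimal sketch below captures the MIMAG tiering logic. It assumes completeness and contamination come from CheckM or CheckM2. It also assumes rRNA and tRNA presence has been determined separately, because neither tool reports it. The function name and tier labels are hypothetical.

```python
def mimag_tier(completeness, contamination, has_rrnas=False, n_trnas=0):
    """Assign a MIMAG draft tier from completeness/contamination estimates (percent)."""
    if completeness > 90 and contamination < 5 and has_rrnas and n_trnas >= 18:
        return "high"
    if completeness >= 50 and contamination < 10:
        return "medium"
    if contamination < 10:
        return "low"
    return "none"  # contamination too high for any MIMAG tier


print(mimag_tier(95.2, 1.1, has_rrnas=True, n_trnas=20))  # high
print(mimag_tier(95.2, 1.1))                              # medium (rRNA/tRNA check not met)
print(mimag_tier(62.0, 12.5))                             # none
```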