Metagenomic Binning in 2025: A Comprehensive Guide to Tools, Methods, and Clinical Applications

Abstract

This article provides a timely and comprehensive analysis of the current landscape of metagenomic binning tools and computational methods. Tailored for researchers and drug development professionals, it explores the foundational principles of binning, from core concepts and key genomic features to the impact of sequencing technologies. It delivers a detailed methodological review of state-of-the-art algorithms, including deep learning and unsupervised clustering, and offers practical guidance for troubleshooting and optimizing pipelines for real-world datasets. Finally, the article presents a rigorous comparative analysis based on recent benchmarking studies, validating tool performance across various data types and binning modes to empower scientists in selecting the most effective strategies for their biomedical research.

The Foundations of Metagenomic Binning: Core Concepts and Sequencing Data Types

Defining Metagenomic Binning and Metagenome-Assembled Genomes (MAGs)

Metagenomic binning is the foundational computational process in microbial ecology that groups assembled contiguous genomic sequences (contigs) from a metagenomic sample and assigns them to the specific genomes of their origin [1]. This technique is essential because metagenomic samples are environmental in origin and typically consist of sequencing data from many unrelated organisms; for example, a single gram of soil can contain up to 18,000 different types of organisms, each with its own distinct genome [1]. Binning occurs after metagenomic assembly and represents the effort to associate fragmented contigs back with a genome of origin, resulting in a Metagenome-Assembled Genome (MAG) [1]. A MAG is a species-level microbial genome reconstructed entirely from complex microbial communities without the need for laboratory cultivation [2] [3].

The advent of MAGs has revolutionized microbial ecology by enabling the genome-resolved study of the vast majority of microorganisms that cannot be cultured under standard laboratory conditions—a limitation that previously restricted our understanding of more than 90% of microbial diversity [3]. MAGs have successfully been used to identify novel species and study remote or complex environments such as soil, water, or the human gut, thereby significantly extending the known tree of life [1] [4]. For instance, one approach on globally available metagenomes binned 52,515 individual microbial genomes and extended the diversity of bacteria and archaea by 44% [1]. The transition from traditional marker gene surveys (like 16S rRNA) to whole-genome recovery via MAGs has provided unprecedented access to the functional potential and ecological roles of uncultivated microorganisms [3].

Methodological Approaches to Binning

Binning methods exploit the fact that different genomes have distinct sequence composition patterns and can exhibit varying coverage depths across multiple samples [1] [5]. These methods can be broadly categorized based on their underlying algorithms and learning approaches.

Table 1: Fundamental Binning Methodologies

| Method Category | Underlying Principle | Key Tools (Examples) | Advantages | Limitations |
|---|---|---|---|---|
| Composition-Based | Clusters contigs based on intrinsic genomic signatures like GC-content, codon usage, or tetranucleotide frequencies [1] [6]. | TETRA, Phylopythia, PCAHIER [1] | Effective at distinguishing genomes from different taxonomic groups. | Can struggle with closely related species or horizontally transferred genes [1]. |
| Coverage-Based | Groups contigs based on their abundance (read coverage) across multiple samples [5] [6]. | MaxBin, AbundanceBin [7] [8] | Can distinguish between species with similar DNA composition but different abundance levels. | Requires multiple samples to generate coverage profiles; struggles with species of similar abundance [8]. |
| Hybrid Methods | Integrates both compositional features and coverage profiles to improve accuracy [5] [6]. | MetaBAT 2, CONCOCT, SPHINX [1] [7] | Leverages multiple data sources, generally leading to higher binning accuracy. | Computationally more intensive than single-feature methods. |
| Supervised Binning | Uses known reference sequences and taxonomic labels to train classification models [1] [9]. | MEGAN, Phylopythia, SOrt-ITEMS [1] | High accuracy for classifying sequences from known taxa. | Dependent on database completeness; fails on novel organisms [9] [8]. |
| Unsupervised Binning | Clusters sequences without prior knowledge, based on intrinsic information [9] [8]. | CONCOCT, VAMB, MetaProb [7] [8] | Can discover novel species not present in any database. | No external labels to guide or validate the clustering process. |
| Semi-Supervised Binning | Combines limited labeled data with large sets of unlabeled data for learning [7] [9]. | SemiBin, CLMB [7] [9] | Improves learning where labeling is expensive or limited. | Complexity in algorithm design and training. |

Furthermore, modern approaches increasingly leverage machine learning and neural networks. A 2025 review identified 34 artificial neural network (ANN)-based binning tools, noting that deep learning approaches, such as convolutional neural networks (CNNs) and autoencoders, achieve higher accuracy and scalability than traditional methods [9]. Examples include VAMB, which uses a variational autoencoder, and SemiBin, which employs a semi-supervised deep siamese neural network [7].

Benchmarking Binning Tools and Workflows

Performance Across Data and Binning Modes

A comprehensive 2025 benchmark study evaluated 13 metagenomic binning tools using short-read, long-read, and hybrid data under three primary binning modes [7]:

- Co-assembly binning: All sequencing samples are assembled together, and the resulting contigs are binned with coverage information calculated across samples.

- Single-sample binning: Each sample is assembled and binned independently.

- Multi-sample binning: Each sample is assembled individually, but its contigs are binned using coverage information calculated across all samples.

The benchmark demonstrated that multi-sample binning generally exhibits optimal performance, substantially outperforming single-sample binning, particularly as the number of samples increases [7]. For instance, on a marine dataset with 30 metagenomic next-generation sequencing (mNGS) samples, multi-sample binning recovered 100% more moderate-quality MAGs, 194% more near-complete MAGs, and 82% more high-quality MAGs compared to single-sample binning [7]. The study also identified top-performing binners for various data-type and binning-mode combinations.

Table 2: High-Performance Binners for Different Data-Binning Combinations (Adapted from [7])

| Data-Binning Combination | Description | Top-Performing Binners |
|---|---|---|
| short_sin | Short-read data, single-sample binning | COMEBin, MetaBinner, SemiBin 2 |
| short_mul | Short-read data, multi-sample binning | COMEBin, VAMB, MetaBinner |
| short_co | Short-read data, co-assembly binning | Binny, COMEBin, MetaBinner |
| long_sin | Long-read data, single-sample binning | COMEBin, SemiBin 2, MetaBinner |
| long_mul | Long-read data, multi-sample binning | COMEBin, MetaBinner, SemiBin 2 |
| long_co | Long-read data, co-assembly binning | COMEBin, MetaBinner, MetaBAT 2 |
| hybrid_sin | Hybrid data, single-sample binning | COMEBin, MetaBinner, SemiBin 2 |

Impact of Sequencing Technology

The choice of sequencing technology profoundly impacts MAG quality. While Illumina short-read sequencing has been widely used for its cost-effectiveness and scalability, its short reads often result in fragmented assemblies, making binning challenging for complex communities [4] [6].

Long-read sequencing, particularly PacBio HiFi reads, provides major advantages [4]. HiFi reads are typically up to 25 kb long with 99.9% accuracy, making it possible to generate single-contig, complete MAGs because the reads are long enough to span repetitive regions and often entire microbial genomes [4]. Studies have consistently shown that HiFi sequencing produces more total MAGs and higher-quality MAGs than both short-read and other long-read technologies [4]. A 2024 preprint on the human gut microbiome found that using HiFi sequencing, improved metagenome assembly methods, and complementary binning strategies was "highly effective for rapidly cataloging microbial genomes in complex microbiomes" [4].

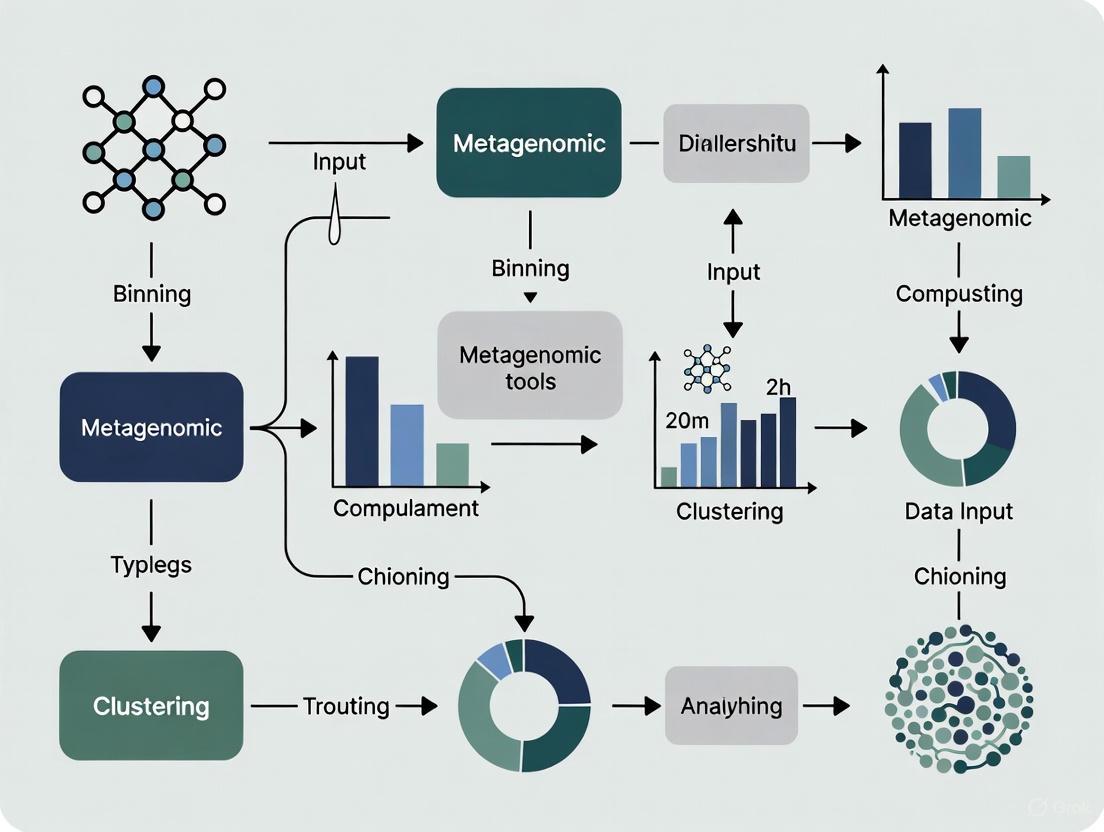

Diagram 1: MAG Reconstruction Workflow. The process flows from sample collection through DNA sequencing, assembly, binning, and finally quality assessment and analysis [2] [4] [6].

Experimental Protocols for MAG Generation and Validation

Standard Protocol for MAG Reconstruction

This protocol outlines the key steps for reconstructing MAGs from metagenomic sequencing data, integrating best practices from recent literature and benchmarks [7] [5] [6].

Step 1: Input Preparation

- Assembled Contigs (FASTA file): Generate contigs from raw metagenomic reads using an assembler such as MEGAHIT (for short-reads) or Flye (for long-reads) [6].

- Read Coverage Information (BAM file): Map the raw sequencing reads back to the assembled contigs using mapping software like Bowtie2 or BWA to generate coverage profiles [5].

Step 2: Binning Execution

- Select an appropriate binning tool based on your data type and binning mode (see Table 2). For a general-purpose, high-performance start, consider COMEBin or MetaBAT 2 [7].

- Example MetaBAT 2 usage: the `-m 1500` parameter sets the minimum contig length to 1500 bp, which is recommended to reduce noise [5].
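As a hedged illustration of the step above, the following Python sketch only assembles an argument list for a MetaBAT 2 run; it does not execute anything. The flags `-i` (input contigs), `-a` (depth table), `-o` (output prefix), and `-m` (minimum contig length) are MetaBAT 2 options, while the helper name `metabat2_command` and all file names are hypothetical placeholders.

```python
from typing import List


def metabat2_command(contigs_fasta: str, depth_file: str, out_prefix: str,
                     min_contig: int = 1500) -> List[str]:
    """Build an argument list for a MetaBAT 2 run (nothing is executed here)."""
    if min_contig < 1500:
        # MetaBAT 2 expects the minimum contig length to be at least 1500 bp.
        raise ValueError("min_contig must be >= 1500")
    return [
        "metabat2",
        "-i", contigs_fasta,    # assembled contigs (FASTA)
        "-a", depth_file,       # per-contig depth table
        "-o", out_prefix,       # prefix for the output bin FASTA files
        "-m", str(min_contig),  # minimum contig length in bp
    ]


# Hypothetical file names, for illustration only.
cmd = metabat2_command("contigs.fa", "depth.txt", "bins/bin")
print(" ".join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` on a system where MetaBAT 2 is installed.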

Step 3: Binning Refinement (Optional but Recommended)

- Use a bin refinement tool like MetaWRAP or MAGScoT to combine the results of multiple binners. This ensemble approach often yields higher-quality MAGs than any single binner [7]. A typical refinement run keeps only bins with at least 50% completeness and at most 10% contamination [7].

Step 4: Quality Assessment

- Assess the quality of the resulting MAGs using CheckM or CheckM2 [7] [6]. CheckM estimates completeness and contamination from lineage-specific sets of single-copy marker genes, each expected to occur exactly once per genome, whereas CheckM2 predicts the same two metrics with machine-learning models.

- Classify MAGs according to established standards [2] [7]:

  - Near-complete (NC): >90% complete, <5% contaminated.

  - High-quality (HQ): >90% complete, <5% contaminated, and contains 5S, 16S, 23S rRNA genes, and at least 18 tRNAs.

  - Medium-quality (MQ): >50% complete, <10% contaminated.
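The quality tiers above can be expressed as a small decision function. This is a minimal sketch, assuming completeness and contamination are given as percentages (as reported by CheckM-style tools); the function name `classify_mag` and its parameter names are hypothetical. Because every HQ genome also meets the NC criteria, the function returns the most stringent tier that applies.

```python
def classify_mag(completeness: float, contamination: float,
                 has_5s: bool = False, has_16s: bool = False,
                 has_23s: bool = False, trna_count: int = 0) -> str:
    """Return the most stringent quality tier a MAG satisfies."""
    if completeness > 90 and contamination < 5:
        # HQ adds rRNA and tRNA requirements on top of the NC thresholds.
        if has_5s and has_16s and has_23s and trna_count >= 18:
            return "HQ"
        return "NC"
    if completeness > 50 and contamination < 10:
        return "MQ"
    return "below MQ"


print(classify_mag(95.2, 1.3, True, True, True, 20))  # HQ
print(classify_mag(95.2, 1.3))                        # NC
print(classify_mag(72.0, 6.5))                        # MQ
```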

Protocol for Validating MAG Biological Reality

A critical challenge is confirming that a MAG, especially one from a novel species (a Hypothetical MAG or HMAG), represents a biologically real genome and not a computational artifact [2].

- Validation via Alignment (for SMAGs): If a reference genome from an isolate exists, a MAG can be validated as a Species-assigned MAG (SMAG) by demonstrating high Average Nucleotide Identity (ANI) (>97%) and high coverage (>90%) when aligned against the reference genome [2].

- Validation via Conservation (for HMAGs): For novel HMAGs, search for significant hits in large, independent MAG catalogs (e.g., the GEM catalog or human gut MAG catalogs). Finding a conserved hypothetical MAG (CHMAG) in an independent sample provides strong supporting evidence for its biological reality [2].

- Phylogenetic Placement: Use tools like GTDB-Tk to place the MAG into a reference phylogenetic tree. Consistent and robust placement adds confidence to the taxonomic and evolutionary interpretation of the MAG [1] [2].

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 3: Key Research Reagent Solutions for MAG Studies

| Item Name | Function/Application | Example Use-Case & Notes |
|---|---|---|
| Nucleic Acid Preservation Buffers | Stabilize microbial community DNA/RNA at the point of collection. | Use RNAlater or OMNIgene.GUT for fecal or gut content sampling when immediate freezing at -80°C is not feasible [3]. |
| High-Molecular-Weight DNA Extraction Kits | Extract long, unfragmented DNA strands crucial for long-read assembly. | Essential for PacBio HiFi or Nanopore sequencing to generate contiguous assemblies and high-quality MAGs [4] [3]. |
| PacBio HiFi Reads | Generate long, highly accurate sequencing reads for metagenome assembly. | Enables reconstruction of single-contig, complete MAGs, overcoming the fragmentation issues of short-read data [4]. |
| CheckM/CheckM2 Software | Assess MAG quality by estimating completeness and contamination. | A standard tool for benchmarking MAGs against established quality tiers (e.g., MQ, NC, HQ) [2] [7] [6]. |
| MetaWRAP Bin Refinement Module | Combine and refine bins from multiple binners to produce superior MAGs. | An ensemble approach that consistently recovers higher-quality MAGs than individual binners alone [7] [5]. |
| GTDB-Tk (Genome Taxonomy Database Toolkit) | Provide standardized taxonomic classification of MAGs. | Places MAGs into a consistent, genome-based taxonomy, crucial for comparative genomics and ecological interpretation [1] [2]. |

Metagenomic binning and the resulting MAGs have fundamentally transformed our ability to explore and understand the microbial world. By moving beyond the limitations of cultivation, researchers can now access the genomic blueprints of countless previously unknown organisms, dramatically expanding the tree of life and providing new insights into biogeochemical cycles, host-microbe interactions, and industrial processes. The field continues to advance rapidly, driven by improvements in long-read sequencing technologies, the development of more sophisticated machine learning-based binning algorithms, and the establishment of standardized validation protocols. As these methodologies mature, MAGs will undoubtedly remain a cornerstone of microbial ecology, environmental science, and biomedical research, unlocking further secrets of the planet's immense microbial dark matter.

Metagenomic binning is a crucial computational step in microbiome research that groups assembled DNA sequences (contigs) into metagenome-assembled genomes (MAGs) representing individual microbial populations [7]. This process enables researchers to study unculturable microorganisms and understand microbial community structure and function. Among the various approaches, methods leveraging k-mer frequencies and coverage profiles have proven particularly effective [5]. K-mer frequencies capture species-specific compositional signatures, while coverage profiles reflect abundance information across samples [10]. The integration of these heterogeneous features enables more accurate genome recovery, supporting diverse applications from antibiotic resistance tracking to natural product discovery [7].

This article examines the fundamental principles, computational methodologies, and practical applications of k-mer frequency and coverage profile analysis in metagenomic binning, providing both theoretical background and actionable protocols for research scientists and bioinformaticians.

Theoretical Foundations

k-mer Frequency Composition

A k-mer is a substring of length k from a biological sequence. For a DNA sequence of length L, there are L - k + 1 possible overlapping k-mers [11]. These k-mers serve as genomic signatures because their frequency distributions are remarkably consistent throughout a genome but vary between different genomes due to evolutionary pressures and molecular constraints.
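To make the L - k + 1 count concrete, here is a minimal sketch that slides a window of length k over a sequence and tallies each k-mer. The function name `count_kmers` and the toy sequence are illustrative assumptions.

```python
from collections import Counter


def count_kmers(seq: str, k: int) -> Counter:
    """Count all overlapping k-mers in seq using a sliding window."""
    seq = seq.upper()
    return Counter(seq[i:i + k] for i in range(len(seq) - k + 1))


seq = "ATGCGATGCA"            # toy sequence, L = 10
counts = count_kmers(seq, 4)  # k = 4
# The number of windows equals L - k + 1.
print(sum(counts.values()), len(seq) - 4 + 1)
print(counts.most_common(1))
```

Here the 4-mer ATGC occurs twice, so the seven windows yield six distinct k-mers.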

The biological forces affecting k-mer frequency operate at multiple levels [11]:

- GC-content (k=1): Variation in single-nucleotide composition is influenced by mechanisms like GC-biased gene conversion, which preferentially replaces AT base pairs with GC base pairs during recombination.

- Dinucleotide bias (k=2): Suppression of CG dinucleotides due to methylation-mediated deamination creates distinctive patterns that are relatively constant throughout a genome and can serve as phylogenetic markers.

- Codon usage bias (k=3): In coding regions, translational selection favors codons matching abundant tRNAs, creating species-specific triplet patterns.

- Tetranucleotide frequency (k=4): These patterns are hypothesized to maintain genetic stability and show strong phylogenetic conservation, making them particularly valuable for binning [5].

For binning applications, tetranucleotide frequencies (k=4) are most commonly employed due to their high phylogenetic signal, though some tools utilize multiple k-mer sizes or adaptive approaches [7].
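A tetranucleotide frequency vector can be sketched as follows. This example assumes a common convention in which each 4-mer is merged with its reverse complement, so that the result does not depend on which strand was assembled; that choice reduces the 256 possible 4-mers to 136 canonical ones. The helper names are hypothetical, and individual binners may normalize or project these vectors differently.

```python
from itertools import product

_COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    return seq.translate(_COMPLEMENT)[::-1]


def canonical(kmer: str) -> str:
    """Represent a k-mer and its reverse complement by one shared key."""
    return min(kmer, reverse_complement(kmer))


# 256 tetramers collapse to 136 canonical forms (120 pairs + 16 palindromes).
CANONICAL_TETRAMERS = sorted({canonical("".join(p))
                              for p in product("ACGT", repeat=4)})


def tetranucleotide_frequencies(seq: str) -> list:
    """Return relative frequencies of canonical tetramers, in sorted key order."""
    seq = seq.upper()
    counts = dict.fromkeys(CANONICAL_TETRAMERS, 0)
    total = 0
    for i in range(len(seq) - 3):
        kmer = seq[i:i + 4]
        if set(kmer) <= set("ACGT"):  # skip windows containing N or other symbols
            counts[canonical(kmer)] += 1
            total += 1
    if total == 0:
        return [0.0] * len(CANONICAL_TETRAMERS)
    return [counts[k] / total for k in CANONICAL_TETRAMERS]


tnf = tetranucleotide_frequencies("ATGCGATGCATTGACC")
print(len(tnf))
```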

Coverage Profiles

Coverage refers to the number of sequencing reads mapping to a contig, reflecting the relative abundance of that genomic segment in the sample [12]. In multi-sample binning, coverage profiles capture abundance patterns across multiple metagenomic samples, providing a powerful co-abundance signal for grouping contigs from the same genome [13].

The underlying principle is that contigs from the same genome will demonstrate similar coverage patterns across multiple samples, as their abundance fluctuates consistently under different environmental conditions or across different hosts [7]. This co-abundance signal is particularly effective for distinguishing between genomes with similar k-mer frequencies [5].
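The co-abundance idea can be illustrated with a correlation between coverage profiles. The sketch below uses invented coverage values for three hypothetical contigs across four samples; Pearson correlation is chosen here only for illustration and is not claimed to be the similarity measure of any specific binner.

```python
from math import sqrt


def pearson(x, y) -> float:
    """Pearson correlation between two equal-length coverage profiles."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a flat profile carries no co-abundance signal
    return cov / (sx * sy)


# Invented mean coverages across four samples (illustrative values only).
coverage = {
    "contig_1": [10.0, 40.0, 5.0, 22.0],
    "contig_2": [11.0, 38.0, 6.0, 20.0],  # tracks contig_1: likely same genome
    "contig_3": [30.0, 4.0, 25.0, 9.0],   # opposite pattern: likely different genome
}

same = pearson(coverage["contig_1"], coverage["contig_2"])
different = pearson(coverage["contig_1"], coverage["contig_3"])
print(round(same, 3), round(different, 3))
```

With more samples, the profiles become longer and the chance of two unrelated genomes sharing a pattern drops, which is consistent with the gains reported for multi-sample binning.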

Feature Integration Strategies

Effectively integrating k-mer frequency and coverage profile data remains challenging due to the heterogeneous nature of these features. Current binning tools employ various strategies [10]:

- Feature concatenation: Directly combining k-mer and coverage vectors (e.g., CONCOCT)

- Probabilistic multiplication: Multiplying probabilities derived from each feature type (e.g., MaxBin2)

- Weighted distance metrics: Combining similarity measures from both features (e.g., MetaBAT2)

- Deep learning integration: Using neural networks to learn joint representations (e.g., COMEBin, VAMB)

Recent advances in contrastive learning and multi-view representation learning have demonstrated particularly effective integration, significantly improving binning performance on complex real datasets [10].
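The simplest of the strategies listed above, feature concatenation, can be sketched in a few lines. The scaling used here (log-transforming coverage with a pseudocount, then L2-normalizing each feature block so neither dominates the distance) is an assumption made for illustration and is not the preprocessing of CONCOCT or any other named tool; all function names are hypothetical.

```python
from math import log, sqrt


def l2_normalize(vector):
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = sqrt(sum(v * v for v in vector))
    return list(vector) if norm == 0 else [v / norm for v in vector]


def concatenate_features(kmer_freqs, coverages, pseudocount=1.0):
    """Join composition and abundance features into one vector per contig."""
    log_cov = [log(c + pseudocount) for c in coverages]
    return l2_normalize(kmer_freqs) + l2_normalize(log_cov)


def euclidean(a, b) -> float:
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Toy inputs: a 4-value stand-in for a k-mer vector and 3-sample coverage.
f1 = concatenate_features([0.4, 0.3, 0.2, 0.1], [10.0, 40.0, 5.0])
f2 = concatenate_features([0.4, 0.3, 0.2, 0.1], [30.0, 4.0, 25.0])
print(len(f1), round(euclidean(f1, f2), 3))
```

In this toy case the two contigs have identical composition, so the whole distance between them comes from the coverage block, which is the situation where coverage adds information that k-mers alone cannot provide.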

Experimental Protocols

Coverage Profile Generation

Read Mapping-Based Coverage Calculation

This traditional approach provides precise coverage estimates but requires significant computational resources.

Materials:

- Assembled contigs (FASTA format)

- Raw sequencing reads from multiple samples (FASTQ format)

- Mapping tools: BWA (for short reads) or minimap2 (for long reads)

- Alignment processing tools: SAMtools, CoverM

Protocol:

- Create a mapping index from the assembled contigs.

- Map reads from each sample to the contigs.

- Sort and index the resulting BAM files.

- Calculate coverage profiles from the sorted BAM files.
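The final step of the protocol above amounts to averaging per-base depth over each contig. The following sketch assumes per-base depth is available as three tab-separated columns (contig, position, depth), the layout produced by `samtools depth` for a single BAM file; the function name `mean_coverage` and the toy data are hypothetical. Dedicated tools such as CoverM handle this at scale and should be preferred in practice.

```python
from collections import defaultdict


def mean_coverage(depth_lines, contig_lengths):
    """Compute mean depth per contig from (contig, position, depth) records.

    Positions missing from the input are treated as zero depth, so the summed
    depth is divided by the full contig length.
    """
    totals = defaultdict(int)
    for line in depth_lines:
        line = line.strip()
        if not line:
            continue
        contig, _position, depth = line.split("\t")[:3]
        totals[contig] += int(depth)
    return {contig: totals[contig] / length
            for contig, length in contig_lengths.items() if length > 0}


# Toy per-base depth records and contig lengths (illustrative values only).
depth_records = ["c1\t1\t4", "c1\t2\t6", "c2\t1\t3"]
lengths = {"c1": 4, "c2": 3, "c3": 5}
profile = mean_coverage(depth_records, lengths)
print(profile)
```

Running this once per sample and collecting the results gives one coverage column per sample.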

Alignment-Free Coverage Estimation with Fairy

For large-scale studies, the Fairy tool provides a k-mer-based approximation that dramatically reduces computation time while maintaining accuracy [13].

Materials:

- Assembled contigs (FASTA format)

- Raw sequencing reads from multiple samples (FASTQ format)

- Fairy software (https://github.com/bluenote-1577/fairy)

Protocol:

- Build Fairy indices for each sample.

- Compute coverage profiles.

The output format is compatible with major binners including MetaBAT2, MaxBin2, and SemiBin2 [13].

k-mer Frequency Calculation

Materials:

- Assembled contigs (FASTA format)

- Bioinformatics tools: Jellyfish, DSK, or integrated functions within binning tools

Protocol:

- Count k-mers across all contigs.

- Generate k-mer frequency matrices.

Most binning tools automatically calculate k-mer frequencies from contig sequences, making manual computation optional [5].

Binning Execution

Materials:

- Coverage profiles (from the read mapping-based or Fairy protocol above)

- Assembled contigs (FASTA format)

- Binning software: COMEBin, MetaBAT2, VAMB, or SemiBin2

Protocol:

- Contig filtering: Remove contigs shorter than 1,500-2,500 bp to reduce noise [12].

- Execute binning with the selected tool.
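The contig filtering step can be sketched with a small FASTA parser. This is a minimal illustration that works on an iterable of lines (for example an open file handle); the function names and the toy records are hypothetical, and many binners apply an equivalent length cutoff internally.

```python
def read_fasta(lines):
    """Yield (header, sequence) pairs from FASTA-formatted lines."""
    header, chunks = None, []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if header is not None:
                yield header, "".join(chunks)
            header, chunks = line[1:], []
        else:
            chunks.append(line)
    if header is not None:
        yield header, "".join(chunks)


def filter_contigs(lines, min_length=1500):
    """Keep only contigs whose length is at least min_length bp."""
    return [(name, seq) for name, seq in read_fasta(lines)
            if len(seq) >= min_length]


# Toy FASTA content; sequences are wrapped across lines as in real files.
fasta = [">a", "A" * 1000, "A" * 600, ">b", "C" * 1499, ">c", "G" * 2500]
kept = filter_contigs(fasta, min_length=1500)
print([name for name, _ in kept])
```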

Workflow Visualization

The following diagram illustrates the integrated computational workflow for metagenomic binning using k-mer frequencies and coverage profiles:

Figure 1: Metagenomic binning workflow integrating k-mer and coverage features.

Performance Benchmarking

Recent comprehensive evaluations of 13 binning tools across multiple sequencing platforms and binning modes provide quantitative performance data [7].

Table 1: Top-performing binners across data-binning combinations

| Data-Binning Combination | Top Performing Tools | Key Advantages |
|---|---|---|
| Short-read co-assembly | Binny, COMEBin, MetaBinner | Optimized for co-abundance signals in complex communities |
| Short-read multi-sample | COMEBin, VAMB, MetaBAT 2 | Superior MAG recovery using cross-sample coverage patterns |
| Long-read single-sample | SemiBin2, COMEBin, MetaDecoder | Effective handling of long-read error profiles |
| Long-read multi-sample | COMEBin, MetaBinner, VAMB | Leverages long-range information with abundance patterns |
| Hybrid data multi-sample | COMEBin, MetaBinner, VAMB | Integrates short-read accuracy with long-range connectivity |

Table 2: Quantitative recovery of near-complete MAGs (>90% completeness, <5% contamination) in marine dataset (30 samples) [7]

| Binning Mode | Short-Read Data | Long-Read Data | Hybrid Data |
|---|---|---|---|
| Single-sample | 104 MAGs | 123 MAGs | 118 MAGs |
| Multi-sample | 306 MAGs | 191 MAGs | 149 MAGs |
| Improvement | +194% | +55% | +26% |
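The improvement row follows directly from the MAG counts in the table: improvement = (multi - single) / single × 100. A short sketch of that arithmetic, using only the counts reported above (the function name is hypothetical):

```python
def percent_improvement(single: int, multi: int) -> float:
    """Relative gain of multi-sample over single-sample binning, in percent."""
    return (multi - single) / single * 100


# Near-complete MAG counts from the table: (single-sample, multi-sample).
nc_mags = {
    "short-read": (104, 306),
    "long-read": (123, 191),
    "hybrid": (118, 149),
}

for data_type, (single, multi) in nc_mags.items():
    print(f"{data_type}: +{percent_improvement(single, multi):.0f}%")
```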

The Scientist's Toolkit

Table 3: Essential research reagents and computational tools

| Category | Item | Function | Examples/Formats |
|---|---|---|---|
| Data Input | Metagenomic Reads | Raw sequencing data for assembly and coverage | FASTQ files (Illumina, PacBio, Nanopore) |
| | Assembled Contigs | DNA fragments for binning analysis | FASTA format (>1,500 bp recommended) |
| Software Tools | Read Mapper | Aligns reads to contigs for coverage calculation | BWA, Bowtie2, minimap2 |
| | k-mer Counter | Calculates k-mer frequency distributions | Jellyfish, DSK |
| | Coverage Calculator | Generates coverage profiles across samples | CoverM, Fairy, jgi_summarize_bam_contig_depths |
| | Binning Algorithm | Groups contigs into MAGs using features | COMEBin, MetaBAT2, VAMB, SemiBin2 |
| | Quality Assessor | Evaluates completeness and contamination of MAGs | CheckM2 |
| Computational | Multi-sample Coverage | Enables abundance-based binning improvement | BAM files or Fairy indices from multiple samples |
| | Reference Databases | Provides taxonomic and functional context | GTDB, NCBI, KEGG, eggNOG |

Applications in Drug Discovery

The application of k-mer and coverage-based binning has significant implications for pharmaceutical research and therapeutic development:

Antibiotic Resistance Tracking: Multi-sample binning identifies 22-30% more potential antibiotic resistance gene hosts compared to single-sample approaches, enabling better tracking of resistance dissemination [7].

Natural Product Discovery: Binning recovers near-complete genomes containing biosynthetic gene clusters (BGCs) for novel antibiotic candidates. Multi-sample binning identifies 24-54% more potential BGCs from near-complete strains [7].

Pathogen Characterization: High-quality MAGs enable identification of potential pathogenic antibiotic-resistant bacteria (PARB). Advanced methods like COMEBin increase PARB identification by 33-75% compared to established tools [10].

Microbiome Therapeutics: Strain-resolved genomes facilitate understanding of microbial community dynamics in response to therapeutic interventions, supporting microbiome-based therapeutic development.

k-mer frequency and coverage profile analysis represents a powerful combination for metagenomic binning, each compensating for the limitations of the other. While k-mer frequencies provide stable taxonomic signatures, coverage profiles enable separation of genomes with similar composition but different abundance patterns. The integration of these features through modern computational approaches, particularly deep learning and multi-view representation learning, has significantly advanced genome recovery from complex microbial communities.

For pharmaceutical researchers, these methods enable more comprehensive mining of microbial diversity for drug discovery targets, particularly when applied to multi-sample datasets that capture abundance variation across conditions. As sequencing technologies evolve and computational methods mature, feature-based binning will continue to expand our access to the microbial dark matter, opening new avenues for therapeutic development.

Sequencing technologies have revolutionized biological research and clinical diagnostics, providing unprecedented insights into genomes, transcriptomes, and epigenomes. These technologies have evolved from early sequencing methods to today's platforms, which can be broadly categorized into short-read, long-read, and hybrid approaches [14]. In metagenomic binning, the choice of sequencing technology directly influences the quality, contiguity, and completeness of recovered metagenome-assembled genomes (MAGs) [15] [7]. This application note provides an overview of these sequencing methodologies, their performance characteristics, and detailed protocols for their application in metagenomic studies, focusing on their impact on downstream binning and genome resolution.

Sequencing platforms differ fundamentally in their chemistry, read lengths, error profiles, and applications. Understanding these differences is crucial for selecting the appropriate technology for metagenomic binning projects, where the goal is to reconstruct high-quality genomes from complex microbial communities.

Table 1: Comparison of Major Sequencing Platforms

| Platform | Read Length | Accuracy | Throughput | Key Applications in Metagenomics |
|---|---|---|---|---|
| Illumina | 50-300 bp [16] [17] | >99.9% [18] | 16-3000 Gb per flow cell [18] | High-resolution SNP detection, microbial diversity, transcriptomics [19] [17] |
| PacBio HiFi | 10-25 kb [18] [20] | >99.9% (Q30) [14] | 15-35 Gb per SMRT Cell [18] | Closed genome assembly, repetitive region resolution, structural variant detection [14] [20] |
| Oxford Nanopore | 10-100+ kb [18] [14] | 87-98% (up to Q20 with latest chemistry) [18] [14] | 2-180 Gb per flow cell [18] | Real-time pathogen detection, epigenetic marker identification, complex region sequencing [19] [14] |

Table 2: Impact of Sequencing Technology on Metagenomic Binning Outcomes

| Sequencing Approach | MQ MAGs Recovery* | NC MAGs Recovery* | HQ MAGs Recovery* | Advantages for Binning |
|---|---|---|---|---|
| Short-read only | 550-1328 [7] | 104-531 [7] | 30-34 [7] | Cost-effective for large cohorts, high base accuracy for polishing [20] |
| Long-read only | 796-1196 [7] | 123-191 [7] | 104-163 [7] | Improved contiguity, fewer collapsed repeats, better SV detection [20] |
| Hybrid Approaches | Superior to single-sample binning [7] | Superior to single-sample binning [7] | Superior to single-sample binning [7] | Combines accuracy with structural resolution, optimal cost-to-quality ratio [21] [20] |

\*Values represent ranges from benchmarking studies on marine datasets with 30 samples. MQ: Moderate Quality (completeness >50%, contamination <10%); NC: Near-Complete (completeness >90%, contamination <5%); HQ: High Quality (NC criteria plus presence of rRNA genes and tRNAs) [7].

Workflow and Experimental Protocols

Wet Laboratory Procedures

Sample Preparation and Nucleic Acid Extraction

Initiate the process with careful sample collection from the relevant environment (human gut, marine, soil, etc.). For metagenomic studies, maintain consistent collection conditions to preserve community structure. Extract high-molecular-weight DNA using kits designed to minimize shearing, such as the DNeasy PowerSoil Pro Kit for soil samples or MagAttract HMW DNA Kit for stool samples [15]. Assess DNA quality using spectrophotometry (A260/A280 ratio of ~1.8) and fluorometry, and confirm integrity via pulsed-field gel electrophoresis or Fragment Analyzer systems.

Library Preparation Protocols

Short-read Library Preparation (Illumina):

- Fragmentation: Fragment 1-100 ng DNA to 200-500 bp using acoustic shearing or enzymatic fragmentation.

- End Repair and A-tailing: Convert fragmented DNA to blunt ends using T4 DNA polymerase and Klenow fragment, then add a single A-base to 3' ends using Klenow exo-.

- Adapter Ligation: Ligate indexed adapters with T-overhangs using T4 DNA ligase.

- Library Amplification: Enrich adapter-ligated fragments with 4-8 cycles of PCR using high-fidelity DNA polymerase.

- Quality Control: Validate library size distribution using Bioanalyzer or TapeStation and quantify by qPCR [16] [17].

Long-read Library Preparation (PacBio):

- DNA Repair and Size Selection: Repair damaged DNA using PreCR DNA repair mix and size-select >10 kb fragments using BluePippin or SageELF systems.

- SMRTbell Library Construction: Ligate SMRTbell adapters to both ends of size-selected DNA using T4 DNA ligase, creating circular templates.

- Purification: Remove unligated adapters and linear fragments with exonuclease treatment.

- Primer Annealing and Polymerase Binding: Anneal sequencing primers to the SMRTbell template and bind polymerase enzyme.

- Quality Control: Assess library quality and quantity using Qubit and Fragment Analyzer [18] [14].

Long-read Library Preparation (Oxford Nanopore):

- DNA Repair and End-Prep: Repair DNA damage using NEBNext FFPE DNA Repair mix and prepare ends for adapter ligation using NEBNext Ultra II End Repair/dA-tailing Module.

- Adapter Ligation: Ligate native barcodes and sequencing adapters using NEB Blunt/TA Ligase Master Mix.

- Purification: Clean up ligation reaction using AMPure XP beads.

- Quality Control: Assess library quality using Qubit and Agilent TapeStation [18] [14].

Sequencing Run Setup

For Illumina platforms, normalize libraries to 4 nM and denature with 0.2 N NaOH before dilution to the loading concentration recommended for the instrument (the 1.2-1.8 pM range applies to NextSeq 500/550 systems; MiSeq runs are typically loaded at higher concentrations, roughly 6-20 pM depending on reagent kit). For PacBio systems, dilute SMRTbell library to 0.5-1.0 nM and anneal sequencing primer before polymerase binding. For Nanopore, load 100-200 fmol of library onto primed R9.4.1 or R10.3 flow cells following manufacturer's instructions.

Bioinformatic Analysis Pipelines

The computational workflow for processing sequencing data involves multiple steps to convert raw data into assembled genomes suitable for downstream analysis.

Quality Control and Preprocessing

Short-read Data:

- Perform adapter trimming and quality filtering using Trimmomatic (for example, SLIDINGWINDOW:4:20 and MINLEN:50) or fastp with comparable settings.

- Remove host-derived reads (if applicable) by alignment to host reference genome using BWA or Bowtie2.

- Assess quality metrics with FastQC and MultiQC [19] [15].

Long-read Data:

- Conduct quality filtering and adapter removal using instrument-specific tools (Guppy for Nanopore, ccs for PacBio HiFi).

- Remove low-quality reads (Q-score <7 for Nanopore) and short reads (length <1000 bp).

- For Nanopore data, perform error correction using Canu or NextDenovo [18] [14].
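The long-read filtering thresholds above can be sketched as a simple predicate. This example assumes Phred+33 quality strings and defines a read's Q-score as the Phred value of its mean error probability, which is stricter than averaging the per-base Q values; whether a given filtering tool uses exactly this definition is an assumption, and the function names are hypothetical.

```python
from math import log10


def mean_qscore(quality_string: str, offset: int = 33) -> float:
    """Phred score of the mean per-base error probability of a read."""
    if not quality_string:
        raise ValueError("empty quality string")
    error_probs = [10 ** (-(ord(ch) - offset) / 10) for ch in quality_string]
    return -10 * log10(sum(error_probs) / len(error_probs))


def keep_read(seq: str, qual: str, min_q: float = 7.0, min_len: int = 1000) -> bool:
    """Apply the length and quality cutoffs described in the text."""
    return len(seq) >= min_len and mean_qscore(qual) >= min_q


good = keep_read("A" * 1200, "5" * 1200)                # Q20 throughout
short = keep_read("A" * 500, "5" * 500)                 # too short
mixed = keep_read("A" * 1200, "5" * 600 + "#" * 600)    # half Q20, half Q2
print(good, short, mixed)
```

The mixed read shows why the averaging method matters: its per-base Q values average 11, but its mean error probability corresponds to roughly Q5, so it is rejected.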

Assembly and Binning Protocols

Short-read Assembly: Assemble quality-filtered reads using metaSPAdes with k-mer sizes 21,33,55,77,99,127 or MEGAHIT with a minimum contig length of 1000 bp.

Long-read Assembly: Assemble long reads using Flye (in metagenome mode, `--meta`) for Nanopore data or hifiasm for PacBio HiFi data.

Hybrid Assembly: Combine short and long reads using Opera-MS or MaSuRCA.

Metagenomic Binning: Execute binning on assembled contigs using COMEBin for short-read data, SemiBin2 for long-read data, or MetaBAT 2 for hybrid approaches.

Bin Refinement and Quality Assessment

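Refine initial bins using the MetaWRAP bin_refinement module.

Assess the quality of refined bins using CheckM2, which predicts completeness and contamination with machine learning models rather than the lineage-specific marker workflow of the original CheckM.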

The Scientist's Toolkit

Table 3: Essential Research Reagents and Computational Tools

| Category | Item | Function | Example Products/Tools |

|---|---|---|---|

| Wet Lab Reagents | DNA Extraction Kits | High-molecular-weight DNA preservation | DNeasy PowerSoil Pro, MagAttract HMW DNA Kit |

| Wet Lab Reagents | Library Preparation Kits | Platform-specific library construction | Illumina DNA Prep, SMRTbell Prep Kit 3.0, Ligation Sequencing Kit |

| Wet Lab Reagents | Quality Control Reagents | Nucleic acid quantification and quality assessment | Qubit dsDNA HS Assay, Agilent High Sensitivity DNA Kit |

| Computational Tools | Quality Control | Raw data processing and filtering | FastQC, MultiQC, fastp, NanoPlot |

| Computational Tools | Assembly | Contig construction from reads | metaSPAdes, MEGAHIT, Flye, hifiasm |

| Computational Tools | Binning | MAG reconstruction from contigs | COMEBin, MetaBinner, SemiBin2, MetaBAT 2 |

| Computational Tools | Refinement | Bin quality improvement | MetaWRAP, DAS Tool, MAGScoT |

| Computational Tools | Quality Assessment | MAG completeness and contamination evaluation | CheckM2, BUSCO |

Advanced Applications in Metagenomic Research

Multi-Sample Binning Strategies

Multi-sample binning leverages co-abundance patterns across multiple metagenomic samples to significantly improve binning quality and recovery rates. This approach calculates coverage information across samples, enabling more accurate contig clustering based on abundance profiles [7]. Implementation requires coordinated analysis of multiple datasets from similar environments or time-series samples.

Protocol for Multi-Sample Binning:

- Perform individual assembly of each sample or co-assembly of all samples.

- Map reads from all samples back to assembled contigs using Bowtie2 or minimap2.

- Generate coverage profiles for each contig across all samples.

- Execute multi-sample binning using tools like VAMB or MetaBAT 2 with the coverage table.

- Refine resulting bins to remove cross-sample contaminants.
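The coverage-profile step in the protocol above can be sketched in a few lines of Python. The example assumes that per-sample mean depths have already been computed from the read mappings; the function names and the input layout (sample name mapped to a contig-depth dictionary) are hypothetical choices for illustration, not the input format of any particular binner.

```python
import math


def build_coverage_table(per_sample_depth: dict) -> tuple:
    """Arrange {sample: {contig: mean_depth}} into one depth vector per contig.

    Contigs absent from a sample receive a depth of 0.0.
    """
    samples = sorted(per_sample_depth)
    contigs = sorted({c for depths in per_sample_depth.values() for c in depths})
    table = {
        contig: [per_sample_depth[s].get(contig, 0.0) for s in samples]
        for contig in contigs
    }
    return samples, table


def pearson(x: list, y: list) -> float:
    """Pearson correlation of two depth profiles (0.0 if either is constant)."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)
```

Contigs that originate from the same genome rise and fall together across samples, so their profiles correlate strongly, whereas contigs from different organisms generally do not. This co-abundance signal is what multi-sample binners exploit.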

Benchmarking studies report that multi-sample binning improves MAG recovery over single-sample binning by an average of 125%, 54%, and 61% for short-read, long-read, and hybrid data, respectively, when the gains are averaged across MAG quality tiers in a marine benchmark dataset [7].

Hybrid Sequencing for Complex Microbial Communities

Hybrid approaches combine short-read accuracy with long-read contiguity to overcome the limitations of either technology alone. This is particularly valuable for resolving complex microbial communities with high strain diversity or repetitive genomic regions [21] [20].

Implementation Framework:

- Experimental Design: Sequence each sample with both short-read (30x coverage) and long-read (15x coverage) platforms.

- Data Integration: Use hybrid assemblers like Opera-MS or MaSuRCA that natively support both data types.

- Error Correction: Polish long-read assemblies with high-accuracy short reads using Pilon or NextPolish.

- Validation: Assess assembly quality using consensus metrics (QV score >40), BUSCO completeness (>90%), and contamination rates (<5%).

Recent research demonstrates that shallow hybrid sequencing (15x ONT + 15x Illumina) combined with retrained DeepVariant models can match or surpass the germline variant detection accuracy of state-of-the-art single-technology methods, potentially reducing overall sequencing costs while enabling detection of large structural variations [21].

Emerging Trends and Future Directions

The field of sequencing technologies continues to evolve rapidly, with several promising developments on the horizon. Third-generation sequencing platforms are achieving higher accuracy through innovations such as PacBio's HiFi reads and Nanopore's duplex sequencing [14]. The integration of artificial intelligence and deep learning in base calling and variant detection is improving the accuracy of long-read technologies, with tools like DeepVariant now supporting hybrid data inputs [21]. Portable sequencing devices, particularly Nanopore's MinION, are enabling real-time metagenomic analysis in field and clinical settings, with applications in outbreak investigation and point-of-care diagnostics [14]. Single-cell metagenomics is emerging as a powerful complement to bulk sequencing, allowing resolution of individual microbial cells and rare community members without cultivation biases [15]. Finally, the integration of multi-omics data including metatranscriptomics, metaproteomics, and metabolomics with metagenomic sequencing provides a more comprehensive understanding of microbial community function and host-microbe interactions [19] [15].

As these technologies continue to mature and decrease in cost, their application in metagenomic studies will further expand our understanding of microbial diversity, function, and ecology across diverse environments from the human gut to global ecosystems.

Metagenomic binning is a fundamental computational process in microbiome research that involves grouping assembled genomic sequences (contigs) into metagenome-assembled genomes (MAGs) based on their sequence composition and abundance profiles [22] [5]. This process is crucial for reconstructing individual genomes from complex microbial communities without the need for cultivation. The performance and outcome of binning are significantly influenced by the chosen strategy for handling multiple sequencing samples. Researchers primarily employ three distinct binning modes: co-assembly, single-sample, and multi-sample binning, each with characteristic workflows and applications [22] [10].

The selection of an appropriate binning mode represents a critical methodological decision that directly impacts the quality and completeness of recovered MAGs, influencing subsequent biological interpretations. Benchmarking studies demonstrate that multi-sample binning exhibits optimal performance across short-read, long-read, and hybrid sequencing data, outperforming other modes in identifying near-complete strains containing potential biosynthetic gene clusters [22]. Understanding the technical nuances, advantages, and limitations of each approach is essential for designing effective metagenomic studies, particularly in pharmaceutical and clinical research where genome completeness directly impacts downstream analyses of antibiotic resistance genes and virulence factors [22] [23].

Technical Specifications of Binning Modes

Definition and Workflow Characteristics

Table 1: Technical Specifications of Metagenomic Binning Modes

| Binning Mode | Assembly Approach | Coverage Information | Computational Demand | Primary Applications |

|---|---|---|---|---|

| Co-assembly | All samples pooled and assembled together | Calculated across samples | High memory requirements for assembly | Leveraging co-abundance information across samples |

| Single-Sample | Each sample assembled independently | Calculated within single sample | Moderate, easily parallelized | Sample-specific variation analysis |

| Multi-Sample | Each sample assembled independently | Calculated across multiple samples | Time-consuming but scalable | Recovery of higher-quality MAGs |

Co-assembly binning initially combines all sequencing samples before assembly, with the resulting contigs binned using coverage information calculated across all samples [22]. This approach can leverage co-abundance information across the entire dataset but may result in inter-sample chimeric contigs and cannot retain sample-specific variations [22]. The assembly process in co-assembly mode requires substantial computational resources, particularly memory, as the entire metagenomic dataset must be processed simultaneously.

Single-sample binning involves assembling and binning each sample completely independently, without integrating information from other samples in the project [22]. While this approach preserves sample-specific characteristics and is computationally straightforward to parallelize, it often results in fragmented MAGs with lower completeness compared to multi-sample approaches due to limited sequencing depth per sample.

Multi-sample binning employs individual sample assemblies but calculates coverage information across all available samples during the binning process [22]. Although this method is more time-consuming than single-sample binning, it typically recovers higher-quality MAGs by exploiting abundance patterns across multiple conditions or time points [22]. The cross-sample coverage information provides a powerful signal for grouping contigs from the same genome, even when those contigs are only present in subsets of samples.

Comparative Performance Across Data Types

Table 2: Performance Comparison of Binning Modes Across Data Types

| Data Type | Best Performing Mode | Key Advantages | Recommended Binners |

|---|---|---|---|

| Short-read | Multi-sample | 125% average improvement in MAG recovery vs single-sample (marine data, averaged across quality tiers) | COMEBin, Binny, MetaBinner |

| Long-read | Multi-sample | 54% average improvement in MAG recovery vs single-sample (marine data, averaged across quality tiers) | MetaBinner, COMEBin, SemiBin 2 |

| Hybrid | Multi-sample | 61% average improvement in MAG recovery vs single-sample (marine data, averaged across quality tiers) | COMEBin, Binny, MetaBinner |

| Any (co-assembled samples) | Co-assembly (when appropriate) | Effective for closely related communities | Binny, SemiBin 2, MetaBinner |

Benchmarking studies across diverse datasets reveal that multi-sample binning consistently outperforms other approaches regardless of sequencing technology. For marine short-read data, multi-sample binning improves MAG recovery over single-sample binning by an average of 125% across the moderate or higher quality (MQ), near-complete (NC), and high-quality (HQ) tiers [22]. Similar advantages are observed for long-read data (54% average improvement) and hybrid data (61% average improvement) [22].

The superior performance of multi-sample binning extends to functional applications, with this approach demonstrating remarkable superiority in identifying potential antibiotic resistance gene hosts and near-complete strains containing potential biosynthetic gene clusters across diverse data types [22]. Multi-sample binning identified 30% more antibiotic resistance gene hosts compared to single-sample approaches in benchmark studies [22].

Experimental Protocols

Protocol for Multi-Sample Binning with Fairy

Multi-sample binning, while highly effective, traditionally requires computationally intensive all-to-all read alignments. The Fairy package provides a fast k-mer-based alignment-free method that significantly accelerates this process while maintaining accuracy [13].

Step 1: Sample Preparation and Quality Control

- Extract high-molecular-weight DNA from environmental samples using standardized kits (e.g., PowerSoil for soil samples, DNeasy Blood and Tissue for water samples) [24]

- Perform quality assessment using fluorometric quantification and fragment analysis

- Prepare sequencing libraries compatible with your platform (Illumina, PacBio, or Nanopore)

Step 2: Sequencing and Assembly

- Sequence each sample individually using your preferred technology

- Assemble each sample independently using an appropriate assembler:

  - For short-read data: MEGAHIT or metaSPAdes

  - For long-read data: metaFlye [13]

  - For hybrid data: a dedicated hybrid assembler (e.g., OPERA-MS)

- Quality filter contigs based on length (typically > 1,000 bp) and remove potential contaminants

Step 3: Fairy Coverage Calculation

- Install fairy from GitHub: https://github.com/bluenote-1577/fairy

- Process reads into k-mer hash tables for each sample.

- Compute approximate coverage for all contigs across all samples.

- Fairy uses FracMinHash to sparsely sample k-mers (approximately 1/50 k-mers) and calculates containment ANI to determine species presence (default threshold: 95%) [13]
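The FracMinHash idea mentioned in the last step can be illustrated with a short Python sketch. This is not fairy's implementation: the BLAKE2b hash, the use of forward-strand k-mers only, the function names, and the point estimate ANI ≈ C^(1/k) for a containment value C are all assumptions chosen to keep the example small.

```python
import hashlib

MAX_HASH = 2 ** 64  # size of the 64-bit hash space used below


def stable_hash(kmer: str) -> int:
    """Deterministic 64-bit hash of a k-mer (Python's hash() is salted)."""
    digest = hashlib.blake2b(kmer.encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def fracminhash_sketch(seq: str, k: int = 21, scale: int = 50) -> set:
    """Keep only k-mers whose hash falls in the lowest 1/scale of hash space."""
    seq = seq.upper()
    threshold = MAX_HASH // scale
    hashes = (stable_hash(seq[i:i + k]) for i in range(len(seq) - k + 1))
    return {h for h in hashes if h < threshold}


def containment(query: set, reference: set) -> float:
    """Fraction of the query sketch that also appears in the reference sketch."""
    if not query:
        return 0.0
    return len(query & reference) / len(query)


def containment_ani(c: float, k: int = 21) -> float:
    """Rough ANI estimate from a containment index, assuming ANI ~ c**(1/k)."""
    return c ** (1.0 / k)
```

Because the same hash threshold is applied to every sequence, a k-mer is either sampled everywhere or nowhere. Sketches of contigs and reads therefore remain directly comparable, even though only about one in fifty k-mers is retained.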

Step 4: Binning with Preferred Tool

- Supply the coverage table produced by fairy to a compatible binner.

Step 5: Quality Assessment and Refinement

- Assess MAG quality using CheckM2 for completeness and contamination estimates [22]

- Perform bin refinement using tools like MetaWRAP, DAS Tool, or MAGScoT to generate consensus bins [22]

- For MetaWRAP refinement, use its bin_refinement module.

Protocol for Evaluation of Binning Performance

Step 1: Quantitative Assessment with CheckM2

- Install CheckM2:

pip install checkm2

- Run quality assessment:

checkm2 predict --input bins_dir --output-directory checkm2_results

- Interpret results: MAGs with >50% completeness and <10% contamination meet the moderate-quality threshold, while >90% completeness and <5% contamination defines near-complete MAGs [22]
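Once a quality report is available, the tier thresholds can be applied programmatically. The sketch below assumes a tab-separated report with Name, Completeness, and Contamination columns, as in CheckM2's quality_report.tsv. It uses the MIMAG-style cut-offs quoted in this article's benchmark tables (moderate quality: >50% completeness and <10% contamination; near-complete: >90% and <5%). The function names are hypothetical.

```python
import csv
from collections import Counter


def quality_tier(completeness: float, contamination: float) -> str:
    """Assign a MAG to a quality tier from its completeness/contamination (%)."""
    if completeness > 90 and contamination < 5:
        return "near-complete"
    if completeness > 50 and contamination < 10:
        return "moderate"
    return "low"


def tier_counts(report_handle) -> Counter:
    """Count MAGs per tier from an open tab-separated quality report."""
    reader = csv.DictReader(report_handle, delimiter="\t")
    return Counter(
        quality_tier(float(row["Completeness"]), float(row["Contamination"]))
        for row in reader
    )
```

In practice the handle would come from opening the report file in the CheckM2 output directory. Note that near-complete MAGs also satisfy the moderate-quality criteria, so "moderate or higher" totals are obtained by summing both tiers.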

Step 2: Functional Annotation

- Annotate MAGs with antibiotic resistance genes using tools like DeepARG or CARD

- Identify biosynthetic gene clusters with antiSMASH or PRISM

- Perform taxonomic classification with GTDB-Tk

Step 3: Comparative Analysis

- Compare binning modes by counting recovered HQ MAGs across approaches

- Assess strain-level diversity using dRep dereplication

- Evaluate functional capacity through KEGG pathway completeness

Comparative Workflow of Three Binning Modes

The Scientist's Toolkit

Essential Research Reagent Solutions

Table 3: Key Research Reagents and Computational Tools for Metagenomic Binning

| Category | Item | Specification/Version | Primary Function |

|---|---|---|---|

| DNA Extraction | PowerSoil Kit (Qiagen) | Commercial kit | Metagenomic DNA extraction from soil samples |

| DNA Extraction | DNeasy Blood and Tissue Kit (Qiagen) | Commercial kit | Metagenomic DNA extraction from water samples |

| Assembly | MEGAHIT | v1.2.9 | Short-read metagenomic assembly |

| Assembly | metaFlye | v2.9+ | Long-read metagenomic assembly |

| Binning | MetaBAT 2 | v2.15 | Efficient binning with tetranucleotide frequency |

| Binning | COMEBin | Latest | Contrastive multi-view representation learning |

| Binning | SemiBin 2 | v2.0+ | Semi-supervised deep learning binning |

| Coverage | Fairy | Latest | Fast approximate multi-sample coverage |

| Quality | CheckM2 | Latest | MAG quality assessment |

| Refinement | MetaWRAP | v1.3+ | Bin refinement and consensus generation |

Implementation Considerations

Computational Resource Requirements

Metagenomic binning requires substantial computational resources, particularly for large multi-sample projects. For a typical 50-sample soil metagenome study with short-read data, researchers should allocate:

- Storage: 1-2 TB for raw reads, assemblies, and intermediate files

- Memory: 128-512 GB RAM for assembly and binning processes

- Processing: Multi-core systems (32+ cores) for parallel processing

Best Practices for Tool Selection

- For projects with limited computational resources: MetaBAT 2, VAMB, and MetaDecoder offer excellent scalability [22]

- For maximum binning quality: COMEBin and MetaBinner consistently rank as top performers across multiple data-binning combinations [22] [10]

- For hybrid or long-read data: SemiBin 2 and MetaBinner provide specialized capabilities for complex data types [22]

Advanced Applications in Pharmaceutical Development

Metagenomic binning plays a crucial role in pharmaceutical development by enabling the discovery of novel bioactive compounds and understanding drug-microbiome interactions. High-quality MAGs recovered through advanced binning approaches facilitate several key applications:

Antibiotic Resistance Monitoring

Multi-sample binning demonstrates remarkable superiority in identifying potential antibiotic resistance gene hosts, recovering 30% more hosts compared to single-sample approaches [22]. This capability is critical for tracking the spread of antimicrobial resistance (AMR) in clinical and environmental settings. The CDC estimates 2.8 million drug-resistant infections occur annually in the United States, highlighting the urgent need for improved AMR surveillance [23].

Drug Discovery from Unculturable Microbes

Metagenomic approaches allow researchers to access the genetic potential of the approximately 99% of microorganisms that cannot be cultured using traditional methods [24]. Efforts to reach this uncultured majority have already yielded new therapeutic leads such as teixobactin, an antibiotic produced by a previously undescribed soil microorganism that shows efficacy against methicillin-resistant Staphylococcus aureus (MRSA) [23].

Microbiome-Drug Interactions

Binning-derived MAGs enable researchers to understand how microbial communities influence drug efficacy and metabolism. For example, studies have revealed that the gut microbe Enterococcus durans can enhance reactive oxygen species-based treatments for colorectal cancer, while Eggerthella lenta can metabolize digoxin, rendering the heart medication ineffective [23].

Pharmaceutical Applications of Metagenomic Binning

Metagenomic binning represents a critical computational step in unlocking the genetic potential of microbial communities. The selection of appropriate binning modes—co-assembly, single-sample, or multi-sample—significantly impacts the quality and completeness of recovered MAGs, with multi-sample approaches consistently demonstrating superior performance across diverse sequencing technologies and sample types [22].

For pharmaceutical researchers and drug development professionals, implementing optimized multi-sample binning protocols with tools like COMEBin, MetaBinner, and Fairy enables more comprehensive discovery of novel therapeutic compounds, enhanced monitoring of antibiotic resistance dissemination, and deeper understanding of drug-microbiome interactions [22] [10] [13]. As metagenomic methodologies continue to advance, the integration of these binning strategies will play an increasingly vital role in translating microbial diversity into pharmaceutical innovation.

The Critical Role of Binning in Exploring Microbial Dark Matter

Microbial Dark Matter (MDM) represents the vast fraction of microorganisms in environmental samples that cannot be cultivated using standard laboratory techniques, and thus have not been characterized [25] [26]. It is estimated that 60-99% of microbial diversity falls into this category, comprising potentially >1,500 bacterial phyla, the majority of which are known only as "candidate phyla" [25] [26]. These uncultured microbes play crucial but unexplored roles in ecosystem processes, including biogeochemical cycling, and are a potential source of novel genes and metabolic pathways [27] [25].

Metagenomic binning is a cornerstone computational method that enables researchers to investigate this MDM. It is a culture-free approach that groups, or "bins," assembled DNA sequences (contigs) from a metagenome into clusters representing individual taxonomic groups, such as species or genera [7] [28]. This process allows for the recovery of Metagenome-Assembled Genomes (MAGs), effectively drafting genomes of uncultured organisms directly from environmental sequence data [7]. Without binning, the sequences belonging to these unknown organisms often remain as unclassified data points, obscuring a true picture of microbial diversity and function [26].

Core Features and Computational Methods in Metagenomic Binning

The process of binning is fundamentally a clustering problem that relies on distinguishing features inherent to sequences from the same genome. The table below summarizes the primary features used by binning tools.

Table 1: Key Features Used in Metagenomic Binning

| Feature Category | Description | Examples of Use |

|---|---|---|

| Nucleotide Composition | Uses frequencies of short DNA sequences (k-mers). Assumes each genome has a unique sequence "signature." | Tetranucleotide (4-mer) frequencies are the most popular, as used by CONCOCT, MaxBin 2, and MetaBAT 2 [7] [28]. |

| Sequence Abundance | Leverages the coverage (read depth) of contigs. Sequences from the same organism should have similar abundance across samples. | Essential for differentiating closely related strains; used by MaxBin 2 and VAMB [7] [28]. |

| Graph Structures & Biological Info | Utilizes assembly graphs, chromosome conformation, and the presence of marker genes. | SemiBin uses must-link and cannot-link constraints; Hi-C data helps in phasing haplotypes and scaffolding [7] [29] [30]. |
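As an illustration of the nucleotide-composition feature in the table above, the following Python sketch computes a tetranucleotide frequency (TNF) vector. It merges each 4-mer with its reverse complement, giving 136 canonical tetramers. This is a generic formulation, not the exact feature vector of any specific binner, and the function names are hypothetical.

```python
from itertools import product

COMPLEMENT = str.maketrans("ACGT", "TGCA")
VALID_BASES = set("ACGT")


def canonical(kmer: str) -> str:
    """Return the lexicographically smaller of a k-mer and its reverse complement."""
    reverse_complement = kmer.translate(COMPLEMENT)[::-1]
    return min(kmer, reverse_complement)


# 256 tetramers collapse to 136 strand-independent canonical forms.
CANONICAL_4MERS = sorted({canonical("".join(p)) for p in product("ACGT", repeat=4)})


def tetranucleotide_frequencies(seq: str) -> list:
    """Strand-independent 4-mer frequency vector; windows with N are skipped."""
    counts = dict.fromkeys(CANONICAL_4MERS, 0)
    seq = seq.upper()
    total = 0
    for i in range(len(seq) - 3):
        kmer = seq[i:i + 4]
        if set(kmer) <= VALID_BASES:
            counts[canonical(kmer)] += 1
            total += 1
    if total == 0:
        return [0.0] * len(CANONICAL_4MERS)
    return [counts[k] / total for k in CANONICAL_4MERS]
```

Collapsing reverse complements makes the vector identical whichever strand a contig was assembled on, which is why composition-based binners generally work with canonical k-mers.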

Modern binning tools increasingly use machine learning and deep learning models to integrate these features. For instance:

- VAMB employs a variational autoencoder to integrate tetranucleotide frequency and abundance data into a robust latent representation for clustering [7].

- COMEBin uses contrastive learning on multiple data-augmented views of each contig to produce high-quality embeddings [7].

- SemiBin applies semi-supervised learning with siamese neural networks to leverage biological constraints between contigs [7].

The performance of binning tools varies significantly based on the type of sequencing data and the binning strategy employed. A 2025 benchmark of 13 binning tools across seven different "data-binning combinations" provides critical insights for selecting the right tool [7].

Binning is performed in three primary modes:

- Single-sample binning: Assembly and binning are performed on individual samples.

- Co-assembly binning: All samples are assembled together, and the resulting contigs are binned using coverage information across samples.

- Multi-sample binning: Samples are assembled individually, but coverage information across all samples is used during the binning process [7].

Table 2: Performance of Binning Modes in Recovering High-Quality MAGs from a Marine Dataset (30 Samples)

| Binning Mode | Data Type | Moderate Quality MAGs* (Completeness >50%, Contamination <10%) | Near-Complete MAGs (Completeness >90%, Contamination <5%) | High-Quality MAGs (Near-Complete + rRNAs & tRNAs) |

|---|---|---|---|---|

| Multi-sample | Short-read | 1101 | 306 | 62 |

| Single-sample | Short-read | 550 | 104 | 34 |

| Multi-sample | Long-read | 1196 | 191 | 163 |

| Single-sample | Long-read | 796 | 123 | 104 |

| Multi-sample | Hybrid | Information missing in source | Information missing in source | Information missing in source |

| Single-sample | Hybrid | Information missing in source | Information missing in source | Information missing in source |

* Also referred to as "moderate or higher" quality (MQ) MAGs [7].

The data demonstrates that multi-sample binning substantially outperforms single-sample binning, particularly as the number of samples increases. In the marine short-read dataset, multi-sample binning recovered 100% more moderate-quality MAGs and 194% more near-complete MAGs [7]. This superiority extends to functional potential, with multi-sample binning identifying 30% more potential antibiotic resistance gene (ARG) hosts and 54% more potential biosynthetic gene clusters (BGCs) from near-complete strains in short-read data [7].
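The percentage gains can be recomputed directly from the short-read and long-read rows of Table 2. The short Python sketch below does this arithmetic; the dictionary layout and function names are illustrative only.

```python
from statistics import mean

# (MQ, NC, HQ) MAG counts copied from Table 2 (marine dataset, 30 samples).
TABLE_2 = {
    "short-read": {"multi": (1101, 306, 62), "single": (550, 104, 34)},
    "long-read": {"multi": (1196, 191, 163), "single": (796, 123, 104)},
}


def percent_gain(multi: int, single: int) -> float:
    """Relative improvement of multi-sample over single-sample binning, in %."""
    return 100 * (multi - single) / single


def tier_gains(data_type: str) -> list:
    """Per-tier gains in the order MQ, NC, HQ for one data type."""
    row = TABLE_2[data_type]
    return [percent_gain(m, s) for m, s in zip(row["multi"], row["single"])]


def average_gain(data_type: str) -> float:
    """Mean gain across the three quality tiers."""
    return mean(tier_gains(data_type))
```

For short-read data the per-tier gains come out at roughly 100% (MQ), 194% (NC), and 82% (HQ), which average to about 125%. For long-read data the three gains are roughly 50%, 55%, and 57%, averaging about 54%. These averages correspond to the headline improvement figures reported for the benchmark.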

Table 3: Top-Performing Binning Tools Across Different Data-Binning Combinations

| Data-Binning Combination | Top-Performing Tools (In Order of Performance) |

|---|---|

| Short-read & Multi-sample | 1. COMEBin, 2. MetaBinner, 3. VAMB |

| Short-read & Co-assembly | 1. Binny, 2. COMEBin, 3. MetaBinner |

| Long-read & Multi-sample | 1. MetaBinner, 2. COMEBin, 3. SemiBin 2 |

| Long-read & Single-sample | 1. COMEBin, 2. SemiBin 2, 3. MetaBinner |

| Hybrid & Multi-sample | 1. MetaBinner, 2. COMEBin, 3. SemiBin 2 |

| Hybrid & Single-sample | 1. COMEBin, 2. MetaBinner, 3. VAMB |

Based on benchmark results from [7]. Tools like MetaBAT 2, VAMB, and MetaDecoder were also highlighted for their excellent scalability.

Application Notes: A Protocol for Investigating Microbial Dark Matter

The following protocol outlines a methodology for extracting and validating genomes from Microbial Dark Matter, based on recent research [26].

Sample Collection and DNA Extraction

- Sample Diversity: Collect biomass from diverse environments to maximize the chance of discovering novel MDM. Examples include extreme environments (hypersaline lakes), engineered systems (wastewater bioreactors), and host-associated niches [27] [26].

- Replication: Process samples in triplicate to account for heterogeneity.

- DNA Extraction: Use a standardized, high-yield kit for total genomic DNA (gDNA) extraction. The integrity of the gDNA should be verified via gel electrophoresis or similar methods before sequencing [26].

Sequencing and Assembly

- Sequencing Strategy: Employ both 16S rRNA gene amplicon sequencing (e.g., targeting the V4 region with Illumina) and shotgun metagenomics. For comprehensive MAG recovery, use a combination of sequencing technologies.

- Short-read (Illumina): Provides high accuracy for gene discovery and abundance profiling [28].

- Long-read (PacBio HiFi, Oxford Nanopore): Essential for resolving repetitive regions and producing more complete contigs, which greatly improves binning accuracy [7] [30]. A benchmark study showed that HiFi sequencing produces assemblies with fewer phase switches and better resolves low-heterozygosity regions compared to Nanopore [30].

- Metagenome Assembly: Assemble quality-filtered reads using specialized metagenome assemblers like metaSPAdes for short-reads or metaFlye for long-reads [28].

Binning and MAG Refinement

- Binning Execution: Run multiple high-performing binning tools from Table 3 (e.g., COMEBin, MetaBinner) on the assembled contigs. It is recommended to use both multi-sample and single-sample binning modes if multiple samples are available.

- Bin Refinement: Use a bin refinement tool such as MetaWRAP, DAS Tool, or MAGScoT to consolidate the results from multiple binners. This step produces a final set of MAGs that is superior to those generated by any single tool [7].

- Quality Assessment: Assess the completeness and contamination of MAGs using CheckM2. Define quality tiers:

- Near-complete (NC): >90% completeness, <5% contamination.

- High-quality (HQ): NC criteria, plus the presence of 5S, 16S, and 23S rRNA genes and at least 18 tRNAs [7].

Validation and Analysis of Dark Matter Sequences

- MDMS Validation: Identify "Microbial Dark Matter Sequences" (MDMS)—16S rRNA gene sequences that do not align to reference databases. Validate their existence by specific PCR amplification and re-sequencing of the original gDNA [26].

- Phylogenetic Placement: Align the validated MDMS to a comprehensive database like the Genome Taxonomy Database (GTDB) to build phylogenetic trees. This can reveal potentially new candidate phyla and other deep-branching lineages [26].

- Functional Annotation: Annotate the refined, non-redundant MAGs for genes of interest, such as Antibiotic Resistance Genes (ARGs) and Biosynthetic Gene Clusters (BGCs), to hypothesize the ecological role of the newly discovered MDM [7] [27].

Diagram 1: MDM Investigation Workflow. The process from sample collection to functional analysis, with a quality feedback loop.

Table 4: Key Research Reagents and Computational Tools for Metagenomic Binning

| Category / Item | Function / Application | Specific Examples / Notes |

|---|---|---|

| Sequencing Technologies | | |

| Illumina Short-read | High-accuracy sequencing for abundance profiling and contig coverage calculation. | Standard for 16S amplicon and shotgun sequencing [28]. |

| PacBio HiFi Long-read | Generates long reads (>10 kb) with high accuracy (>99.9%); improves assembly continuity. | Superior for phasing and resolving complex regions compared to Nanopore in some benchmarks [7] [30]. |

| Oxford Nanopore Long-read | Portable sequencing; produces very long reads (10-100+ kb) ideal for scaffolding. | Requires polishing; higher error rate than HiFi but longer read lengths possible [30]. |

| Bioinformatics Tools | | |

| Metagenome Assemblers | Assembles raw sequencing reads into longer contigs. | metaSPAdes (short-read), metaFlye (long-read) [28]. |

| Binning Software | Clusters contigs into Metagenome-Assembled Genomes (MAGs). | COMEBin, MetaBinner, VAMB, SemiBin 2 [7]. |

| Bin Refinement Tools | Consolidates bins from multiple tools to produce superior MAGs. | MetaWRAP (best overall), MAGScoT (excellent scalability) [7]. |

| Quality Assessment | Evaluates completeness and contamination of MAGs. | CheckM2 [7]. |

| Reference Databases | | |

| Genome Taxonomy Database (GTDB) | A standardized microbial taxonomy for phylogenetic placement of MAGs and MDMS. | Critical for classifying novel lineages [26]. |

Diagram 2: The Binning Process. Contigs are characterized by composition and abundance features, which are integrated by machine learning models before final clustering into MAGs.

Metagenomic binning has proven to be an indispensable computational technique for illuminating Microbial Dark Matter, transforming unknown sequence data into draft genomes that reveal new lineages and metabolic capabilities. The continued development of sophisticated binning tools, especially those leveraging multi-sample information and deep learning, is dramatically increasing the recovery of high-quality MAGs from complex environments. By following standardized protocols and leveraging benchmarked tools, researchers can systematically explore the functional potential of uncultured microbes, driving discoveries in fields ranging from ecology and evolution to drug discovery and biotechnology.

A Methodological Deep Dive: From Classical Algorithms to Modern Deep Learning

Metagenomic binning is a fundamental computational process in microbial ecology that involves grouping assembled genomic sequences (contigs) into discrete units representing individual microbial populations, known as Metagenome-Assembled Genomes (MAGs). This process enables researchers to reconstruct genomes directly from environmental samples without cultivation, thereby providing insights into the functional capabilities and ecological roles of uncultivated microorganisms [7] [22]. Classical binning tools primarily utilize unsupervised approaches that leverage sequence composition and coverage profile information to distinguish between genomes from different taxa [31] [5]. Among these classical tools, MetaBAT 2, MaxBin 2, and CONCOCT represent three widely adopted algorithms that have demonstrated utility in large-scale metagenomic studies [32] [7].

These tools operate on the principle that genomes from the same taxonomic group share similar sequence compositional characteristics, such as tetranucleotide frequencies, while also exhibiting coherent coverage profiles across multiple samples [5]. Despite their shared overall objective, each algorithm employs distinct computational strategies and mathematical models to achieve binning, resulting in complementary strengths and performance characteristics. The continued relevance of these established tools is evidenced by their inclusion in contemporary benchmarking studies and refinement pipelines, where they often serve as foundational components that can be further improved through ensemble approaches [7] [31].

Algorithmic Approaches and Methodologies

MetaBAT 2: Adaptive Binning Through Graph-Based Clustering

MetaBAT 2 employs an adaptive binning algorithm that eliminates the need for manual parameter tuning, which was a limitation in the original MetaBAT implementation [32] [33]. The algorithm utilizes tetranucleotide frequency (TNF) and abundance (coverage) profiles to calculate pairwise similarities between contigs. These similarities are integrated through a novel normalization approach where TNF scores are quantile-normalized using the abundance score distribution [32] [33]. A composite similarity score (S) is calculated as the geometric mean of the normalized TNF and abundance scores, with dynamic weighting that increases the influence of abundance information when more samples are available [32] [33].

The core clustering mechanism in MetaBAT 2 utilizes a graph-based approach where contigs represent nodes and similarity scores define edge weights [32] [33]. Unlike the k-medoid clustering used in MetaBAT 1, MetaBAT 2 implements an iterative graph building and partitioning procedure using a modified label propagation algorithm (LPA) [32] [33]. This algorithm deterministically partitions the graph by processing edges in order of strength and uses Fisher's method to evaluate contig membership across multiple neighborhoods [32] [33]. Additionally, MetaBAT 2 includes a recruitment step for smaller contigs (1-2.5 kb) that are assigned to bins based on correlation with existing member contigs [32] [33].

Figure 1: MetaBAT 2 algorithmic workflow showing the sequence from input contigs to final MAG generation.

MaxBin 2: Expectation-Maximization Based Binning

MaxBin 2 bins contigs with an Expectation-Maximization (EM) algorithm driven by tetranucleotide frequency and coverage information [7] [22]. In each iteration, the algorithm estimates the probability that a given contig belongs to each candidate genome and then updates the genome parameters to maximize the likelihood of the observed data, refining bin assignments until they stabilize [7] [22]. The tool also uses marker gene information to improve binning quality and to determine the appropriate number of bins [5].
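The alternation between estimating membership probabilities and updating parameters can be sketched with a one-dimensional Gaussian mixture over contig coverage. This is a stand-in for illustration only: MaxBin 2's actual probability model differs, and the function names, starting means, and variance floor are assumptions.

```python
import math


def normal_pdf(x: float, mean: float, var: float) -> float:
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def em_bin_by_coverage(coverages, init_means, n_iter=50, min_var=1.0):
    """Fit a 1-D Gaussian mixture by EM and return (means, hard bin labels)."""
    k = len(init_means)
    means = list(init_means)
    variances = [min_var] * k
    weights = [1.0 / k] * k
    resp = []
    for _ in range(n_iter):
        # E-step: probability that each contig belongs to each bin.
        resp = []
        for x in coverages:
            likes = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(likes)
            resp.append([like / total for like in likes])
        # M-step: re-estimate each bin's parameters from the soft assignments.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            if nj == 0:
                continue  # empty bin: keep its previous parameters
            means[j] = sum(r[j] * x for r, x in zip(resp, coverages)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, coverages)) / nj
            variances[j] = max(var, min_var)  # floor avoids numerical collapse
            weights[j] = nj / len(coverages)
    labels = [max(range(k), key=lambda j: r[j]) for r in resp]
    return means, labels


# Toy data: four contigs at roughly 10x coverage and four at roughly 30x.
coverages = [9.5, 10.0, 10.5, 10.2, 29.0, 30.0, 31.0, 30.5]
means, labels = em_bin_by_coverage(coverages, init_means=[5.0, 40.0])
print([round(m, 2) for m in means], labels)
```

The number of bins is fixed by `init_means` in this sketch; in the tool described above, that number is informed by marker genes rather than supplied by hand.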

CONCOCT: Dimensionality Reduction and Gaussian Mixture Models

CONCOCT integrates sequence composition and coverage as contig features, then applies dimensionality reduction using Principal Component Analysis (PCA) to reduce the feature space [7] [22]. The reduced representations are then clustered using a Gaussian Mixture Model (GMM) [7] [22]. This approach allows CONCOCT to model the probability distribution of contigs in the reduced feature space and assign them to bins based on these probabilistic models [34] [7].
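The dimensionality-reduction step can be illustrated with a dependency-free sketch that extracts the first principal component by power iteration and projects each contig onto it. This is not CONCOCT's implementation: the function name and the toy feature matrix are invented, only one component is kept, and the Gaussian mixture clustering that would follow is omitted.

```python
import math


def first_principal_component(rows, n_iter=200):
    """Return (unit direction, per-row scores) for the leading principal component."""
    n, d = len(rows), len(rows[0])
    # Centre every feature column on its mean.
    col_means = [sum(r[j] for r in rows) / n for j in range(d)]
    centred = [[r[j] - col_means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix of the centred features.
    cov = [[sum(c[a] * c[b] for c in centred) / (n - 1) for b in range(d)]
           for a in range(d)]
    # Power iteration converges to the eigenvector with the largest eigenvalue,
    # provided the start vector is not orthogonal to it.
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(n_iter):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    scores = [sum(c[j] * v[j] for j in range(d)) for c in centred]
    return v, scores


# Toy features: two informative, correlated columns plus one noisy column.
features = [
    [1.0, 1.0, 0.0], [2.0, 2.0, 0.1], [3.0, 3.0, 0.0],
    [10.0, 10.0, 0.1], [11.0, 11.0, 0.0], [12.0, 12.0, 0.1],
]
direction, scores = first_principal_component(features)
print([round(x, 3) for x in direction], [round(s, 2) for s in scores])
```

In this toy case the single retained coordinate already separates the two groups of contigs, which is the property the subsequent mixture model would exploit.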

Performance Benchmarking and Comparative Analysis

Recovery of Quality Genomes Across Datasets

Recent comprehensive benchmarking evaluating 13 binning tools across multiple datasets and sequencing technologies provides insights into the comparative performance of these classical binners [7] [22]. The study evaluated performance across seven "data-binning combinations" involving short-read, long-read, and hybrid data under co-assembly, single-sample, and multi-sample binning modes [7] [22]. Quality standards were defined according to the Minimum Information about a Metagenome-Assembled Genome (MIMAG) standards, with "moderate or higher" quality (MQ) MAGs defined as those with >50% completeness and <10% contamination, near-complete (NC) MAGs as >90% completeness and <5% contamination, and high-quality (HQ) MAGs meeting NC criteria while also containing 23S, 16S, and 5S rRNA genes and at least 18 tRNAs [7].
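The quality tiers defined above translate directly into a small classifier. The function name, argument names, and the "below MQ" label are hypothetical; the thresholds are the ones stated in the text.

```python
def classify_mag(completeness: float, contamination: float,
                 has_5s: bool = False, has_16s: bool = False,
                 has_23s: bool = False, n_trnas: int = 0) -> str:
    """Map completeness/contamination (in percent) and RNA gene content to a tier."""
    near_complete = completeness > 90 and contamination < 5
    has_all_rrnas = has_5s and has_16s and has_23s
    if near_complete and has_all_rrnas and n_trnas >= 18:
        return "HQ"
    if near_complete:
        return "NC"
    if completeness > 50 and contamination < 10:
        return "MQ"
    return "below MQ"


print(classify_mag(95.2, 1.3, True, True, True, 20))
print(classify_mag(95.2, 1.3))
print(classify_mag(72.0, 6.5))
print(classify_mag(45.0, 2.0))
```

Because the tiers are nested, any HQ genome also satisfies the NC and MQ criteria; the function returns only the highest tier reached.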

Table 1: Performance Comparison of Classical Binners in Recovery of Quality MAGs

| Binner | Rank in Short_Multi | Rank in Long_Multi | Rank in Hybrid_Multi | Classified as Efficient Binner | Key Strengths |
|---|---|---|---|---|---|
| MetaBAT 2 | Not in top 3 | Not in top 3 | Not in top 3 | Yes (excellent scalability) | Computational efficiency, speed, robust with large datasets [32] [7] |
| MaxBin 2 | Not in top 3 | Not in top 3 | Not in top 3 | No | Expectation-Maximization approach, uses marker genes [7] [5] |
| CONCOCT | Not in top 3 | Not in top 3 | Not in top 3 | No | PCA dimensionality reduction, Gaussian Mixture Models [7] |

Although the classical binners did not rank in the top three for any multi-sample binning mode in the 2025 benchmarking study, they remain relevant components of metagenomic analysis workflows [7]. MetaBAT 2 was specifically highlighted as an "efficient binner" because of its scalability and computational efficiency [7]. The benchmark also showed that multi-sample binning generally outperforms single-sample binning, with average improvements in MAG recovery of 125%, 54%, and 61% on marine short-read, long-read, and hybrid data, respectively [7].

Computational Efficiency and Scalability

MetaBAT 2 demonstrates notable computational efficiency, with the capability to bin a typical metagenome assembly in "only a few minutes on a single commodity workstation" [32] [33]. This efficiency is maintained even with large datasets containing millions of contigs, making it suitable for large-scale metagenomic studies [32] [33]. The software engineering optimizations implemented in MetaBAT 2 ensure that the increased algorithmic complexity does not compromise scalability [32] [33].

Table 2: Technical Specifications and Algorithmic Approaches

| Feature | MetaBAT 2 | MaxBin 2 | CONCOCT |
|---|---|---|---|
| Core Algorithm | Graph-based clustering with modified Label Propagation | Expectation-Maximization (EM) algorithm | PCA + Gaussian Mixture Model |
| Primary Features | Tetranucleotide frequency, coverage abundance, coverage correlation (multi-sample) | Tetranucleotide frequency, coverage abundance, marker genes | Tetranucleotide frequency, coverage abundance |
| Key Innovations | Adaptive parameter tuning, quantile normalization, small contig recruitment | Expectation-Maximization framework, marker gene integration | Dimensionality reduction, probabilistic clustering |
| Minimum Contig Length | 2,500 bp (default); 1,500 bp is the lowest accepted value [31] | 1,000 bp (default) [31] | 1,000 bp (default) |
| Multi-Sample Support | Yes, with coverage correlation [32] [33] | Yes, via multiple abundance files | Yes, via per-sample coverage columns |

Detailed Experimental Protocols

Standard Binning Protocol with MetaBAT 2

Input Requirements: MetaBAT 2 requires two primary inputs: (1) assembled contigs in FASTA format, and (2) read alignment files in BAM format providing coverage information [5]. The contigs file should contain the assembled sequences from metagenomic data, typically generated using assemblers such as MEGAHIT, metaSPAdes, or IDBA-UD [5]. The BAM files should contain read alignments to these contigs, which can be generated using mapping tools such as Bowtie2 or BWA [5].

Step-by-Step Procedure:

- Coverage Profiling: Calculate coverage information for each contig across all samples. This can be done with the `jgi_summarize_bam_contig_depths` utility included with MetaBAT 2, which processes BAM files to generate a coverage table [5].
- Binning Execution: Run MetaBAT 2 with the command `metabat2 -i [contigs.fasta] -a [depth.txt] -o [bin_dir/bin]` [5].
- Parameter Optimization (Optional): Although MetaBAT 2 tunes its clustering parameters adaptively, users can adjust the minimum contig length (`-m`; default 2,500 bp, lowest accepted value 1,500 bp) and other parameters for specific applications [31] [5].
- Output Interpretation: MetaBAT 2 writes one FASTA file per bin, with each file representing a putative MAG [5].
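The procedure above can be scripted. The sketch below only builds and prints the two command lines and does not execute them; the helper name and all file names are placeholders, and the BAM files are assumed to be coordinate-sorted alignments of each sample's reads against the same contigs.

```python
import shlex


def build_metabat2_commands(contigs: str, bam_files: list[str], out_prefix: str,
                            depth_file: str = "depth.txt"):
    """Return the coverage-profiling and binning commands as argument lists."""
    # Step 1: summarise per-contig depth across all BAM files.
    depth_cmd = ["jgi_summarize_bam_contig_depths",
                 "--outputDepth", depth_file, *bam_files]
    # Step 2: bin the contigs using the depth table produced in step 1.
    bin_cmd = ["metabat2", "-i", contigs, "-a", depth_file, "-o", out_prefix]
    return depth_cmd, bin_cmd


depth_cmd, bin_cmd = build_metabat2_commands(
    "contigs.fasta", ["sample1.sorted.bam", "sample2.sorted.bam"], "bin_dir/bin"
)
print(shlex.join(depth_cmd))
print(shlex.join(bin_cmd))
# To run them for real, pass each list to subprocess.run(cmd, check=True).
```

Keeping the commands as argument lists rather than shell strings avoids quoting problems when sample names contain spaces or other special characters.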

Quality Assessment and Validation

CheckM Analysis: Assess the completeness and contamination of generated MAGs using CheckM or CheckM2 [7] [5]. The standard approach involves:

- Run `checkm lineage_wf [bin_dir] [output_dir]` to analyze bin quality [5]. CheckM looks for files ending in `.fna` by default, so add `-x fa` when the bins carry the `.fa` extension that MetaBAT 2 writes.
- Interpret results using the completeness and contamination metrics, with thresholds of >50% completeness and <10% contamination for moderate quality, and >90% completeness and <5% contamination for near-complete MAGs [7].
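Once a quality report is available, the interpretation step can be automated. The sketch below parses a tab-separated table and lists the bins that pass each threshold. The column names (`Name`, `Completeness`, `Contamination`) and the example rows are assumptions; check them against the header of the report your CheckM or CheckM2 version actually produces.

```python
import csv
import io


def summarise_quality(tsv_text: str, name_col: str = "Name",
                      comp_col: str = "Completeness",
                      cont_col: str = "Contamination") -> dict[str, list[str]]:
    """Group bin names by the quality thresholds they satisfy."""
    summary: dict[str, list[str]] = {"MQ_or_better": [], "NC": []}
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    for row in reader:
        completeness = float(row[comp_col])
        contamination = float(row[cont_col])
        if completeness > 50 and contamination < 10:
            summary["MQ_or_better"].append(row[name_col])
        if completeness > 90 and contamination < 5:
            summary["NC"].append(row[name_col])
    return summary


# Invented example report with three bins.
report = (
    "Name\tCompleteness\tContamination\n"
    "bin.1\t96.4\t1.2\n"
    "bin.2\t71.0\t8.9\n"
    "bin.3\t38.5\t0.4\n"
)
summary = summarise_quality(report)
print(summary)
```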

Taxonomic Classification: Assign taxonomic labels to MAGs using tools such as GTDB-Tk for phylogenetic placement [5].

Functional Annotation: Annotate MAGs with functional information using tools like Prokka or DRAM to predict genes and metabolic pathways [5].

Table 3: Essential Computational Tools and Resources for Metagenomic Binning

| Tool/Resource | Category | Function | Application Notes |
|---|---|---|---|
| CheckM2 | Quality Assessment | Evaluates completeness and contamination of MAGs | Essential for benchmarking binning quality; predicts quality with machine-learning models rather than the lineage-specific marker sets used by the original CheckM [7] |
| Bowtie2/BWA | Read Mapping | Aligns sequencing reads to contigs | Generates BAM files for coverage profiling in MetaBAT 2 [5] |
| metaSPAdes/MEGAHIT | Assembly | Assembles reads into contigs | Provides input contigs for binning process [31] [5] |
| GTDB-Tk | Taxonomic Classification | Assigns taxonomic labels to MAGs | Places genomes in standardized taxonomic framework [5] |
| MetaWRAP | Bin Refinement | Combines and refines bins from multiple tools | Can integrate results from MetaBAT 2, MaxBin 2, and CONCOCT [7] [22] |

Figure 2: Comprehensive metagenomic binning workflow from raw sequencing data to downstream analysis.

Integration in Modern Metagenomic Workflows

While newer binning tools have emerged, including deep learning approaches like VAMB, SemiBin 2, and COMEBin, classical binning tools remain relevant components in modern metagenomic analysis pipelines [7] [22]. These classical algorithms are frequently used in conjunction with newer methods through bin refinement tools such as MetaWRAP, DAS Tool, and MAGScoT, which combine the strengths of multiple binning approaches to reconstruct higher-quality MAGs [7] [22].

MetaBAT 2 specifically maintains utility as an efficient binner for large-scale datasets where computational efficiency is a priority [7]. The tool's scalability makes it particularly suitable for studies involving hundreds of samples or complex microbial communities [32] [7]. Furthermore, the conceptual frameworks established by these classical algorithms continue to influence the development of new methods, with many contemporary tools building upon the fundamental principles of sequence composition and coverage utilization pioneered by these earlier approaches [7] [8].

When selecting binning tools for metagenomic studies, researchers should consider factors including dataset size, available computational resources, number of samples, and sequencing technology. For multi-sample studies with adequate computational resources, ensemble approaches that combine multiple binners followed by refinement typically yield the highest quality MAGs [7]. In resource-constrained environments or with exceptionally large datasets, MetaBAT 2 provides a balance of reasonable accuracy and computational efficiency [32] [7].

Metagenomic binning represents a critical computational step in microbiome research, enabling the reconstruction of microbial genomes from complex environmental sequences by clustering contigs from the same or closely related organisms [35]. The advent of deep learning has revolutionized this field by providing powerful frameworks for integrating heterogeneous data types and generating robust contig representations. Autoencoders and contrastive learning have emerged as two dominant paradigms, offering complementary approaches to address the significant challenges of noise, data sparsity, and efficient feature integration that characterize metagenomic datasets [36] [35]. These methods have demonstrated remarkable capabilities in recovering near-complete genomes from diverse microbial habitats, thereby expanding our understanding of previously uncultivated microbial populations and their functional roles in environments ranging from the human gut to marine ecosystems [36] [10].

The fundamental challenge in metagenomic binning lies in effectively combining two primary types of features: sequence composition (typically represented as k-mer frequencies) and coverage profiles across multiple samples [10]. Traditional methods often struggled with the efficient integration of these heterogeneous information sources, leading to suboptimal genome recovery rates. Deep learning approaches address this limitation by learning latent representations that naturally fuse these feature types while being robust to the inherent noise and technical variations in metagenomic data [36] [10]. This has enabled significant improvements in the quantity and quality of recovered metagenome-assembled genomes (MAGs), with particular benefits for identifying novel microbial taxa and characterizing their functional potential.

Core Deep Learning Architectures and Their Applications

Autoencoder-Based Binning Methods

Autoencoder architectures have become foundational frameworks for metagenomic binning, with variational autoencoders (VAEs) and adversarial autoencoders (AAEs) representing the most significant advances. VAMB pioneered the application of VAEs to metagenomic binning: an encoder maps each contig's input features to a latent distribution, and a decoder reconstructs the input from a sample drawn from that distribution [37]. The key innovation was regularizing the latent space with the Kullback-Leibler divergence from a standard (unit) Gaussian prior, which enabled the model to learn continuous, cluster-friendly representations that integrate both tetranucleotide frequencies and coverage profiles [37].
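The regularization term has a closed form when the encoder outputs a diagonal Gaussian, as in a standard VAE: KL = -0.5 * sum(1 + log(sigma^2) - mu^2 - sigma^2) over the latent dimensions. The sketch below evaluates that expression for one contig; the function name and the example values are illustrative, and this is the textbook formula rather than code taken from VAMB.

```python
import math


def kl_to_standard_normal(mu: list[float], log_var: list[float]) -> float:
    """KL divergence from N(mu, diag(exp(log_var))) to the standard normal N(0, I)."""
    return -0.5 * sum(
        1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var)
    )


# A latent code that already matches the prior incurs no penalty ...
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))
# ... while a shifted mean or a shrunken variance is penalised.
print(kl_to_standard_normal([1.0, 0.0], [0.0, 0.0]))
print(kl_to_standard_normal([0.0, 0.0], [-2.0, 0.0]))
```

During training this penalty is added to the reconstruction loss, which pulls the latent codes of all contigs toward a common, compact region where clustering is easier.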